Measuring Robustness in Deep Learning Based Compressive Sensing


Feb 11, 2021

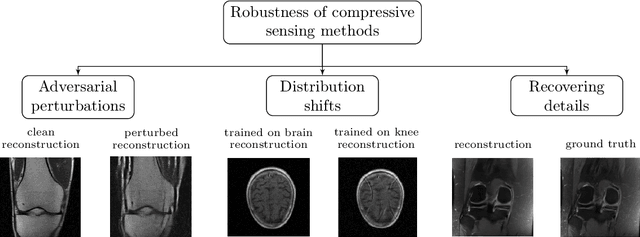

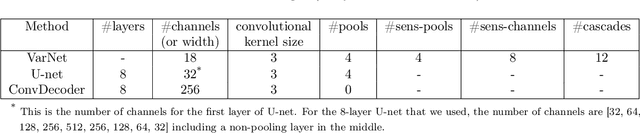

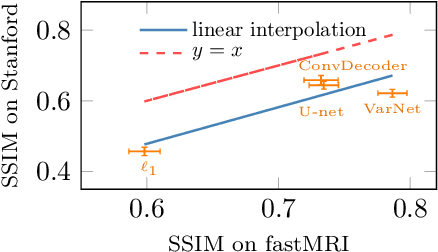

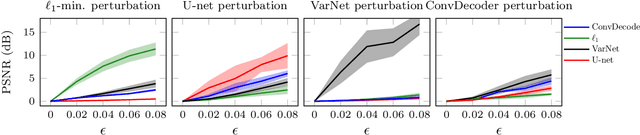

Deep neural networks give state-of-the-art performance for inverse problems such as reconstructing images from few and noisy measurements, a problem arising in accelerated magnetic resonance imaging (MRI). However, recent works have raised concerns that deep-learning-based image reconstruction methods are sensitive to perturbations and are less robust than traditional methods: neural networks (i) may be sensitive to small, yet adversarially selected perturbations, (ii) may perform poorly under distribution shifts, and (iii) may fail to recover small but important features in the image. To understand whether neural networks are sensitive to such perturbations, in this work we measure the robustness of different approaches for image reconstruction, including trained neural networks, un-trained networks, and traditional sparsity-based methods. We find, contrary to prior works, that both trained and un-trained methods are vulnerable to adversarial perturbations. Moreover, we find that both trained and un-trained methods tuned for a particular dataset suffer very similarly from distribution shifts. Finally, we demonstrate that an image reconstruction method that achieves higher reconstruction accuracy also performs better at accurately recovering fine details. Thus, current state-of-the-art deep-learning-based image reconstruction methods enable a performance gain over traditional methods without compromising robustness.
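The abstract does not say how the adversarial perturbations are computed. A common approach, assumed here, is projected gradient ascent: search for a measurement perturbation e with a bounded l2 norm that maximizes the reconstruction error, then compare the resulting error against the clean error and against a random perturbation of the same norm. The sketch below is a minimal illustration under stated assumptions, not the paper's setup. The reconstruction method is a hypothetical linear ridge (Tikhonov) reconstructor chosen only because its gradient is easy to write down; it is not one of the trained, un-trained, or sparsity-based methods studied. The names `ridge_reconstructor`, `relative_error`, and `adversarial_perturbation`, and the problem sizes, are hypothetical.

```python
import numpy as np


def ridge_reconstructor(A, lam=1e-2):
    """Hypothetical stand-in reconstruction: x_hat = R @ y with
    R = (A^T A + lam I)^{-1} A^T (Tikhonov-regularized least squares)."""
    n = A.shape[1]
    return np.linalg.solve(A.T @ A + lam * np.eye(n), A.T)


def relative_error(x_hat, x):
    """Relative l2 reconstruction error ||x_hat - x|| / ||x||."""
    return np.linalg.norm(x_hat - x) / np.linalg.norm(x)


def adversarial_perturbation(R, x, y, eps, steps=100, step_size=None):
    """Projected gradient ascent on ||R(y + e) - x||^2 subject to ||e||_2 <= eps.
    Returns the best perturbation found and its relative error."""
    if step_size is None:
        step_size = eps / 4
    e = np.zeros_like(y)
    best_e, best_err = e.copy(), relative_error(R @ y, x)  # start from the clean error
    for _ in range(steps):
        residual = R @ (y + e) - x
        grad = 2 * R.T @ residual  # gradient of ||R(y + e) - x||^2 w.r.t. e
        gnorm = np.linalg.norm(grad)
        if gnorm == 0:
            break
        e = e + step_size * grad / gnorm  # normalized ascent step
        norm_e = np.linalg.norm(e)
        if norm_e > eps:
            e = e * (eps / norm_e)  # project back onto the l2 ball of radius eps
        err = relative_error(R @ (y + e), x)
        if err > best_err:
            best_e, best_err = e.copy(), err
    return best_e, best_err


# Toy compressive-sensing problem: m < n random Gaussian measurements of a sparse signal.
rng = np.random.default_rng(0)
n, m = 128, 48
A = rng.standard_normal((m, n)) / np.sqrt(m)
x = np.zeros(n)
x[rng.choice(n, 8, replace=False)] = rng.standard_normal(8)
y = A @ x

R = ridge_reconstructor(A)
eps = 0.05 * np.linalg.norm(y)  # perturbation budget: 5% of the measurement norm

clean_err = relative_error(R @ y, x)
e_adv, adv_err = adversarial_perturbation(R, x, y, eps)

u = rng.standard_normal(m)
e_rand = eps * u / np.linalg.norm(u)  # random perturbation with the same norm
rand_err = relative_error(R @ (y + e_rand), x)

print(f"clean {clean_err:.3f}  random {rand_err:.3f}  adversarial {adv_err:.3f}")
```

Tracking the best iterate (starting from the unperturbed error) guarantees the reported adversarial error is never below the clean error. For a neural-network reconstructor, the hand-written gradient would be replaced by automatic differentiation through the network; the projection step stays the same.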
