Mean-Field Controllability and Decentralized Stabilization of Markov Chains, Part II: Asymptotic Controllability and Polynomial Feedbacks


Mar 28, 2017

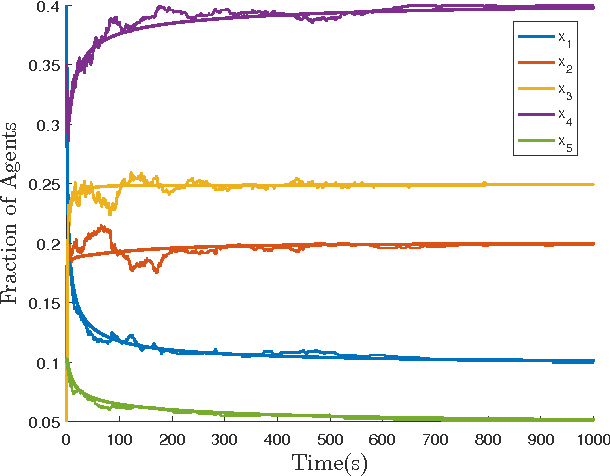

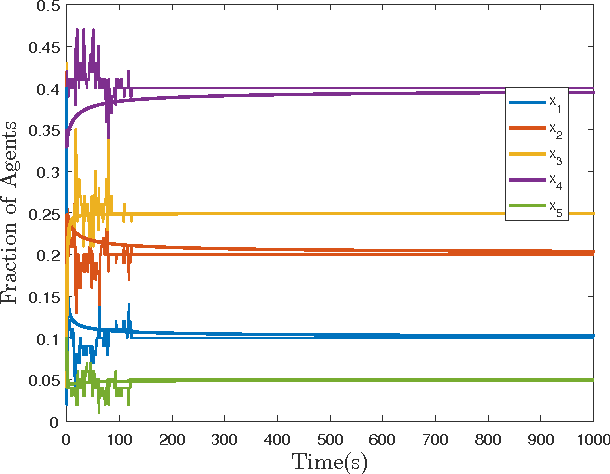

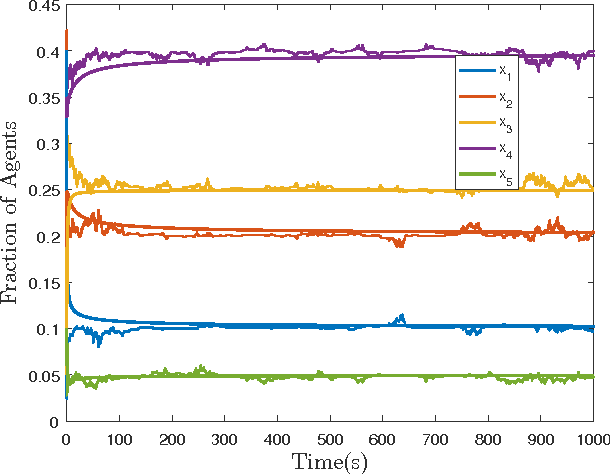

This paper, the second of a two-part series, presents a method for mean-field feedback stabilization of a swarm of agents on a finite state space whose time evolution is modeled as a continuous-time Markov chain (CTMC). The resulting mean-field control problem is that of controlling a nonlinear system with desired global stability properties. First, we prove that any probability distribution with a strongly connected support can be stabilized using time-invariant inputs. Second, we show the asymptotic controllability of all possible probability distributions, including distributions that assign zero density to some states and that do not necessarily have a strongly connected support. Third, we demonstrate that, whenever the graph is strongly connected and bidirected, there always exists a globally asymptotically stabilizing decentralized density feedback law with the additional property that the control inputs are zero at equilibrium. Finally, the problem of synthesizing closed-loop polynomial feedback is framed as an optimization problem using state-of-the-art sum-of-squares optimization tools. The optimization problem searches for polynomial feedback laws that make a candidate Lyapunov function a stability certificate for the resulting closed-loop system. Our methodology is tested for two cases on a five-vertex graph, and the stabilization properties of the constructed control laws are validated with numerical simulations of the corresponding system of ordinary differential equations.
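To make the idea of a decentralized density feedback with zero control at equilibrium concrete, the following is a minimal sketch, not the paper's construction. It assumes a hypothetical five-vertex path graph with edges in both directions, a hypothetical target distribution with strictly positive entries, and an illustrative non-polynomial rate law `u_ij(x) = gain * max(0, x_i/target_i - x_j/target_j)` that uses only the densities at the two endpoints of each edge. The names `EDGES`, `density_rhs`, and `simulate` are invented for this example, and the mean-field ODE is integrated with a plain forward Euler step.

```python
import numpy as np

# Hypothetical bidirected, strongly connected graph on five vertices (a path).
_UNDIRECTED = [(0, 1), (1, 2), (2, 3), (3, 4)]
EDGES = _UNDIRECTED + [(j, i) for (i, j) in _UNDIRECTED]


def density_rhs(x, target, gain=1.0):
    """Right-hand side of the mean-field (forward) equation for densities x.

    Assumed illustrative feedback: the transition rate on edge i -> j is
    gain * max(0, x_i/target_i - x_j/target_j), which depends only on the
    densities at i and j and vanishes when x equals the target.
    """
    ratio = x / target
    dx = np.zeros_like(x)
    for i, j in EDGES:
        rate = gain * max(0.0, ratio[i] - ratio[j])  # nonnegative CTMC rate
        flow = rate * x[i]                           # probability mass moving i -> j
        dx[i] -= flow
        dx[j] += flow
    return dx


def simulate(x0, target, dt=0.01, steps=30000):
    """Integrate the density ODE with forward Euler from the initial density x0."""
    x = np.array(x0, dtype=float)
    for _ in range(steps):
        x = x + dt * density_rhs(x, target)
    return x


# Hypothetical target distribution (all entries positive, sums to one).
target = np.array([0.1, 0.2, 0.4, 0.2, 0.1])
# Start with the whole swarm concentrated on vertex 0.
x_final = simulate([1.0, 0.0, 0.0, 0.0, 0.0], target)
print(np.round(x_final, 4))
```

Under this assumed law, the function `V(x) = sum_i x_i^2 / (2 * target_i)` is nonincreasing along trajectories, which is the kind of Lyapunov certificate the abstract refers to. The polynomial feedback laws in the paper are instead synthesized by sum-of-squares optimization, which this sketch does not attempt.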
