Markov-Lipschitz Deep Learning

Paper and Code

Jun 21, 2020

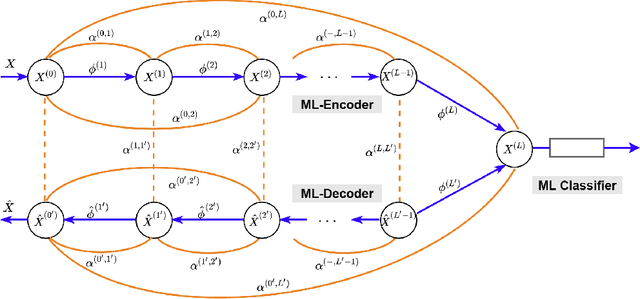

In this paper, we propose a novel framework, called Markov-Lipschitz deep learning (MLDL), for manifold learning and data generation. It enhances layer-wise transformations of neural networks by optimizing isometry (geometry-preserving) and stability of the mappings. A prior constraint of locally isometric smoothness (LIS) is imposed across-layers and encoded via a Markov random field (MRF)-Gibbs distribution, and consequently layer-wise vector transformations are replaced by LIS-constrained metric homeomorphisms. These lead to the best possible solutions as measured by locally geometric distortion and locally bi-Lipschitz continuity. Extensive experiments, comparisons and ablation study demonstrate significant advantages of MLDL for manifold learning and data generation.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge