Manifold Regularized Deep Neural Networks using Adversarial Examples


Jan 14, 2016

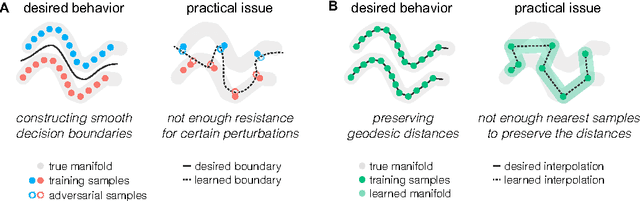

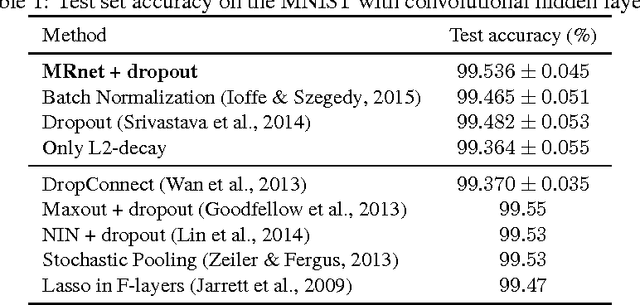

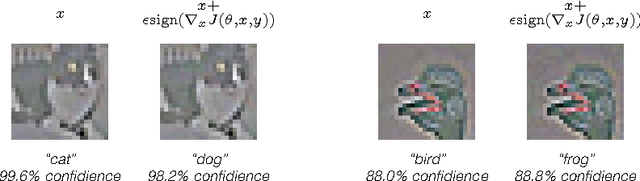

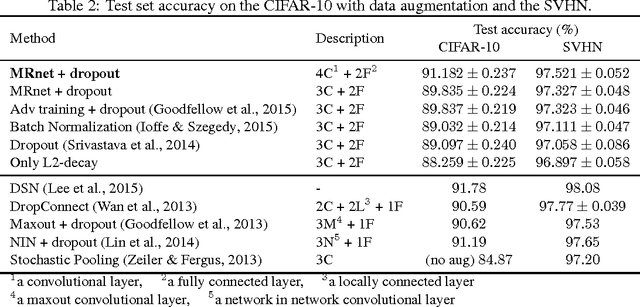

Learning meaningful representations with deep neural networks requires both efficient training schemes and well-structured networks. Currently, stochastic gradient descent with momentum, combined with dropout, is one of the most popular training protocols. More advanced methods built on it (e.g., Maxout and Batch Normalization) have been proposed in recent years, but most still suffer from performance degradation caused by small input perturbations, known as adversarial examples. To address this issue, we propose manifold regularized networks (MRnet), which use a novel training objective that minimizes the difference between the multi-layer embeddings of samples and those of their adversarial counterparts. Our experimental results demonstrate that MRnet is more resilient to adversarial examples and helps generalize representations on manifolds. Furthermore, combining MRnet with dropout achieves competitive classification performance on three well-known benchmarks: MNIST, CIFAR-10, and SVHN.
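As a rough illustration of the kind of objective described above, the following NumPy sketch adds to the usual cross-entropy a penalty on the distance between the layer-wise embeddings of each sample and those of an adversarial copy. The abstract does not specify the details, so everything concrete here is an assumption made for illustration: the two-layer tanh network, the fast-gradient-sign perturbation with step `eps`, the squared Euclidean distance, the weight `lam`, and all function names. It only evaluates the objective; a training loop would additionally differentiate it with respect to the parameters.

```python
import numpy as np

def embeddings(x, params):
    """Layer-wise embeddings of a small MLP (hypothetical architecture)."""
    W1, b1, W2, b2 = params
    h = np.tanh(x @ W1 + b1)   # hidden-layer embedding
    z = h @ W2 + b2            # output-layer embedding (logits)
    return [h, z]

def softmax(z):
    z = z - z.max(axis=1, keepdims=True)  # shift for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

def cross_entropy(x, y_onehot, params):
    """Per-sample cross-entropy of the network's prediction."""
    p = softmax(embeddings(x, params)[-1])
    return -np.sum(y_onehot * np.log(p + 1e-12), axis=1)

def input_gradient(x, y_onehot, params):
    """Gradient of each sample's cross-entropy with respect to its input."""
    W1, b1, W2, b2 = params
    h, z = embeddings(x, params)
    dz = softmax(z) - y_onehot      # d loss / d logits
    dh = dz @ W2.T                  # back through the output layer
    da = dh * (1.0 - h ** 2)        # back through tanh
    return da @ W1.T                # back through the first layer

def adversarial(x, y_onehot, params, eps):
    """Assumed perturbation: one fast-gradient-sign step of size eps."""
    return x + eps * np.sign(input_gradient(x, y_onehot, params))

def manifold_regularized_loss(x, y_onehot, params, eps=0.1, lam=1.0):
    """Cross-entropy plus lam times the clean-vs-adversarial embedding gap."""
    x_adv = adversarial(x, y_onehot, params, eps)
    clean = embeddings(x, params)
    adv = embeddings(x_adv, params)
    # Mean squared Euclidean distance, summed over all layers.
    penalty = sum(np.mean(np.sum((c - a) ** 2, axis=1))
                  for c, a in zip(clean, adv))
    loss = np.mean(cross_entropy(x, y_onehot, params)) + lam * penalty
    return loss, penalty

# Toy data: 8 samples, 5 input features, 3 classes.
rng = np.random.default_rng(0)
params = (rng.normal(size=(5, 4)), np.zeros(4),
          rng.normal(size=(4, 3)), np.zeros(3))
x = rng.normal(size=(8, 5))
y = np.eye(3)[rng.integers(0, 3, size=8)]

loss, penalty = manifold_regularized_loss(x, y, params, eps=0.1, lam=1.0)
print("loss:", loss, "embedding penalty:", penalty)
```

Setting `eps=0` makes the adversarial copy coincide with the original sample, so the penalty vanishes and the objective reduces to plain cross-entropy; larger `lam` weights embedding consistency more heavily relative to classification accuracy.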
