Magic3DSketch: Create Colorful 3D Models From Sketch-Based 3D Modeling Guided by Text and Language-Image Pre-Training

Paper and Code

Jul 27, 2024

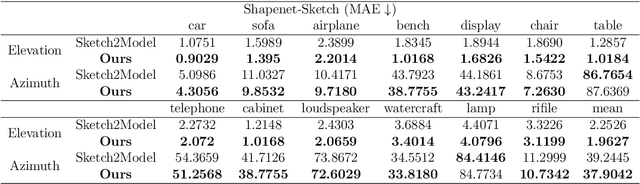

The requirement for 3D content is growing as AR/VR application emerges. At the same time, 3D modelling is only available for skillful experts, because traditional methods like Computer-Aided Design (CAD) are often too labor-intensive and skill-demanding, making it challenging for novice users. Our proposed method, Magic3DSketch, employs a novel technique that encodes sketches to predict a 3D mesh, guided by text descriptions and leveraging external prior knowledge obtained through text and language-image pre-training. The integration of language-image pre-trained neural networks complements the sparse and ambiguous nature of single-view sketch inputs. Our method is also more useful and offers higher degree of controllability compared to existing text-to-3D approaches, according to our user study. Moreover, Magic3DSketch achieves state-of-the-art performance in both synthetic and real dataset with the capability of producing more detailed structures and realistic shapes with the help of text input. Users are also more satisfied with models obtained by Magic3DSketch according to our user study. Additionally, we are also the first, to our knowledge, add color based on text description to the sketch-derived shapes. By combining sketches and text guidance with the help of language-image pretrained models, our Magic3DSketch can allow novice users to create custom 3D models with minimal effort and maximum creative freedom, with the potential to revolutionize future 3D modeling pipelines.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge