MagDR: Mask-guided Detection and Reconstruction for Defending Deepfakes


Mar 26, 2021

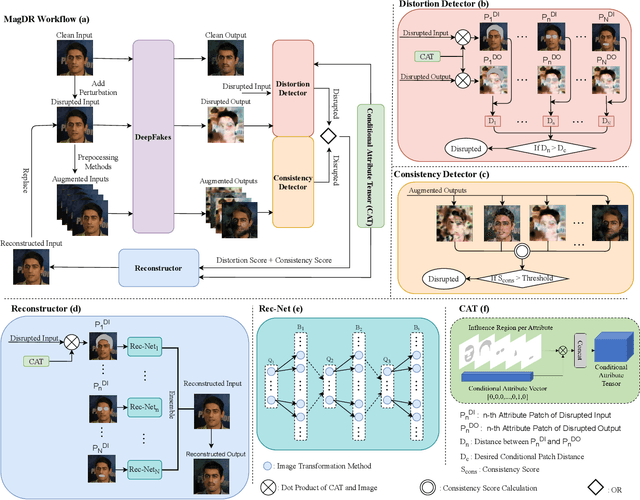

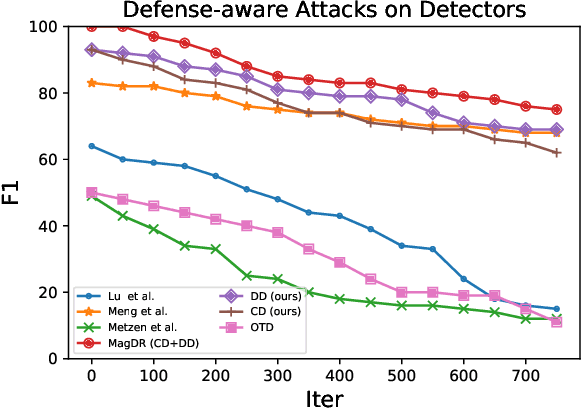

Deepfakes have raised serious concerns about the authenticity of visual content. Prior work has shown that deepfakes can be disrupted by adding adversarial perturbations to the source data, but we argue that the threat has not yet been eliminated. This paper presents MagDR, a mask-guided detection and reconstruction pipeline for defending deepfakes from adversarial attacks. MagDR starts with a detection module that defines a few criteria to judge the abnormality of deepfake outputs, and then uses this module to guide a learnable reconstruction procedure. Adaptive masks are extracted to capture changes in local facial regions. In experiments, MagDR defends three main deepfake tasks, and the learned reconstruction pipeline transfers across input data, showing promising performance in defending against both black-box and white-box attacks.
