Machine Unlearning on Pre-trained Models by Residual Feature Alignment Using LoRA


Nov 13, 2024

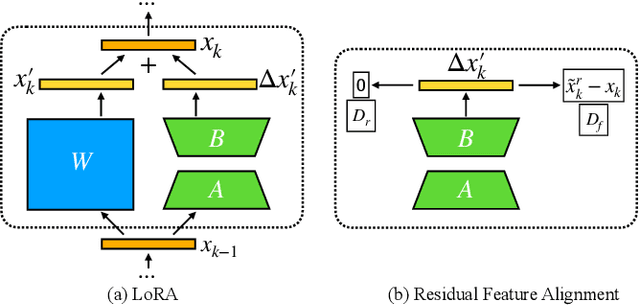

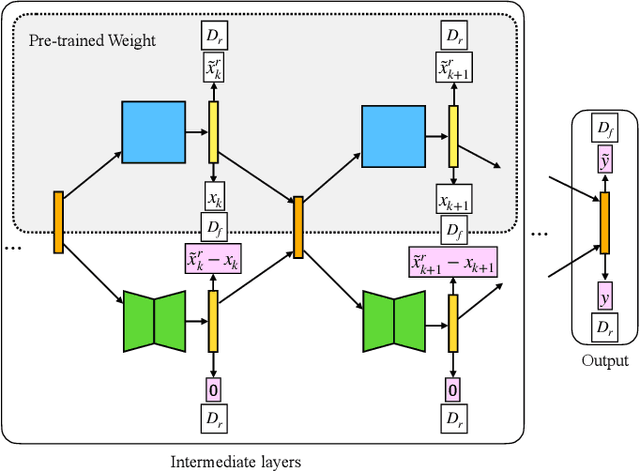

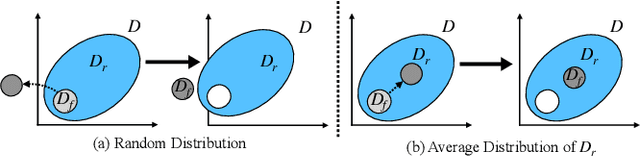

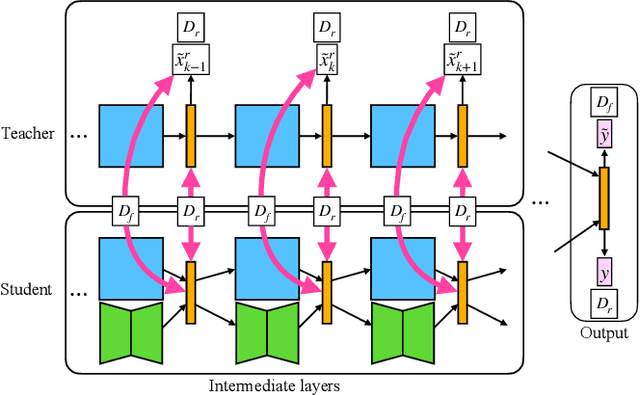

Machine unlearning is an emerging technique that removes the influence of a subset of the training data from a trained model without affecting the model's performance on the remaining data. It is becoming increasingly important for protecting user privacy and eliminating harmful or outdated data. The key challenge lies in unlearning specific information effectively and efficiently without compromising the model's utility on the retained data. For pre-trained models, fine-tuning is an important way to achieve the unlearning target. Previous work typically fine-tunes all of the model's parameters, which incurs significant computational cost. In addition, the fine-tuning process may shift the intermediate-layer features, affecting the model's overall utility. In this work, we propose a novel and efficient machine unlearning method for pre-trained models, which we term Residual Feature Alignment Unlearning. Specifically, we leverage LoRA (Low-Rank Adaptation) to decompose the model's intermediate features into pre-trained features and residual features. By adjusting the residual features, we align the unlearned model with the pre-trained model at the intermediate feature level to achieve both the unlearning and the retention targets. The method aims to learn zero residuals on the retained set and shifted residuals on the unlearning set. Extensive experiments on numerous datasets validate the effectiveness of our approach.
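The decomposition described in the abstract can be illustrated with a minimal sketch for a single linear layer. Everything below is an assumption made for illustration and is not taken from the paper: the helper names (`matvec`, `lora_forward`, `alignment_loss`), the squared-error form of the objective, and the idea of supplying an explicit per-sample target shift for the unlearning set. It only shows how a LoRA-adapted layer splits into a frozen pre-trained feature `W x` and a low-rank residual feature `B (A x)`, and how an objective could push the residual to zero on retained samples and toward a shifted value on samples to be unlearned.

```python
def matvec(M, x):
    """Multiply a matrix (list of rows) by a vector (list of floats)."""
    return [sum(m * v for m, v in zip(row, x)) for row in M]


def lora_forward(W, A, B, x):
    """Split one LoRA-adapted linear layer into its two feature parts.

    W is the frozen pre-trained weight; A (r x d_in) and B (d_out x r)
    are the trainable low-rank factors. The layer output would be
    pretrained + residual, element-wise.
    """
    pretrained = matvec(W, x)            # feature of the pre-trained model
    residual = matvec(B, matvec(A, x))   # feature added by the LoRA branch
    return pretrained, residual


def sq_norm(v):
    """Squared Euclidean norm of a vector."""
    return sum(t * t for t in v)


def alignment_loss(W, A, B, retain_batch, forget_batch, forget_targets):
    """Hypothetical objective: zero residuals on retained samples,
    residuals close to an assumed target shift on samples to unlearn."""
    retain_term = sum(
        sq_norm(lora_forward(W, A, B, x)[1]) for x in retain_batch
    ) / len(retain_batch)
    forget_term = sum(
        sq_norm([r - t for r, t in zip(lora_forward(W, A, B, x)[1], target)])
        for x, target in zip(forget_batch, forget_targets)
    ) / len(forget_batch)
    return retain_term + forget_term
```

With the usual LoRA initialization, where `B` starts at zero, every residual is zero, so the retained-set term of this sketch vanishes and only the unlearning-set term drives the low-rank factors. How the shifted residuals are actually defined, and which layers are aligned, is specified in the paper rather than here.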
