Machine learning in spectral domain

May 29, 2020

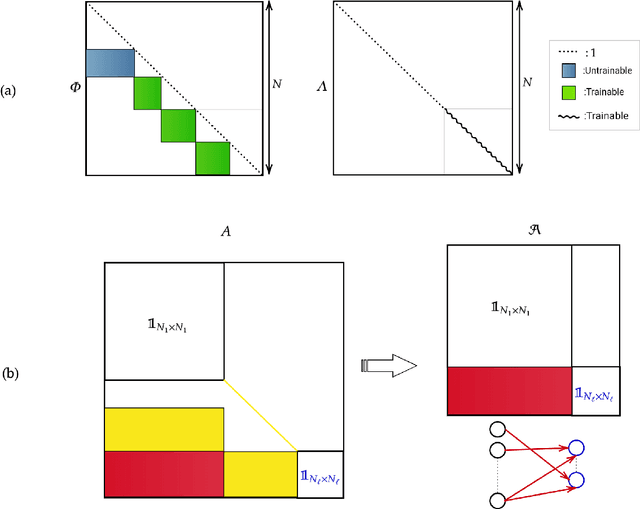

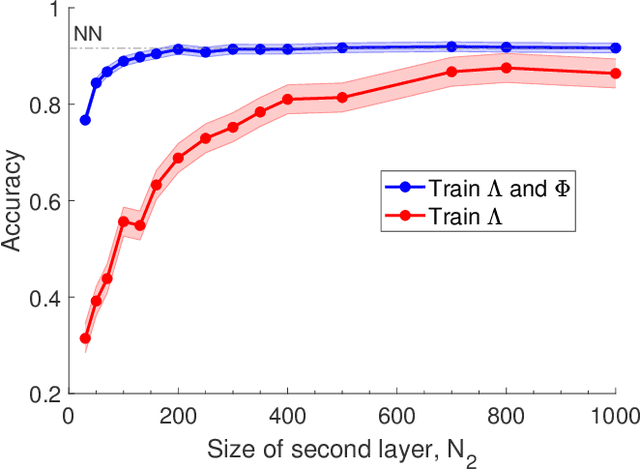

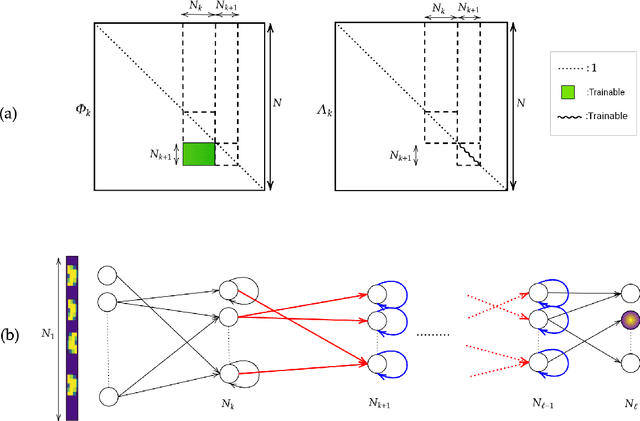

Deep neural networks are usually trained in the space of the nodes, by adjusting the weights of existing links via suitable optimization protocols. We here propose a radically new approach which anchors the learning process to reciprocal space. Specifically, the training acts on the spectral domain and seeks to modify the eigenvectors and eigenvalues of transfer operators in direct space. The proposed method is ductile and can be tailored to return either linear or nonlinear classifiers. Its performance is competitive with standard schemes, while allowing for a significant reduction of the learning parameter space. Spectral learning restricted to eigenvalues could also be employed for pre-training of the deep neural network, in conjunction with conventional machine-learning schemes. Further, it is surmised that the nested indentation of eigenvectors that defines the core idea of spectral learning could help in understanding why deep networks work as well as they do.
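To make the idea of training on eigenvectors and eigenvalues more concrete, here is a minimal NumPy sketch of how a layer's weights might be recovered from a spectral parametrization. The abstract does not specify the construction, so everything below is an assumption made for illustration: the block-triangular form of the eigenvector matrix, the function name `spectral_layer_weights`, and the parameter names `phi`, `lam_in`, and `lam_out` are all hypothetical.

```python
import numpy as np


def spectral_layer_weights(phi, lam_in, lam_out):
    """Return the direct-space weights implied by a spectral parametrization.

    Assumption (not taken from the abstract): the transfer operator between a
    layer of n_in nodes and a layer of n_out nodes is A = Phi @ Lam @ Phi^{-1},
    where Phi is the identity plus a lower-left block `phi` (n_out x n_in)
    and Lam is diagonal with entries (lam_in, lam_out).
    """
    n_out, n_in = phi.shape
    n = n_in + n_out

    # Eigenvector matrix: identity with the trainable block in the lower left.
    Phi = np.eye(n)
    Phi[n_in:, :n_in] = phi

    # Diagonal matrix of eigenvalues.
    Lam = np.diag(np.concatenate([lam_in, lam_out]))

    # Transfer operator in direct space.
    A = Phi @ Lam @ np.linalg.inv(Phi)

    # The lower-left block maps activations of the first layer to the second.
    return A[n_in:, :n_in]


# Example: 4 input nodes, 3 output nodes, random spectral parameters.
rng = np.random.default_rng(0)
phi = rng.normal(size=(3, 4))
lam_in = rng.normal(size=4)
lam_out = rng.normal(size=3)
W = spectral_layer_weights(phi, lam_in, lam_out)  # shape (3, 4)
```

Under this assumed structure, each weight reduces to `W[i, j] = phi[i, j] * (lam_in[j] - lam_out[i])`. If `phi` were held fixed and only the eigenvalues trained, the layer would have `n_in + n_out` trainable parameters instead of `n_in * n_out`, which is one way to read the abstract's claim of a reduced parameter space.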
