LP-BERT: Multi-task Pre-training Knowledge Graph BERT for Link Prediction


Jan 13, 2022

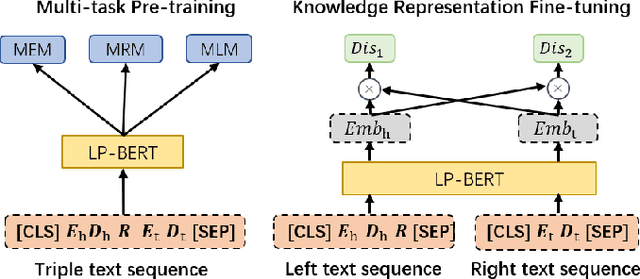

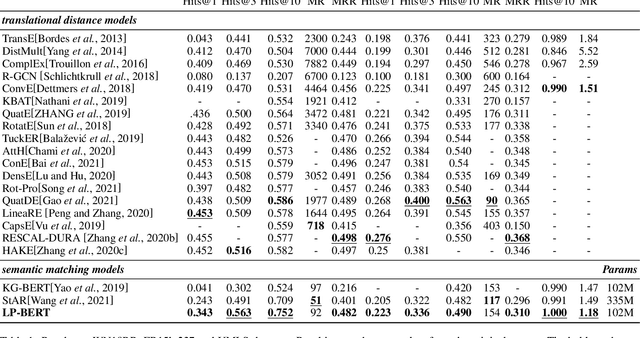

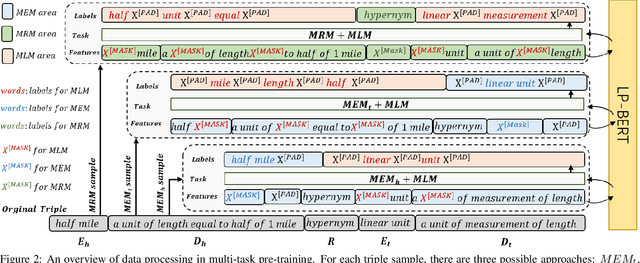

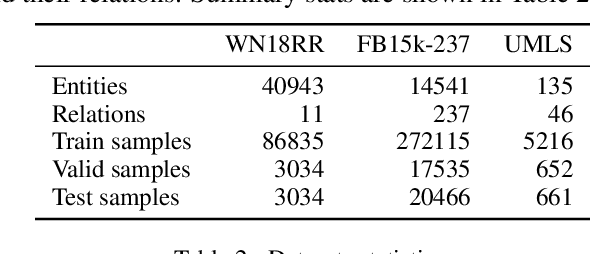

Link prediction plays a significant role in knowledge graphs, which are an important resource for many artificial intelligence tasks but are often limited by incompleteness. In this paper, we propose a knowledge graph BERT for link prediction, named LP-BERT, which contains two training stages: multi-task pre-training and knowledge graph fine-tuning. The pre-training strategy not only uses the Mask Language Model (MLM) to learn knowledge from the context corpus, but also introduces the Mask Entity Model (MEM) and Mask Relation Model (MRM), which learn relationship information from triples by predicting semantic-based entity and relation elements. In this way, structured triple relation information is transformed into unstructured semantic information that can be integrated into the pre-trained model together with context corpus information. In the fine-tuning phase, inspired by contrastive learning, we carry out triple-style negative sampling within each sample batch, which greatly increases the proportion of negative samples while keeping the training time almost unchanged. Furthermore, we propose a data augmentation method based on the inverse relationship of triples to further increase sample diversity. We achieve state-of-the-art results on the WN18RR and UMLS datasets; in particular, the Hits@10 indicator improves by 5% over the previous state-of-the-art result on the WN18RR dataset.
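
The two fine-tuning ideas in the abstract, inverse-relation augmentation and in-batch negative sampling, can be illustrated with a minimal sketch at the level of symbolic triples. This is not the authors' implementation: the function names, the `_inverse` relation suffix, and the choice to corrupt only tails are assumptions made for illustration, and the actual model operates on BERT encodings of entity and relation text rather than on raw strings.

```python
from typing import List, Set, Tuple

Triple = Tuple[str, str, str]  # (head, relation, tail)


def add_inverse_triples(triples: List[Triple], suffix: str = "_inverse") -> List[Triple]:
    """Augment the data with one reversed triple per original triple.

    For (h, r, t) we add (t, r + suffix, h). The suffix naming is an
    assumption of this sketch, not something stated in the abstract.
    """
    return triples + [(t, r + suffix, h) for h, r, t in triples]


def in_batch_negatives(batch: List[Triple], known: Set[Triple]) -> List[Triple]:
    """Build negatives by pairing each (head, relation) with other tails in the batch.

    Candidates that are known true triples are skipped, and duplicates are
    dropped while preserving first-seen order.
    """
    negatives: List[Triple] = []
    seen: Set[Triple] = set()
    for i, (h, r, _) in enumerate(batch):
        for j, (_, _, t) in enumerate(batch):
            if i == j:
                continue  # a triple's own tail gives the positive, not a negative
            candidate = (h, r, t)
            if candidate in known or candidate in seen:
                continue
            seen.add(candidate)
            negatives.append(candidate)
    return negatives


if __name__ == "__main__":
    # Toy triples, invented for this example.
    train = [("a", "r1", "b"), ("c", "r2", "d"), ("e", "r1", "b")]
    augmented = add_inverse_triples(train)
    print(len(augmented))
    print(in_batch_negatives(train, known=set(train)))
```

In this sketch a batch of B triples yields up to B(B-1) negative candidates using only entities already present in the batch, which is one way to read the claim that the share of negatives grows without a matching growth in training time.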
