Low-rank Label Propagation for Semi-supervised Learning with 100 Millions Samples


Feb 28, 2017

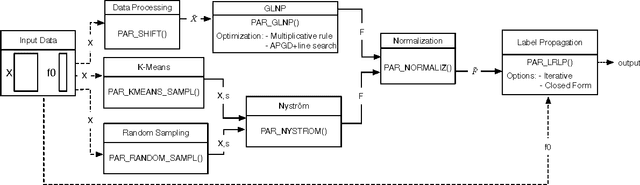

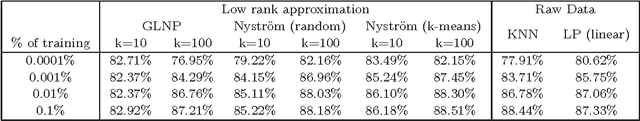

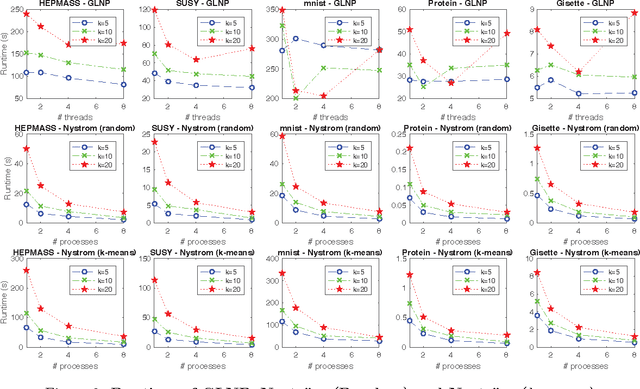

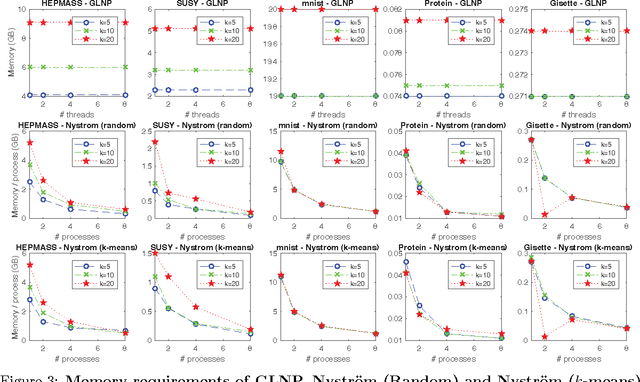

The success of semi-supervised learning depends crucially on scaling to the large amounts of unlabeled data needed to capture the underlying manifold structure for better classification. Because computing the pairwise similarity between training points is prohibitively expensive for most kinds of input data, there is currently no general, ready-to-use semi-supervised learning method or tool for learning with tens of millions of data points or more. In this paper, we adopt the ideas of two low-rank label propagation algorithms, GLNP (Global Linear Neighborhood Propagation) and Kernel Nyström Approximation, and implement parallelized versions of both, accelerated with Nesterov's accelerated projected gradient descent, for Big-data Label Propagation (BigLP). The parallel algorithms are tested on five real datasets ranging from 7,000 to 10,000,000 samples and on a simulated dataset of 100,000,000 samples. In the experiments, the implementation scales to datasets with 100,000,000 samples and hundreds of features, and the algorithms significantly improve prediction accuracy when only a very small percentage of the data is labeled. The results demonstrate that the BigLP implementation is highly scalable to big data and effective in utilizing unlabeled data for semi-supervised learning.
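To make the low-rank idea concrete, the following is a minimal single-machine sketch, not the BigLP implementation. It assumes a Nyström factor F built from randomly chosen landmark points, so that the n x n similarity matrix is never formed, and it assumes the standard label propagation objective f'(I - FF')f + mu * ||f - y||^2, minimised with Nesterov-accelerated projected gradient steps onto the box [-1, 1]. The function names, the RBF kernel, the rescaling of F to unit spectral norm (used here in place of a proper graph normalization), and the values of `gamma` and `mu` are all illustrative assumptions; the GLNP variant and the parallelization described in the abstract are not shown.

```python
import numpy as np


def rbf_kernel(a, b, gamma):
    """Gaussian (RBF) kernel matrix between the rows of a and the rows of b."""
    sq_dists = (
        np.sum(a * a, axis=1)[:, None]
        + np.sum(b * b, axis=1)[None, :]
        - 2.0 * a @ b.T
    )
    return np.exp(-gamma * np.maximum(sq_dists, 0.0))


def nystrom_factor(x, n_landmarks, gamma, rng):
    """Return F (n x r) with F @ F.T approximating the kernel matrix.

    F is rescaled so that the largest eigenvalue of F @ F.T equals 1
    (an assumption of this sketch, which keeps I - F @ F.T positive semidefinite).
    """
    idx = rng.choice(x.shape[0], size=n_landmarks, replace=False)
    k_nm = rbf_kernel(x, x[idx], gamma)       # n x m, the only large matrix
    k_mm = rbf_kernel(x[idx], x[idx], gamma)  # m x m landmark kernel
    evals, evecs = np.linalg.eigh(k_mm)
    keep = evals > 1e-8 * evals.max()         # drop near-null directions
    factor = k_nm @ (evecs[:, keep] / np.sqrt(evals[keep]))
    top = np.linalg.eigvalsh(factor.T @ factor).max()
    return factor / np.sqrt(top)


def low_rank_label_propagation(factor, y, mu=0.1, n_iter=300):
    """Minimise f'(I - F F')f + mu * ||f - y||^2 over the box [-1, 1]^n.

    y holds +1 / -1 for labeled points and 0 for unlabeled points.
    Each iteration costs O(n * r) because F @ F.T is never materialised.
    """
    lipschitz = 2.0 * (1.0 + mu)  # upper bound on the Hessian's largest eigenvalue
    f = np.zeros_like(y, dtype=float)
    z = f.copy()                  # extrapolated (momentum) point
    t = 1.0
    for _ in range(n_iter):
        grad = 2.0 * (z - factor @ (factor.T @ z)) + 2.0 * mu * (z - y)
        f_next = np.clip(z - grad / lipschitz, -1.0, 1.0)  # projected step
        t_next = 0.5 * (1.0 + np.sqrt(1.0 + 4.0 * t * t))
        z = f_next + ((t - 1.0) / t_next) * (f_next - f)   # Nesterov momentum
        f, t = f_next, t_next
    return f
```

In this sketch, the sign of each entry of the returned vector gives the predicted class of the corresponding point. Memory and per-iteration time grow linearly with the number of samples for a fixed number of landmarks, which is the property that makes low-rank propagation attractive at the scales the abstract describes.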
