Low-level Vision by Consensus in a Spatial Hierarchy of Regions

Paper and Code

Apr 14, 2015

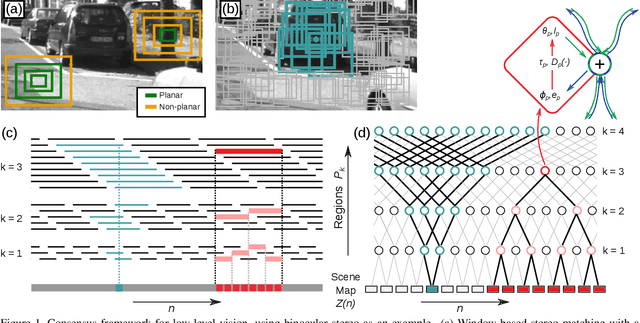

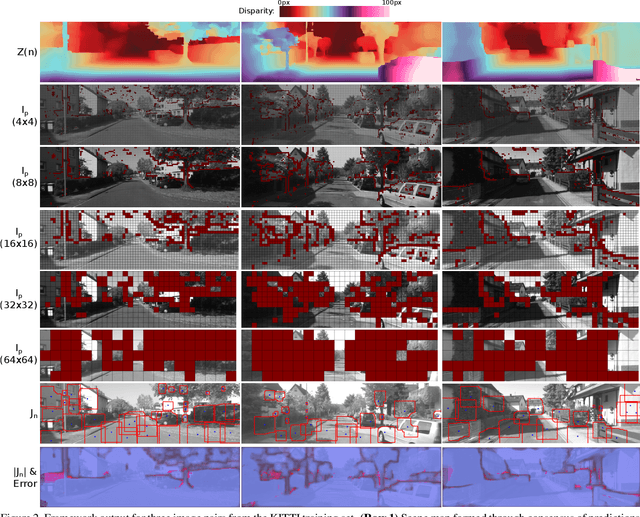

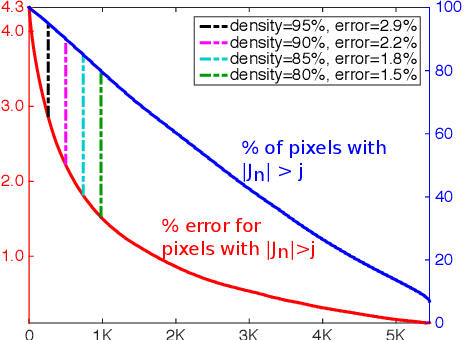

We introduce a multi-scale framework for low-level vision, where the goal is estimating physical scene values from image data---such as depth from stereo image pairs. The framework uses a dense, overlapping set of image regions at multiple scales and a "local model," such as a slanted-plane model for stereo disparity, that is expected to be valid piecewise across the visual field. Estimation is cast as optimization over a dichotomous mixture of variables, simultaneously determining which regions are inliers with respect to the local model (binary variables) and the correct co-ordinates in the local model space for each inlying region (continuous variables). When the regions are organized into a multi-scale hierarchy, optimization can occur in an efficient and parallel architecture, where distributed computational units iteratively perform calculations and share information through sparse connections between parents and children. The framework performs well on a standard benchmark for binocular stereo, and it produces a distributional scene representation that is appropriate for combining with higher-level reasoning and other low-level cues.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge