Low-Discrepancy Points via Energetic Variational Inference


Nov 21, 2021

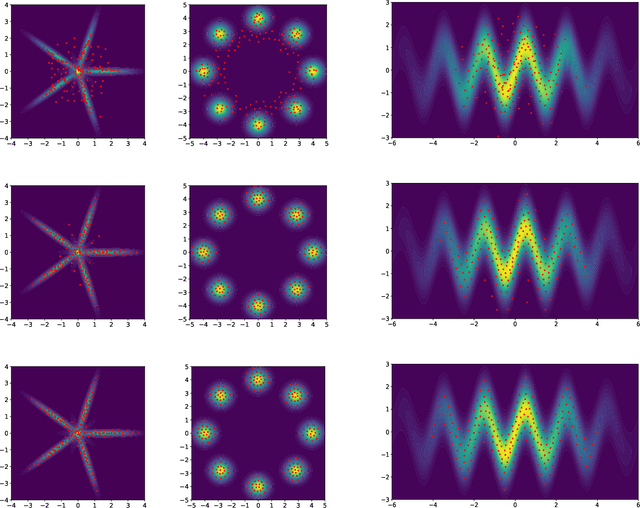

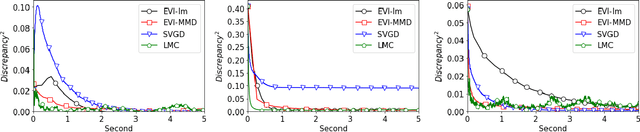

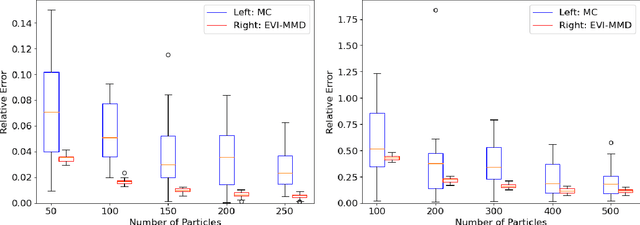

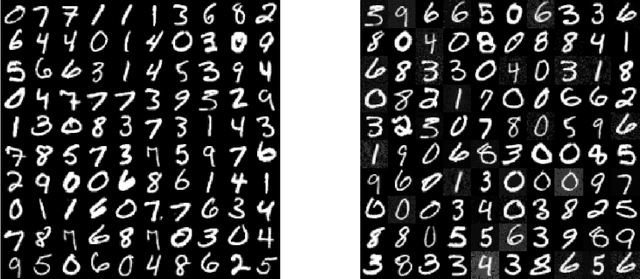

In this paper, we propose a deterministic variational inference approach that generates low-discrepancy points by minimizing the kernel discrepancy, also known as the Maximum Mean Discrepancy (MMD). Based on the general energetic variational inference (EVI) framework of Wang et al. (2021), the minimization of the kernel discrepancy is transformed into a dynamic ODE system, which is solved with the explicit Euler scheme. We name the resulting algorithm EVI-MMD and demonstrate it through examples in which the target distribution is fully specified, partially specified up to the normalizing constant, or known only empirically in the form of training data. Its performance is satisfactory compared to alternative methods in distribution approximation, numerical integration, and generative learning. The EVI-MMD algorithm overcomes the bottleneck of existing MMD-descent algorithms, which are mostly applicable to two-sample problems. Algorithms with more sophisticated structures and potential advantages can be developed under the EVI framework.
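To make the idea of "minimizing MMD by stepping an ODE with explicit Euler" concrete, here is a minimal sketch of the empirical (two-sample) case only. It is not the authors' EVI-MMD implementation: the Gaussian kernel, the bandwidth `h`, the step size `tau`, the rescaling of the gradient by the number of particles, and all function names (`gaussian_kernel`, `mmd2`, `mmd2_grad`, `mmd_descent`) are assumptions chosen for illustration. The particles `x` follow the flow dx_i/dt = -n ∂MMD²/∂x_i, discretized by explicit Euler.

```python
import numpy as np

def gaussian_kernel(x, y, h=1.0):
    """Gaussian kernel matrix k(x_i, y_j) for x of shape (n, d), y of shape (m, d)."""
    sq = np.sum((x[:, None, :] - y[None, :, :]) ** 2, axis=-1)
    return np.exp(-sq / (2.0 * h ** 2))

def mmd2(x, y, h=1.0):
    """Squared MMD (V-statistic) between particle set x and sample set y."""
    return (gaussian_kernel(x, x, h).mean()
            - 2.0 * gaussian_kernel(x, y, h).mean()
            + gaussian_kernel(y, y, h).mean())

def mmd2_grad(x, y, h=1.0):
    """Gradient of mmd2 with respect to each particle x_i, shape (n, d)."""
    n, m = len(x), len(y)
    kxx = gaussian_kernel(x, x, h)
    kxy = gaussian_kernel(x, y, h)
    dxx = x[:, None, :] - x[None, :, :]   # pairwise differences x_i - x_j
    dxy = x[:, None, :] - y[None, :, :]   # pairwise differences x_i - y_j
    # Particle-particle term: pushes particles apart (repulsion).
    g_xx = -(2.0 / n ** 2) * np.sum(kxx[:, :, None] * dxx, axis=1) / h ** 2
    # Particle-target term: pulls particles toward the target samples.
    g_xy = (2.0 / (n * m)) * np.sum(kxy[:, :, None] * dxy, axis=1) / h ** 2
    return g_xx + g_xy

def mmd_descent(x0, y, tau=0.1, steps=200, h=1.0):
    """Explicit Euler steps on dx/dt = -n * grad mmd2 (the scaling by n is an assumption)."""
    x = x0.copy()
    n = len(x)
    for _ in range(steps):
        x = x - tau * n * mmd2_grad(x, y, h)
    return x

# Usage: move 20 particles toward 200 samples from a standard 2-D normal.
rng = np.random.default_rng(0)
target = rng.normal(size=(200, 2))
init = 1.0 + 0.1 * rng.normal(size=(20, 2))
points = mmd_descent(init, target)
print("MMD^2 before:", mmd2(init, target), "after:", mmd2(points, target))
```

The sketch covers only the setting where the target is available as samples. The fully specified and unnormalized-density cases mentioned in the abstract need the paper's own treatment of the kernel expectations and are not attempted here.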
