Lossy Image Compression with Recurrent Neural Networks: from Human Perceived Visual Quality to Classification Accuracy


Oct 08, 2019

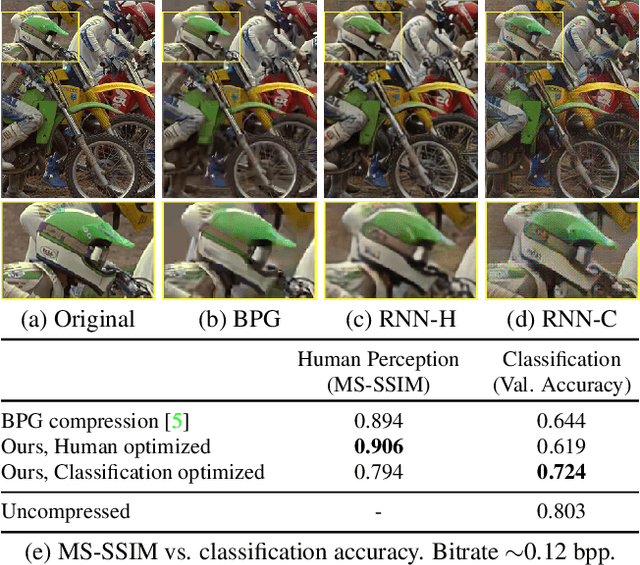

Deep neural networks have recently advanced the state of the art in image compression and surpassed many traditional compression algorithms. Training such networks involves carefully trading off the entropy of the latent representation against reconstruction quality. What counts as quality depends crucially on the observer of the images, which the vast majority of the literature assumes to be human. In this paper, we go beyond this notion of quality and consider human visual perception and machine perception simultaneously. To that end, we propose a family of loss functions that allows deep image compression to be optimized for a given observer and to interpolate between human-perceived visual quality and classification accuracy. Our experiments show that, when trained with the machine-friendly loss, the resulting compression systems preserve classification accuracy much better than the traditional codecs BPG, WebP and JPEG, without requiring fine-tuning of inference algorithms on decoded images and independently of the classifier architecture. At the same time, when trained with the human-friendly loss, they achieve competitive performance in terms of MS-SSIM.
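
The idea of interpolating between a human-oriented and a machine-oriented objective can be illustrated with a minimal sketch. The abstract does not give the exact form of the loss family, so everything below is an assumption made for illustration: the convex combination, the names `interpolated_loss`, `alpha` and `beta`, and the use of mean squared error as a stand-in for a perceptual distortion such as 1 - MS-SSIM.

```python
import math


def mse(x, y):
    """Mean squared error between two equally sized flat sequences.

    Stand-in for a human-oriented distortion (the abstract reports MS-SSIM;
    MSE is used here only to keep the sketch dependency-free).
    """
    return sum((a - b) ** 2 for a, b in zip(x, y)) / len(x)


def cross_entropy(probs, label):
    """Negative log-probability a classifier assigns to the true label.

    Stand-in for a machine-oriented distortion measured on the decoded image.
    """
    return -math.log(max(probs[label], 1e-12))


def interpolated_loss(rate, human_distortion, machine_distortion, alpha, beta=1.0):
    """Hypothetical rate-distortion objective with an observer weight.

    alpha = 0.0 uses only the human-oriented distortion,
    alpha = 1.0 uses only the machine-oriented distortion,
    beta weights the rate (entropy of the latent representation).
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")
    distortion = (1.0 - alpha) * human_distortion + alpha * machine_distortion
    return distortion + beta * rate


# Toy values: a 4-pixel "image", its reconstruction, and classifier
# probabilities computed on the reconstruction (all made up).
original = [0.0, 0.5, 1.0, 0.5]
decoded = [0.1, 0.5, 0.9, 0.5]
class_probs = [0.7, 0.2, 0.1]

d_human = mse(original, decoded)
d_machine = cross_entropy(class_probs, label=0)

for alpha in (0.0, 0.5, 1.0):
    print(alpha, interpolated_loss(0.25, d_human, d_machine, alpha))
```

In a real training setup both distortion terms and the rate would be differentiable functions of the network parameters; the sketch only shows how a single weight can move the objective between the two observers.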
