Look into the Future: Deep Contextualized Sequential Recommendation


May 23, 2024

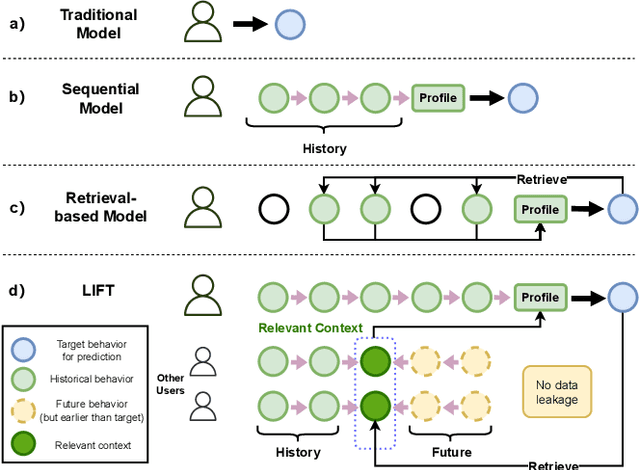

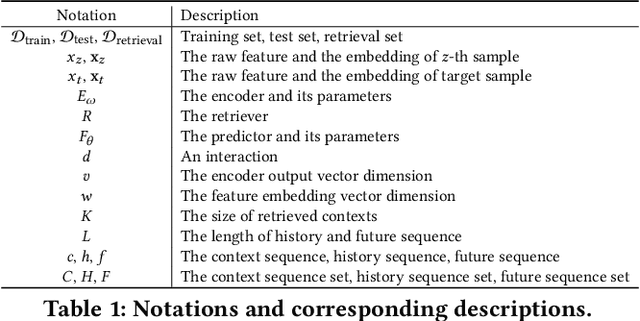

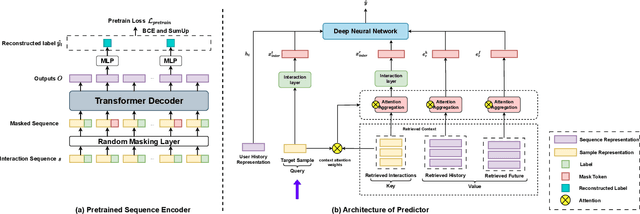

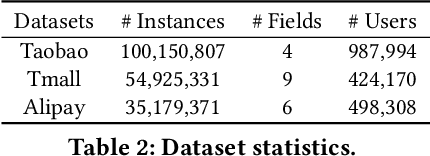

Sequential recommendation focuses on mining useful patterns from a user's behavior history to better estimate their preferences for candidate items. Previous solutions adopt recurrent networks or retrieval methods to obtain the user's profile representation and use it for preference estimation. In this paper, we propose a novel sequential recommendation framework called Look into the Future (LIFT), which builds and leverages the contexts of sequential recommendation. The context in LIFT refers to a user's current profile, represented using both past and future behaviors. As such, the learned context is more effective for predicting the user's behaviors in sequential recommendation. Because real future information cannot be used to predict the current behavior, we propose a novel retrieval-based framework that uses the future information of the most similar interaction as the future context of the target interaction, without data leakage. Furthermore, to exploit the intrinsic information embedded within the context itself, we introduce a pretraining methodology based on behavior masking, designed to facilitate the efficient acquisition of context representations. We demonstrate that finding relevant contexts from the global user pool via retrieval methods greatly improves preference estimation performance. In extensive experiments on real-world datasets, LIFT demonstrates significant performance improvements over strong baselines on click-through rate prediction tasks in sequential recommendation.
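
The retrieval idea in the abstract can be illustrated with a minimal sketch. This is not the LIFT implementation: the `Interaction` record, the cosine similarity over a hand-made feature vector, the fixed `window` size, and the function name `retrieve_future_context` are all assumptions made for illustration. The sketch only shows the general shape of the technique: find the most similar interaction in a global pool, and borrow the behaviors that followed it as a "future context", while requiring that all borrowed behaviors occurred strictly before the target interaction so that no real future information leaks in.

```python
from dataclasses import dataclass
from math import sqrt


@dataclass
class Interaction:
    # Hypothetical record layout, chosen for this illustration only.
    user: str
    item: str
    time: int
    vec: tuple  # assumed feature embedding of the interaction


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    na = sqrt(sum(x * x for x in a))
    nb = sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def retrieve_future_context(target, pool, window=2):
    """Return the items that followed the most similar eligible interaction.

    An interaction is eligible only if it has at least one following
    behavior and all of its (up to `window`) following behaviors happened
    strictly before the target, which is the leakage guard in this sketch.
    """
    # Group the pool into per-user sequences ordered by time.
    by_user = {}
    for it in sorted(pool, key=lambda i: i.time):
        by_user.setdefault(it.user, []).append(it)

    best_future, best_score = None, -1.0
    for seq in by_user.values():
        for idx, cand in enumerate(seq):
            future = seq[idx + 1: idx + 1 + window]
            if not future or future[-1].time >= target.time:
                continue  # no future available, or it would leak
            score = cosine(target.vec, cand.vec)
            if score > best_score:
                best_future, best_score = future, score
    return [f.item for f in best_future] if best_future else []
```

In a real system the exhaustive scan would presumably be replaced by a search index, and the retrieved behaviors would be encoded by a learned model rather than returned as raw item IDs; those parts are outside the scope of this sketch.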
