Logically-Constrained Neural Fitted Q-Iteration


Sep 20, 2018

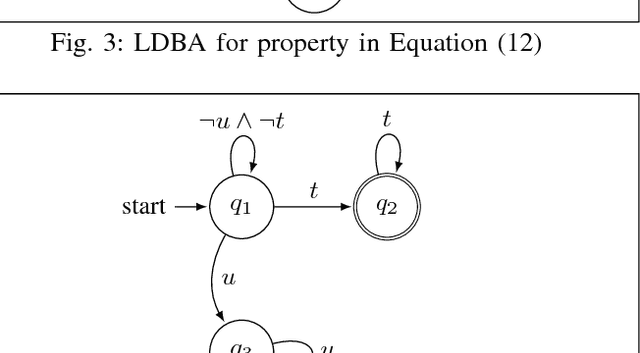

This paper proposes a method for efficiently training the Q-function of a continuous-state Markov Decision Process (MDP) such that the traces of the resulting policies satisfy a Linear Temporal Logic (LTL) property. The logical property is converted into a limit-deterministic Büchi automaton (LDBA), which is used to construct a product MDP. The control policy is then synthesized by a reinforcement learning algorithm that assumes no prior knowledge of the MDP. The proposed method is evaluated in a numerical study that tests the quality of the generated control policy, and it is compared against conventional methods for policy synthesis such as MDP abstraction (Voronoi quantizer) and approximate dynamic programming (fitted value iteration).
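To make the product construction concrete, the following is a minimal sketch of one step in a product MDP, written under several assumptions that are not taken from the paper. The automaton here is a small deterministic one for the property "eventually reach `goal`", whereas a general LDBA can also have nondeterministic transitions. The labelling function `label`, the toy dynamics `toy_mdp_step`, and the 0/1 reward on accepting states are all hypothetical illustrations, not the authors' implementation.

```python
from typing import Callable, FrozenSet, Tuple

State = Tuple[float, float]   # continuous MDP state (x, y)
Action = Tuple[float, float]  # displacement (dx, dy)
Label = FrozenSet[str]        # set of atomic propositions true in a state

ACCEPTING = {1}  # automaton state 1 is accepting (assumption for this sketch)


def label(s: State) -> Label:
    """Hypothetical labelling: 'goal' holds in the upper-right corner of the unit square."""
    x, y = s
    return frozenset({"goal"}) if x >= 0.8 and y >= 0.8 else frozenset()


def delta(q: int, l: Label) -> int:
    """Automaton transition for 'eventually goal': 0 --goal--> 1, and 1 is absorbing."""
    if q == 0 and "goal" in l:
        return 1
    return q


def toy_mdp_step(s: State, a: Action) -> State:
    """Hypothetical deterministic dynamics: move by a, clipped to the unit square."""
    x, y = s
    dx, dy = a
    return (min(1.0, max(0.0, x + dx)), min(1.0, max(0.0, y + dy)))


def product_step(
    s: State, q: int, a: Action, mdp_step: Callable[[State, Action], State]
) -> Tuple[State, int, float]:
    """One step in the product MDP: the MDP state and the automaton state advance together."""
    s_next = mdp_step(s, a)
    q_next = delta(q, label(s_next))
    # Assumed reward scheme: reward 1 whenever the automaton is in an accepting state.
    reward = 1.0 if q_next in ACCEPTING else 0.0
    return s_next, q_next, reward


if __name__ == "__main__":
    print(product_step((0.5, 0.5), 0, (0.4, 0.4), toy_mdp_step))
```

A learner operating on the product sees the pair `(s, q)` as its state, so a Q-function approximator can be trained on transitions `(s, q, a, reward, s_next, q_next)` without access to the MDP's transition model.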
