Locally Adaptive Transfer Learning Algorithms for Large-Scale Multiple Testing

Paper and Code

Mar 25, 2022

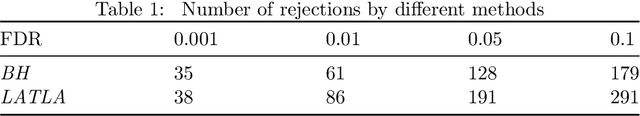

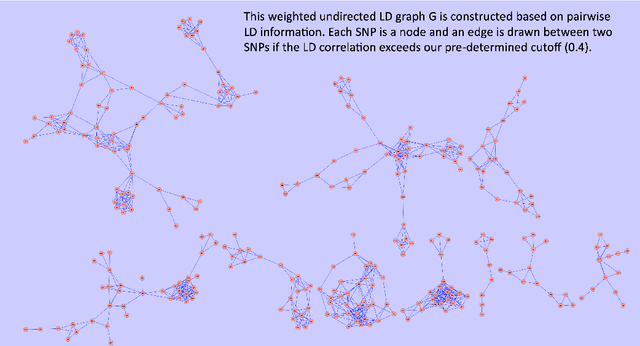

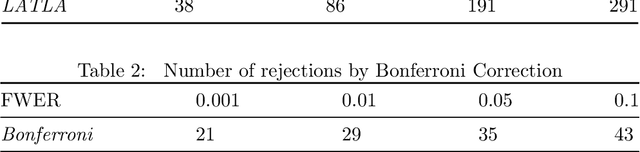

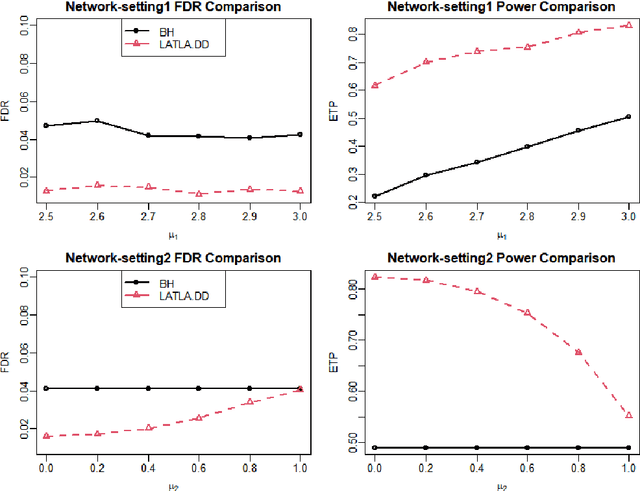

Transfer learning has enjoyed increasing popularity in a range of big data applications. In the context of large-scale multiple testing, the goal is to extract and transfer knowledge learned from related source domains to improve the accuracy of simultaneously testing a large number of hypotheses in the target domain. This article develops a locally adaptive transfer learning algorithm (LATLA) for multiple testing. In contrast with existing covariate-assisted multiple testing methods, which require the auxiliary covariates to be collected alongside the primary data on the same testing units, LATLA provides a principled and generic transfer learning framework that is capable of incorporating multiple samples of auxiliary data from related source domains, possibly in different dimensions/structures and from diverse populations. Both the theoretical and numerical results show that LATLA controls the false discovery rate and outperforms existing methods in power. LATLA is illustrated through an application to genome-wide association studies for the identification of disease-associated SNPs by cross-utilizing the auxiliary data from a related linkage analysis.
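The abstract does not spell out LATLA's procedure, so the sketch below is not the authors' algorithm. It only illustrates one generic way that side information can enter a false discovery rate procedure: a weighted Benjamini-Hochberg step in which each p-value is divided by a weight before the usual step-up rule is applied. The function name `weighted_bh`, the `weights` argument, and the idea that the weights come from auxiliary data are all assumptions made for illustration; how such weights would be learned from source domains is left open here.

```python
import numpy as np


def weighted_bh(pvals, weights, alpha=0.05):
    """Weighted Benjamini-Hochberg step-up procedure (illustrative sketch).

    pvals   : p-values for the target-domain hypotheses
    weights : positive weights, one per hypothesis (hypothetical input,
              e.g. derived from auxiliary data); rescaled to average 1
    alpha   : nominal FDR level
    Returns a boolean array marking the rejected hypotheses.
    """
    p = np.asarray(pvals, dtype=float)
    w = np.asarray(weights, dtype=float)
    if p.shape != w.shape:
        raise ValueError("pvals and weights must have the same shape")
    if np.any(w <= 0):
        raise ValueError("weights must be strictly positive")

    # Rescale so the weights average to 1, as in standard weighted BH.
    w = w / w.mean()

    # Weighted p-values: a larger weight makes a hypothesis easier to reject.
    q = np.minimum(p / w, 1.0)

    m = q.size
    order = np.argsort(q)
    thresholds = alpha * np.arange(1, m + 1) / m

    # Step-up rule: find the largest rank k with q_(k) <= alpha * k / m.
    below = q[order] <= thresholds
    reject = np.zeros(m, dtype=bool)
    if below.any():
        k = np.max(np.nonzero(below)[0])
        reject[order[: k + 1]] = True
    return reject


if __name__ == "__main__":
    pvals = [0.001, 0.02, 0.04, 0.5]
    # Equal weights reduce to the ordinary BH procedure.
    print(weighted_bh(pvals, [1, 1, 1, 1]))
    # Upweighting the third hypothesis changes which tests are rejected.
    print(weighted_bh(pvals, [0.5, 0.5, 2.5, 0.5]))
```

With equal weights this reduces to the ordinary Benjamini-Hochberg procedure, which makes it a convenient baseline when comparing against any method that exploits auxiliary data.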
