Lifelong Object Detection


Sep 02, 2020

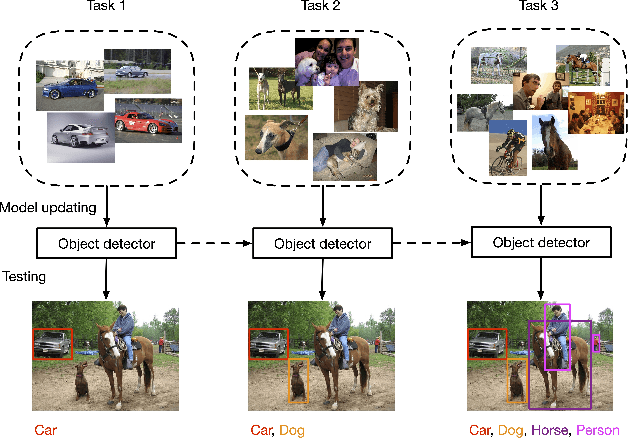

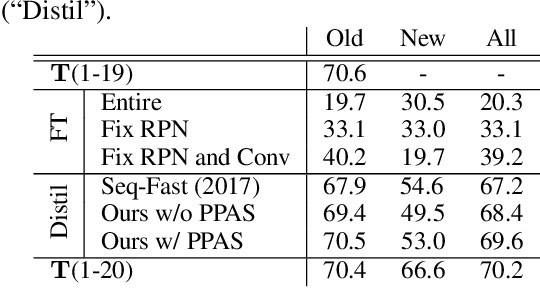

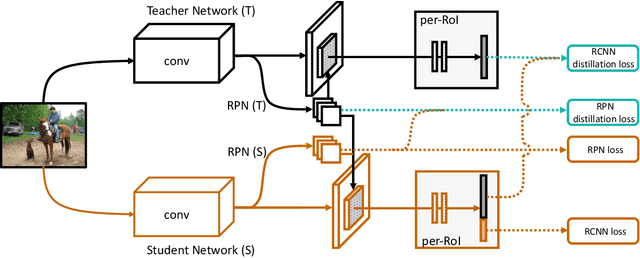

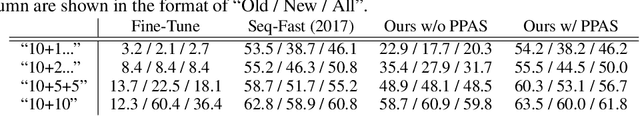

Recent advances in object detection have benefited significantly from rapid developments in deep neural networks. However, neural networks suffer from the well-known issue of catastrophic forgetting, which makes continual or lifelong learning problematic. In this paper, we leverage the fact that new training classes arrive sequentially and incrementally refine the model so that it additionally detects new object classes in the absence of previous training data. Specifically, we consider the representative object detector, Faster R-CNN, for both accurate and efficient prediction. To prevent abrupt performance degradation due to catastrophic forgetting, we propose to apply knowledge distillation on both the region proposal network and the region classification network, to retain the detection of previously trained classes. A pseudo-positive-aware sampling strategy is also introduced for distillation sample selection. We evaluate the proposed method on the PASCAL VOC 2007 and MS COCO benchmarks and show competitive mAP and a 6x inference speed improvement, which makes the approach more suitable for real-time applications. Our implementation will be publicly available.
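The abstract does not spell out the form of the distillation loss, so the following is only a minimal sketch of the general idea under stated assumptions: a frozen copy of the previous model provides target outputs, and the model being trained is penalized for drifting from them on the classes the previous model already knew. The function names (`smooth_l1`, `distillation_loss`), the squared-error term on logits, and the smooth L1 term on box offsets are illustrative choices, not the authors' formulation.

```python
from typing import Sequence


def smooth_l1(x: float, beta: float = 1.0) -> float:
    """Smooth L1 penalty of a scalar difference (quadratic near zero)."""
    ax = abs(x)
    return 0.5 * ax * ax / beta if ax < beta else ax - 0.5 * beta


def distillation_loss(
    old_logits: Sequence[Sequence[float]],
    new_logits: Sequence[Sequence[float]],
    old_boxes: Sequence[Sequence[float]],
    new_boxes: Sequence[Sequence[float]],
) -> float:
    """Hypothetical distillation term for one detector head.

    old_logits / old_boxes: outputs of the frozen previous model.
    new_logits / new_boxes: outputs of the model being trained; its logits
    may carry extra trailing columns for newly added classes.
    Only the columns known to the previous model are compared.
    """
    n = len(old_logits)
    if n == 0:
        return 0.0
    num_old = len(old_logits[0])
    total = 0.0
    for i in range(n):
        # Mean squared logit difference over previously learned classes only.
        cls_term = sum(
            (new_logits[i][c] - old_logits[i][c]) ** 2 for c in range(num_old)
        ) / num_old
        # Smooth L1 difference between box regression outputs.
        box_term = sum(
            smooth_l1(nb - ob) for nb, ob in zip(new_boxes[i], old_boxes[i])
        )
        total += cls_term + box_term
    return total / n
```

In a setup like the one the abstract describes, a term of this kind would be computed once for the region proposal network and once for the region classification network, then added to the usual detection loss on the new classes. How the samples fed into it are selected (the pseudo-positive-aware strategy) is not covered by this sketch.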
