Learning to Grasp 3D Objects using Deep Residual U-Nets

Feb 10, 2020

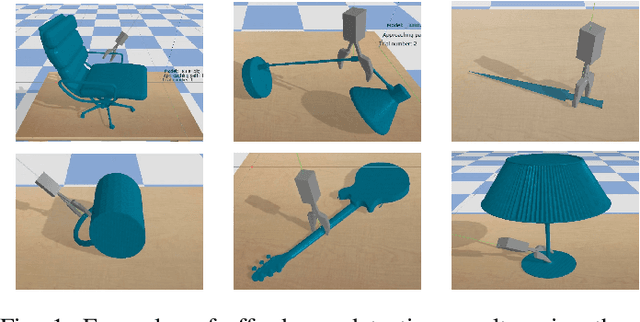

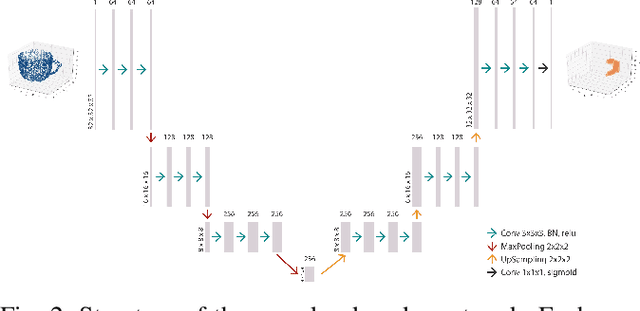

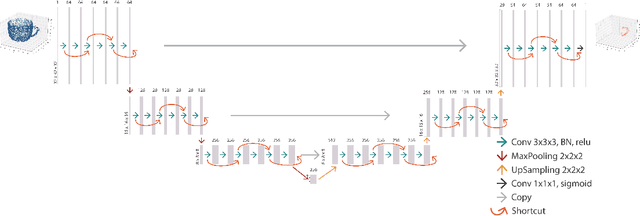

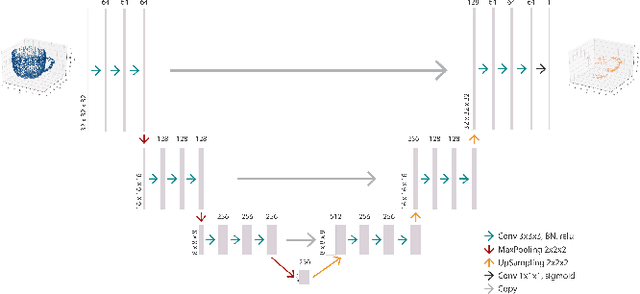

Affordance detection is a challenging task in robotics: the robot must predict a grasp configuration for the object of interest in real time in order to interact with its environment. In this paper, we present a new deep learning approach to detect object affordances for a given 3D object. The method trains a Convolutional Neural Network (CNN) to learn a set of grasping features from RGB-D images. We name our approach *Res-U-Net* because the network architecture is based on the U-Net structure and residual network-style blocks. It is designed to be robust and efficient to compute and use. A set of experiments was performed to assess the grasp success rate of the proposed approach in simulated robotic scenarios. The results show promising performance on a subset of the ShapeNetCore dataset and in simulated robot scenarios.
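To make the two architectural ideas named in the abstract concrete, the following is a minimal NumPy sketch of a U-Net-shaped forward pass built from residual blocks. It is not the authors' implementation. The layer count, channel width, random weights, the 4-channel RGB-D input layout, the single-channel output map, and every function name (`conv3x3`, `residual_block`, `tiny_res_unet`, `init_params`) are assumptions chosen only for illustration.

```python
import numpy as np


def conv3x3(x, w):
    """Same-padded 3x3 convolution. x: (C_in, H, W), w: (C_out, C_in, 3, 3)."""
    _, h, wd = x.shape
    xp = np.pad(x, ((0, 0), (1, 1), (1, 1)))
    out = np.zeros((w.shape[0], h, wd))
    for dy in range(3):
        for dx in range(3):
            patch = xp[:, dy:dy + h, dx:dx + wd]  # shifted view, (C_in, H, W)
            out += np.einsum("oc,chw->ohw", w[:, :, dy, dx], patch)
    return out


def relu(x):
    return np.maximum(x, 0.0)


def residual_block(x, w1, w2):
    """Residual-style block: the input is added back to the conv branch."""
    return relu(x + conv3x3(relu(conv3x3(x, w1)), w2))


def downsample(x):
    """2x2 max pooling (H and W must be even)."""
    c, h, w = x.shape
    return x.reshape(c, h // 2, 2, w // 2, 2).max(axis=(2, 4))


def upsample(x):
    """Nearest-neighbour 2x upsampling."""
    return x.repeat(2, axis=1).repeat(2, axis=2)


def init_params(in_ch=4, width=8, out_ch=1, seed=0):
    """Random weights for the sketch; all sizes here are illustrative assumptions."""
    rng = np.random.default_rng(seed)

    def w(o, i):
        return rng.normal(0.0, 0.1, size=(o, i, 3, 3))

    return {
        "stem": w(width, in_ch),
        "e1": w(width, width), "e2": w(width, width),
        "m1": w(width, width), "m2": w(width, width),
        "fuse": w(width, 2 * width),
        "d1": w(width, width), "d2": w(width, width),
        "head": w(out_ch, width),
    }


def tiny_res_unet(x, p):
    """One encoder level, one bottleneck, one decoder level."""
    enc = residual_block(conv3x3(x, p["stem"]), p["e1"], p["e2"])
    mid = residual_block(downsample(enc), p["m1"], p["m2"])
    # U-Net skip connection: concatenate encoder features with upsampled ones.
    dec_in = np.concatenate([upsample(mid), enc], axis=0)
    dec = residual_block(conv3x3(dec_in, p["fuse"]), p["d1"], p["d2"])
    return conv3x3(dec, p["head"])  # hypothetical per-pixel output map


rgbd = np.random.default_rng(1).normal(size=(4, 16, 16))  # stand-in RGB-D image
out = tiny_res_unet(rgbd, init_params())
print(out.shape)
```

The sketch keeps the two kinds of shortcut separate: the addition inside `residual_block` is the residual connection, while the concatenation in `tiny_res_unet` is the U-Net skip that carries full-resolution encoder features to the decoder. How the actual network combines them, and what its output represents, is specified in the paper rather than here.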
