Learning to Adapt Classifier for Imbalanced Semi-supervised Learning

Jul 28, 2022

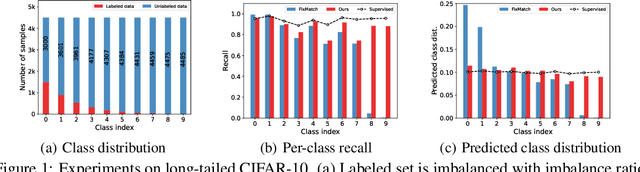

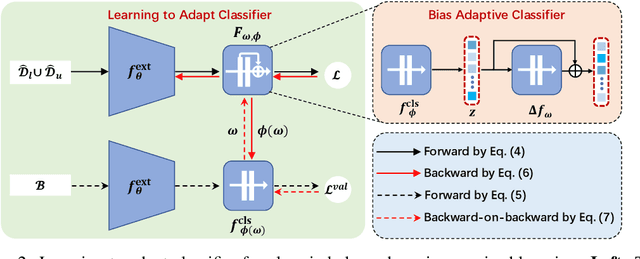

Pseudo-labeling has proven to be a promising semi-supervised learning (SSL) paradigm. Existing pseudo-labeling methods commonly assume that the class distribution of the training data is balanced. However, this assumption rarely holds in realistic scenarios, and existing pseudo-labeling methods suffer severe performance degradation under class imbalance. In this work, we investigate pseudo-labeling under imbalanced semi-supervised setups. The core idea is to automatically assimilate the training bias arising from class imbalance, using a bias adaptive classifier that equips the original linear classifier with a bias attractor. The bias attractor is designed as a lightweight residual network that adapts to the training bias. Specifically, the bias attractor is learned through a bi-level learning framework such that the bias adaptive classifier can fit the imbalanced training data, while the linear classifier gives unbiased label predictions for each class. We conduct extensive experiments under various imbalanced semi-supervised setups, and the results demonstrate that our method is applicable to different pseudo-labeling models and outperforms prior art.
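The classifier structure described in the abstract can be illustrated with a minimal sketch in plain Python. This is not the authors' implementation. The class name `BiasAdaptiveClassifier`, the two-layer attractor, the hidden size, and the choice to apply the residual branch to the linear classifier's logits are all assumptions made for illustration. The bi-level training procedure is not shown.

```python
import random


def linear(weights, bias, x):
    """Affine map: one output per weight row."""
    return [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weights, bias)]


def relu(v):
    return [max(0.0, a) for a in v]


class BiasAdaptiveClassifier:
    """Hypothetical sketch: a linear classifier plus a residual 'bias attractor'."""

    def __init__(self, feat_dim, num_classes, hidden_dim=4, seed=0):
        rng = random.Random(seed)

        def init(rows, cols):
            return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]

        # Original linear classifier (meant to stay unbiased, used at test time).
        self.W = init(num_classes, feat_dim)
        self.b = [0.0] * num_classes
        # Bias attractor: a small two-layer network (sizes are assumptions).
        self.A1 = init(hidden_dim, num_classes)
        self.a1 = [0.0] * hidden_dim
        self.A2 = init(num_classes, hidden_dim)
        self.a2 = [0.0] * num_classes

    def linear_logits(self, feat):
        """Logits from the linear classifier alone."""
        return linear(self.W, self.b, feat)

    def adaptive_logits(self, feat):
        """Linear logits plus the attractor's residual correction."""
        z = self.linear_logits(feat)
        delta = linear(self.A2, self.a2, relu(linear(self.A1, self.a1, z)))
        return [zi + di for zi, di in zip(z, delta)]


clf = BiasAdaptiveClassifier(feat_dim=3, num_classes=2)
feat = [1.0, 2.0, 3.0]
train_logits = clf.adaptive_logits(feat)  # would fit the imbalanced training data
test_logits = clf.linear_logits(feat)     # would give the final label prediction
```

In this sketch the residual branch absorbs the correction needed to fit the imbalanced training labels, so the linear branch can be used on its own for prediction, mirroring the split of roles the abstract describes.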
