Learning Through Retrospection: Improving Trajectory Prediction for Automated Driving with Error Feedback


Apr 18, 2025

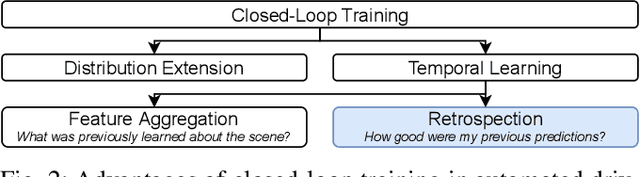

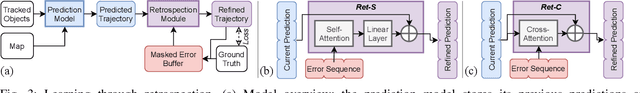

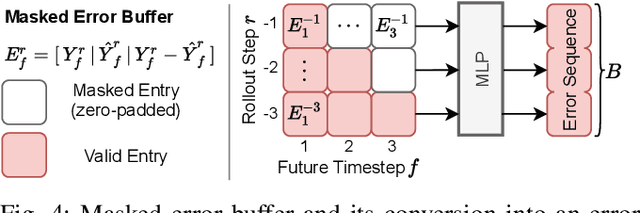

In automated driving, predicting the trajectories of surrounding vehicles supports reasoning about scene dynamics and enables safe planning for the ego vehicle. However, existing models treat prediction as an instantaneous task: forecasting future trajectories from the information observed so far. As time proceeds, each new prediction is made independently of the previous one, so the model cannot correct its errors during inference and will repeat them. To alleviate this problem and better leverage temporal data, we propose a novel retrospection technique. Through training on closed-loop rollouts, the model learns to use aggregated feedback. Given new observations, it reflects on its previous predictions and analyzes their errors to improve the quality of subsequent predictions. Thus, the model can learn to correct systematic errors during inference. Comprehensive experiments on nuScenes and Argoverse demonstrate a decrease in minimum Average Displacement Error of up to 31.9% compared to the state-of-the-art baseline without retrospection. We further showcase the robustness of our technique by demonstrating better handling of out-of-distribution scenarios with undetected road users.
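
To make the reported metric and the idea of error feedback concrete, the following is a minimal sketch and not the authors' implementation. It assumes trajectories are lists of 2D `(x, y)` waypoints. The function names `ade`, `min_ade`, and `retrospection_errors` are hypothetical, and representing feedback as per-step offsets between an earlier prediction and later observations is an assumption made for illustration; the abstract does not specify how the model encodes its feedback.

```python
import math  # math.dist requires Python 3.8+


def ade(pred, truth):
    """Average Euclidean distance between matching waypoints of two trajectories."""
    if not pred or len(pred) != len(truth):
        raise ValueError("trajectories must be non-empty and equally long")
    return sum(math.dist(p, t) for p, t in zip(pred, truth)) / len(pred)


def min_ade(modes, truth):
    """Minimum ADE: the ADE of the best of several predicted modes."""
    return min(ade(mode, truth) for mode in modes)


def retrospection_errors(prev_pred, new_obs):
    """Per-step (dx, dy) offsets between an earlier prediction and the
    positions observed since it was made (assumed feedback format)."""
    # zip stops at the shorter sequence, so only the already-observed
    # part of the earlier prediction is compared.
    return [(o[0] - p[0], o[1] - p[1]) for p, o in zip(prev_pred, new_obs)]


# Ground-truth future of one vehicle and two predicted modes.
truth = [(1.0, 0.0), (2.0, 0.0), (3.0, 0.0)]
modes = [
    [(1.0, 1.0), (2.0, 1.0), (3.0, 1.0)],  # constant 1 m lateral offset
    [(1.0, 0.0), (2.0, 0.0), (3.0, 0.3)],  # drifts only at the last step
]
best = min_ade(modes, truth)

# Two time steps later, the first two waypoints of mode 0 can be checked.
feedback = retrospection_errors(modes[0], truth[:2])
```

Here `best` is the ADE of the second mode, and `feedback` shows the same lateral offset at both observed steps. A consistent offset of this kind is the sort of systematic error that a model with retrospection could, in principle, learn to correct in its next prediction.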
