Learning the Update Operator for 2D/3D Image Registration


Feb 04, 2021

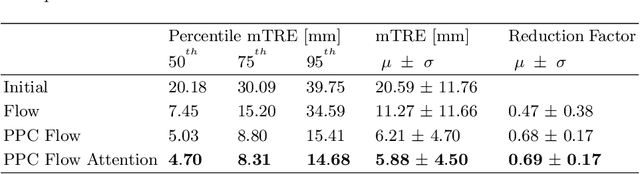

Image guidance in minimally invasive interventions is usually provided by live 2D X-ray imaging. To enhance the information available during the intervention, the preoperative volume can be overlaid on the 2D images using 2D/3D image registration. Recently, deep learning-based 2D/3D registration methods have shown promising results by improving computational efficiency and robustness. However, a gap in registration accuracy remains compared to traditional optimization-based methods. We aim to close this gap by incorporating traditional methods into deep neural networks using known operator learning. As an initial step in this direction, we propose to learn the update step of an iterative 2D/3D registration framework based on the Point-to-Plane Correspondence model, which we embed as a known operator in our deep neural network. For the update step prediction, this yields a 1.8-fold improvement in registration accuracy compared to learning without the known operator.
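
To make the known-operator idea more concrete, the following is a minimal sketch rather than the method from the paper. It assumes the fixed operator can be written as a weighted linear least-squares solve for a 6-parameter rigid update. The constraint matrix `A`, right-hand side `b`, function name `solve_update`, and the weights are all hypothetical and synthetic; in particular, the hand-set weights only stand in for whatever a learned part of a network would supply, and the real Point-to-Plane Correspondence constraints are not constructed here.

```python
import numpy as np

def solve_update(A, b, w):
    """Fixed (non-learned) operator: weighted least-squares solve.

    A: (n, 6) linear constraints on the update (synthetic here),
    b: (n,) right-hand side, w: (n,) non-negative per-constraint weights.
    """
    sw = np.sqrt(w)
    dv, *_ = np.linalg.lstsq(sw[:, None] * A, sw * b, rcond=None)
    return dv

rng = np.random.default_rng(0)
n = 200

# Synthetic constraints and a hypothetical ground-truth update
# (3 rotation-like and 3 translation-like parameters).
A = rng.normal(size=(n, 6))
dv_true = np.array([0.01, -0.02, 0.005, 1.0, -0.5, 2.0])
b = A @ dv_true

# Corrupt a subset of constraints to mimic wrong correspondences.
outliers = rng.choice(n, size=20, replace=False)
b_noisy = b.copy()
b_noisy[outliers] += rng.normal(scale=5.0, size=outliers.size)

# Uniform weights versus weights that suppress the corrupted constraints.
# The second set is an assumption standing in for a learned component.
w_uniform = np.ones(n)
w_informed = np.ones(n)
w_informed[outliers] = 0.0

err_uniform = np.linalg.norm(solve_update(A, b_noisy, w_uniform) - dv_true)
err_informed = np.linalg.norm(solve_update(A, b_noisy, w_informed) - dv_true)
print(f"error with uniform weights:  {err_uniform:.4f}")
print(f"error with informed weights: {err_informed:.2e}")
```

The point of the sketch is only the division of labour: the solver stays fixed and encodes prior knowledge, while the quantities fed into it are the part that could be learned.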
