Learning Robust Feedback Policies from Demonstrations

Mar 30, 2021

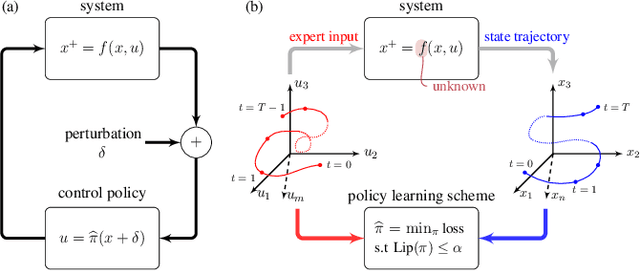

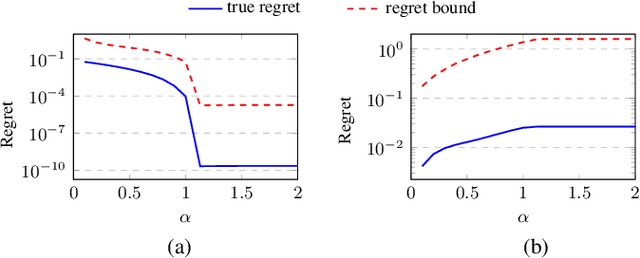

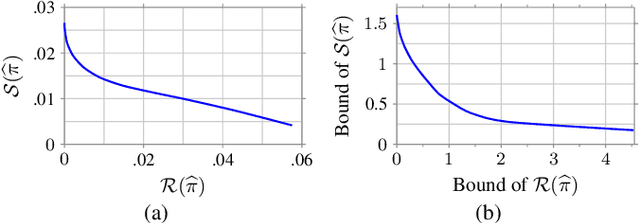

In this work, we propose and analyze a new framework for learning feedback control policies with provable guarantees on closed-loop performance and robustness to bounded (adversarial) perturbations. These policies are learned from expert demonstrations without any prior knowledge of the task, its cost function, or the system dynamics. In contrast to existing algorithms in imitation learning and inverse reinforcement learning, we use a Lipschitz-constrained loss minimization scheme to learn control policies with certified robustness. We establish robust stability of the closed-loop system under the learned control policy and derive an upper bound on its regret, which bounds the sub-optimality of the closed-loop performance with respect to the expert policy. We also derive a robustness bound on the deterioration of the closed-loop performance under bounded (adversarial) perturbations of the state measurements. Ultimately, our results suggest an underlying tradeoff between nominal closed-loop performance and adversarial robustness: improvements in nominal closed-loop performance can only be made at the expense of robustness to adversarial perturbations. Numerical results validate our analysis and demonstrate the effectiveness of our robust feedback policy learning framework.
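To make the idea of Lipschitz-constrained loss minimization concrete, the following is a minimal sketch under strong simplifying assumptions, and not the paper's algorithm. It assumes a linear policy u = Kx, whose Lipschitz constant is the spectral norm of K, a mean squared imitation loss, and projected gradient descent with singular-value clipping as the projection. The function names, the synthetic expert gain `K_expert`, and the bounds 1.0 and 10.0 are all hypothetical choices made for illustration.

```python
import numpy as np

def project_spectral_norm(K, lipschitz_bound):
    """Project K onto {K : ||K||_2 <= lipschitz_bound} by clipping singular values."""
    U, s, Vt = np.linalg.svd(K, full_matrices=False)
    return (U * np.minimum(s, lipschitz_bound)) @ Vt

def imitation_loss(K, X, U_expert):
    """Mean squared error between the policy outputs K x and the expert controls."""
    return np.mean(np.sum((X @ K.T - U_expert) ** 2, axis=1))

def fit_lipschitz_linear_policy(X, U_expert, lipschitz_bound, steps=2000):
    """Fit u = K x to demonstrations (X, U_expert) subject to ||K||_2 <= lipschitz_bound.

    X has shape (N, n) (states); U_expert has shape (N, m) (expert controls).
    Assumes X is not identically zero.
    """
    N, n = X.shape
    m = U_expert.shape[1]
    K = np.zeros((m, n))
    # Step size 1/L, where L is the smoothness constant of the mean squared loss.
    smoothness = 2.0 * np.linalg.norm(X, 2) ** 2 / N
    step = 1.0 / smoothness
    for _ in range(steps):
        residual = X @ K.T - U_expert        # shape (N, m)
        grad = 2.0 * residual.T @ X / N      # shape (m, n)
        K = project_spectral_norm(K - step * grad, lipschitz_bound)
    return K

# Synthetic demonstrations from a hypothetical expert gain with ||K_expert||_2 = sqrt(5).
rng = np.random.default_rng(0)
K_expert = np.array([[-1.0, -2.0]])
X = rng.normal(size=(200, 2))
U_expert = X @ K_expert.T

K_tight = fit_lipschitz_linear_policy(X, U_expert, lipschitz_bound=1.0)
K_loose = fit_lipschitz_linear_policy(X, U_expert, lipschitz_bound=10.0)

print("tight bound: norm", np.linalg.norm(K_tight, 2),
      "loss", imitation_loss(K_tight, X, U_expert))
print("loose bound: norm", np.linalg.norm(K_loose, 2),
      "loss", imitation_loss(K_loose, X, U_expert))
```

In this toy setting, the tighter bound limits how strongly the policy can react to a perturbed state measurement, but it also prevents the policy from matching the expert exactly, so its imitation loss is higher. This mirrors, in a very simplified form, the tradeoff between nominal performance and robustness described in the abstract.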
