Learning Pose Estimation for UAV Autonomous Navigation and Landing Using Visual-Inertial Sensor Data


Dec 10, 2019

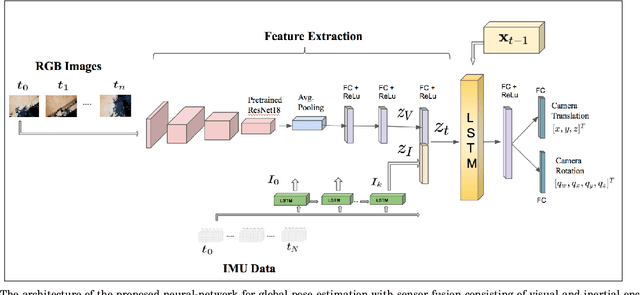

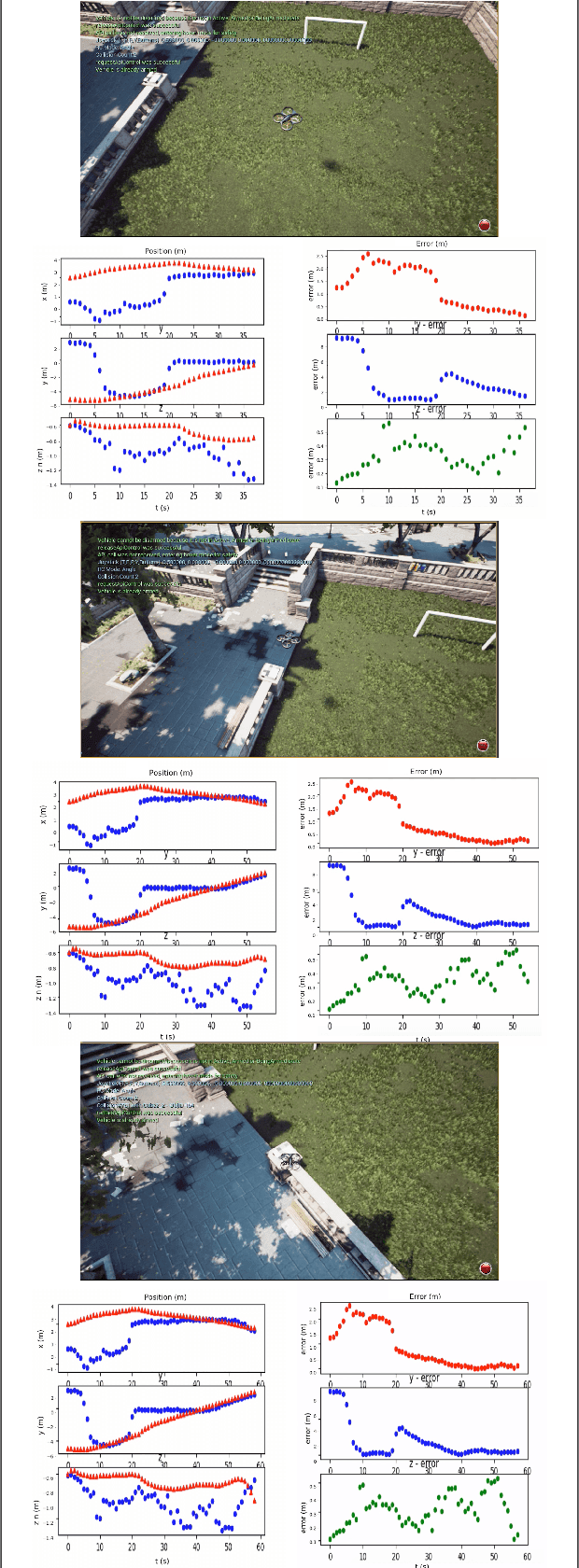

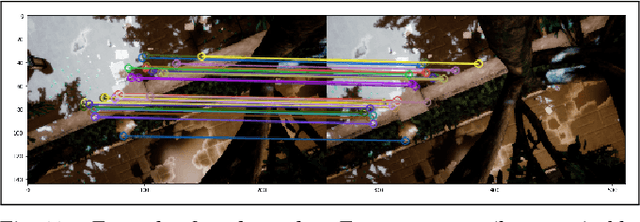

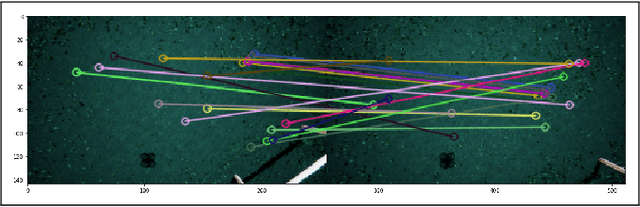

In this work, we propose a robust network-in-the-loop control system that allows an Unmanned Aerial Vehicle (UAV) to navigate and land autonomously on a desired target. To estimate the global pose of the aerial vehicle, we develop a deep neural network architecture for visual-inertial odometry, which provides a robust alternative to traditional techniques for autonomous UAV navigation. First, we evaluate the accuracy of the estimation by comparing the predictions of our model with those of traditional visual-inertial approaches on the publicly available EuRoC MAV dataset. The results indicate a clear improvement of up to 25% in pose-estimation accuracy over the baseline. Second, we use AirSim, a simulator available as a plugin for Unreal Engine, to create new datasets of photorealistic images and inertial measurements to train and test our model. Finally, we integrate the proposed global-localization architecture with the AirSim closed-loop control system and provide simulation results for the autonomous landing of the aerial vehicle.
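The abstract reports an accuracy improvement of up to 25% over the baseline but does not name the error metric. As an illustration only, the sketch below assumes a translational root-mean-square error over time-aligned positions expressed in the same frame. This is a common way to score trajectories, but it is not confirmed as the paper's metric. The sketch then computes the relative error reduction of a model with respect to a baseline. The function names `translation_rmse` and `relative_improvement` are hypothetical.

```python
import math
from typing import Sequence, Tuple

# A position sample (x, y, z) in meters.
Point = Tuple[float, float, float]


def translation_rmse(pred: Sequence[Point], gt: Sequence[Point]) -> float:
    """Root-mean-square Euclidean position error.

    Assumes (hypothetically) that both trajectories are already time-aligned
    sample by sample and expressed in the same reference frame.
    """
    if len(pred) != len(gt) or len(pred) == 0:
        raise ValueError("expected two non-empty trajectories of equal length")
    # Squared Euclidean distance between each predicted and ground-truth position.
    sq_errors = [
        sum((p - g) ** 2 for p, g in zip(p_pt, g_pt))
        for p_pt, g_pt in zip(pred, gt)
    ]
    return math.sqrt(sum(sq_errors) / len(sq_errors))


def relative_improvement(model_err: float, baseline_err: float) -> float:
    """Fractional error reduction of the model relative to the baseline."""
    if baseline_err <= 0:
        raise ValueError("baseline error must be positive")
    return (baseline_err - model_err) / baseline_err


if __name__ == "__main__":
    # Toy trajectories (made up for illustration, not data from the paper).
    gt = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (2.0, 0.0, 0.0)]
    baseline = [(x + 0.4, y, z) for x, y, z in gt]
    model = [(x + 0.3, y, z) for x, y, z in gt]
    b_err = translation_rmse(baseline, gt)
    m_err = translation_rmse(model, gt)
    print(f"baseline RMSE={b_err:.3f} m, model RMSE={m_err:.3f} m, "
          f"improvement={relative_improvement(m_err, b_err):.0%}")
```

In this toy example, a constant 0.4 m baseline offset reduced to 0.3 m corresponds to a 25% error reduction. This shows only how such a percentage is formed, not how the paper obtained its figure.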
