Learning Natural Language Generation from Scratch

Paper and Code

Sep 20, 2021

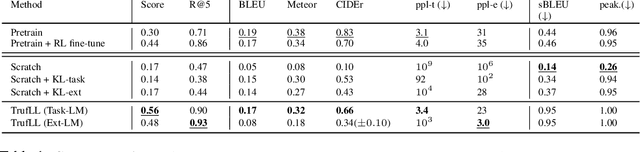

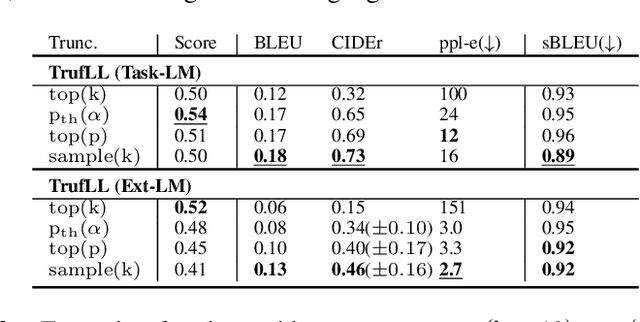

This paper introduces TRUncated ReinForcement Learning for Language (TrufLL), an original ap-proach to train conditional language models from scratch by only using reinforcement learning (RL). AsRL methods unsuccessfully scale to large action spaces, we dynamically truncate the vocabulary spaceusing a generic language model. TrufLL thus enables to train a language agent by solely interacting withits environment without any task-specific prior knowledge; it is only guided with a task-agnostic languagemodel. Interestingly, this approach avoids the dependency to labelled datasets and inherently reduces pre-trained policy flaws such as language or exposure biases. We evaluate TrufLL on two visual questiongeneration tasks, for which we report positive results over performance and language metrics, which wethen corroborate with a human evaluation. To our knowledge, it is the first approach that successfullylearns a language generation policy (almost) from scratch.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge