Learning Long Range Dependencies on Graphs via Random Walks

Paper and Code

Jun 05, 2024

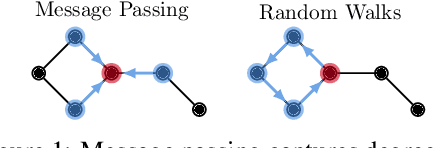

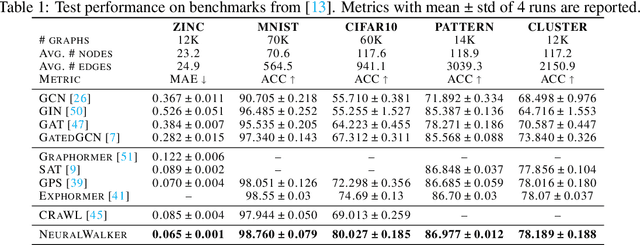

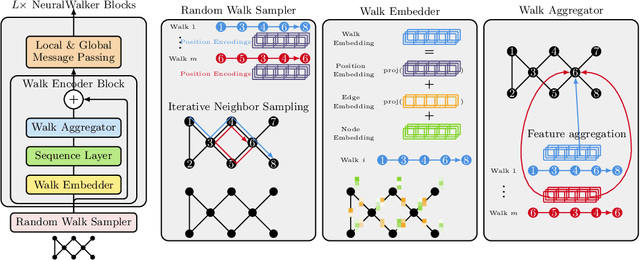

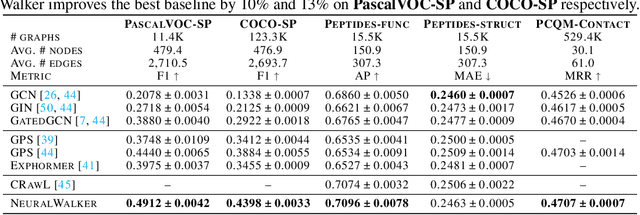

Message-passing graph neural networks (GNNs), while excelling at capturing local relationships, often struggle with long-range dependencies on graphs. Conversely, graph transformers (GTs) enable information exchange between all nodes but oversimplify the graph structure by treating them as a set of fixed-length vectors. This work proposes a novel architecture, NeuralWalker, that overcomes the limitations of both methods by combining random walks with message passing. NeuralWalker achieves this by treating random walks as sequences, allowing for the application of recent advances in sequence models in order to capture long-range dependencies within these walks. Based on this concept, we propose a framework that offers (1) more expressive graph representations through random walk sequences, (2) the ability to utilize any sequence model for capturing long-range dependencies, and (3) the flexibility by integrating various GNN and GT architectures. Our experimental evaluations demonstrate that NeuralWalker achieves significant performance improvements on 19 graph and node benchmark datasets, notably outperforming existing methods by up to 13% on the PascalVoc-SP and COCO-SP datasets. Code is available at https://github.com/BorgwardtLab/NeuralWalker.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge