Learning Invariant Color Features for Person Re-Identification

Paper and Code

Oct 09, 2014

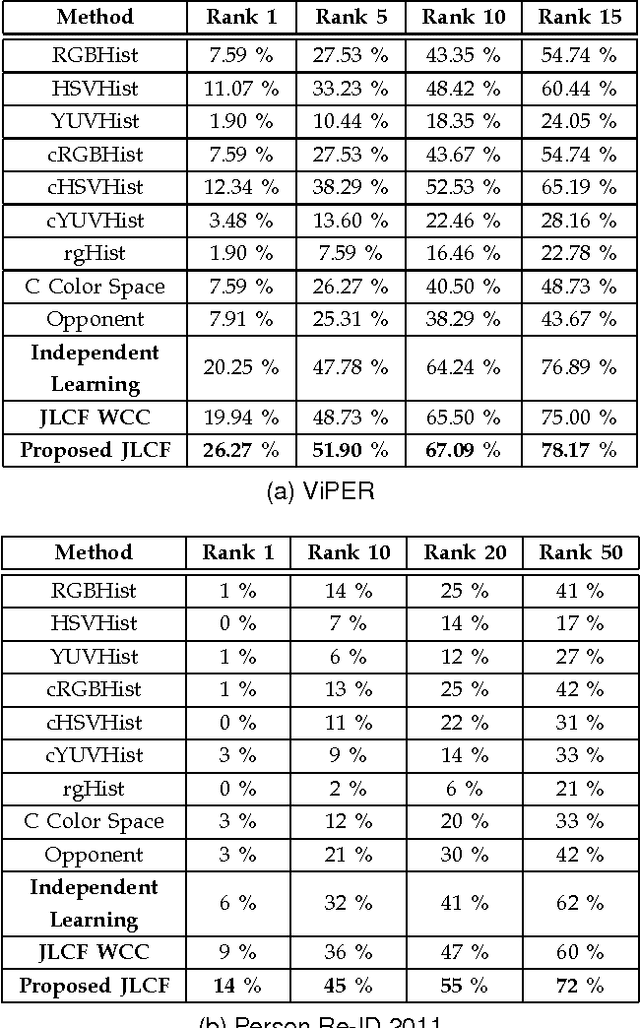

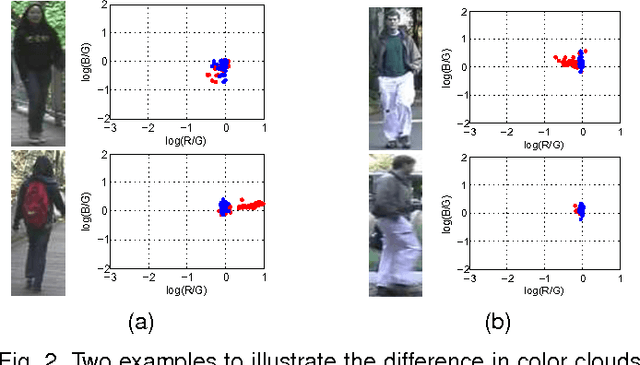

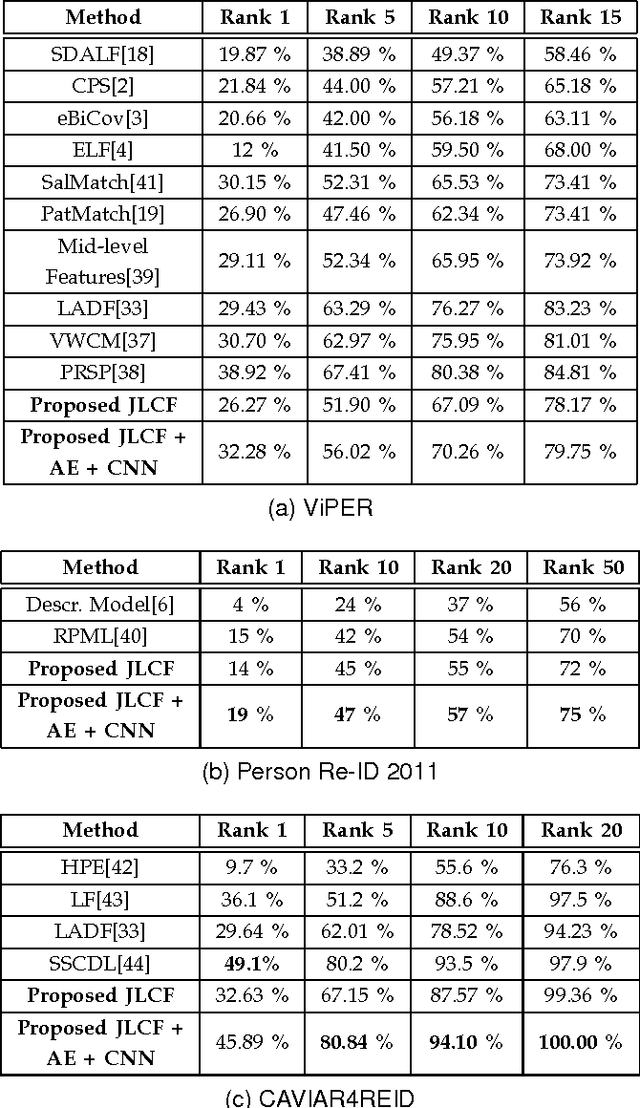

Matching people across multiple camera views, known as person re-identification, is a challenging problem because varying lighting conditions change a subject's visual appearance: the perceived color of the subject differs with the illumination. Previous works either use color as is or address these challenges by designing color spaces that focus on a specific cue. In this paper, we propose a data-driven approach for learning color patterns from pixels sampled from images across two camera views. The intuition behind this work is that, even though the pixel values of the same color differ across views, they should be encoded with the same values. We model color feature generation as a learning problem by jointly learning a linear transformation and a dictionary to encode pixel values. We also analyze different photometric invariant color spaces. Using color as the only cue, we compare our approach with all of these photometric invariant color spaces and show that it outperforms each of them. Combined with other learned low-level and high-level features, our approach obtains promising results on the VIPeR, Person Re-ID 2011, and CAVIAR4REID datasets.
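The abstract does not state the learning objective, so the sketch below only illustrates the general idea under loudly stated assumptions. It is not the authors' method. The joint optimization is replaced by a simplified two-stage stand-in: first a least-squares 3x3 linear map is fit from corresponding pixels of camera B to camera A, then a small color dictionary is learned with plain k-means, and each image's pixels are encoded as a histogram of nearest dictionary atoms. The toy camera model (a per-channel gain on the same colors) and all function names (`normalized_rgb`, `fit_transform`, `kmeans`, `encode`) are hypothetical. For contrast, normalized rgb is included as one classic photometric invariant. It cancels a uniform change in light intensity but not per-channel gains, which is the kind of gap a learned transformation can close.

```python
import numpy as np


def normalized_rgb(pixels):
    """Chromaticity c / (R + G + B) for an (N, 3) pixel array.

    A classic photometric invariant: scaling all channels by the same
    factor (uniform intensity change) leaves the output unchanged.
    """
    pixels = np.asarray(pixels, dtype=float)
    s = pixels.sum(axis=1, keepdims=True)
    return pixels / np.maximum(s, 1e-12)


def fit_transform(xa, xb):
    """Hypothetical stand-in: least-squares W (3x3) with xb @ W.T ~= xa.

    xa, xb are (N, 3) arrays of corresponding pixels from camera A and B.
    """
    M, *_ = np.linalg.lstsq(xb, xa, rcond=None)  # solves xb @ M ~= xa
    return M.T


def kmeans(z, m, iters=20, seed=0):
    """Plain k-means used here as a simple dictionary learner (m atoms)."""
    rng = np.random.default_rng(seed)
    D = z[rng.choice(len(z), size=m, replace=False)].copy()
    for _ in range(iters):
        labels = ((z[:, None, :] - D[None]) ** 2).sum(-1).argmin(1)
        for j in range(m):
            if np.any(labels == j):
                D[j] = z[labels == j].mean(0)
    return D


def encode(pixels, W, D):
    """Transform pixels with W, assign each to its nearest atom in D,
    and return the normalized histogram of assignments."""
    z = np.asarray(pixels, dtype=float) @ W.T
    labels = ((z[:, None, :] - D[None]) ** 2).sum(-1).argmin(1)
    hist = np.bincount(labels, minlength=len(D)).astype(float)
    return hist / hist.sum()


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    xa = rng.uniform(0.1, 1.0, size=(200, 3))  # colors seen by camera A
    gains = np.array([0.6, 0.9, 1.3])          # assumed per-channel change
    xb = xa * gains                            # same colors seen by camera B

    W = fit_transform(xa, xb)                  # maps camera B toward camera A
    D = kmeans(xa, m=8)                        # shared color dictionary
    h_a = encode(xa, np.eye(3), D)
    h_b = encode(xb, W, D)
    print("histograms match:", np.allclose(h_a, h_b))
```

In this toy setting the learned map undoes the channel gains, so both views produce the same code histogram, which mirrors the stated intuition that the same color should be encoded with the same values across views. Real cross-camera changes are not purely linear, and the paper learns the transformation and dictionary jointly rather than in sequence.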
