Learning Continuous 3D Reconstructions for Geometrically Aware Grasping

Oct 02, 2019

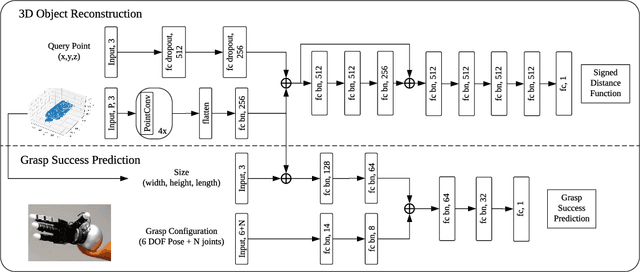

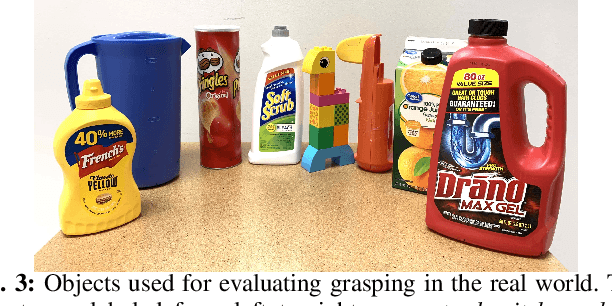

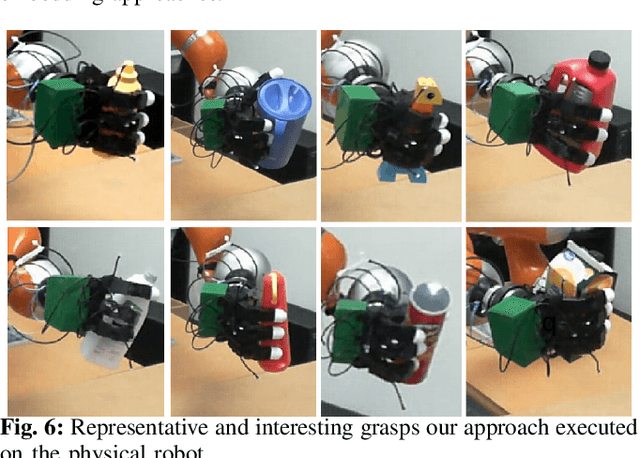

Deep learning has enabled remarkable improvements in grasp synthesis for previously unseen objects observed from partial views. However, existing approaches cannot explicitly reason about the full 3D geometry of the object when selecting a grasp; they rely instead on indirect geometric reasoning acquired while learning grasp success networks. This abandons common-sense geometric reasoning, such as avoiding undesired robot-object collisions. We propose to utilize a novel, learned 3D reconstruction to enable geometric awareness in a grasping system. We leverage the structure of the reconstruction network to learn a grasp success classifier, which serves as the objective function for a continuous grasp optimization. We additionally constrain the optimization explicitly to avoid undesired contact, directly using the reconstruction. By using the reconstruction network, our method can grasp objects from a new camera viewpoint that was not seen during training. Our results, based on real robot execution, show that utilizing learned geometry outperforms alternative formulations for partial-view information. Our results can be found at https://sites.google.com/view/reconstruction-grasp/.
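
The idea of maximizing a grasp success score while the reconstruction keeps the gripper out of the object can be illustrated with a toy sketch. Everything in it is an assumption made for illustration and is not the paper's implementation: an analytic sphere signed-distance function (`sdf_sphere`) stands in for the learned reconstruction, a Gaussian bump (`grasp_score`) stands in for the learned success classifier, the grasp is reduced to a single 3D contact point, and the constraint is handled with a simple quadratic penalty and finite-difference gradient ascent rather than whatever solver the authors use.

```python
import math

def sdf_sphere(p, center=(0.0, 0.0, 0.0), radius=0.05):
    """Signed distance to a sphere (hypothetical stand-in for a learned reconstruction)."""
    return math.dist(p, center) - radius

def grasp_score(p, target=(0.0, 0.0, 0.02), sigma=0.1):
    """Toy smooth score peaked at `target` (hypothetical stand-in for a learned classifier)."""
    return math.exp(-math.dist(p, target) ** 2 / (2.0 * sigma ** 2))

def objective(p, margin=0.01, weight=1e4):
    """Score minus a quadratic penalty for coming closer than `margin` to the surface."""
    violation = max(0.0, margin - sdf_sphere(p))
    return grasp_score(p) - weight * violation ** 2

def numerical_gradient(f, p, eps=1e-5):
    """Central finite-difference gradient of f at point p."""
    grad = []
    for i in range(len(p)):
        hi, lo = list(p), list(p)
        hi[i] += eps
        lo[i] -= eps
        grad.append((f(hi) - f(lo)) / (2.0 * eps))
    return grad

def optimize_grasp(p0, steps=5000, lr=5e-5):
    """Plain gradient ascent on the penalized objective, starting from p0."""
    p = list(p0)
    for _ in range(steps):
        g = numerical_gradient(objective, p)
        p = [pi + lr * gi for pi, gi in zip(p, g)]
    return p

if __name__ == "__main__":
    start = (0.2, 0.0, 0.1)
    final = optimize_grasp(start)
    print("final point:", final)
    print("score:", grasp_score(final), "signed distance:", sdf_sphere(final))
```

The score's peak is deliberately placed inside the sphere, so an unconstrained optimizer would drive the contact point into the object. With the penalty term, the point instead settles just outside the surface, which is the kind of collision avoidance the abstract attributes to using the reconstruction directly.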
