Learning Communication Policies for Different Follower Behaviors in a Collaborative Reference Game

Paper and Code

Feb 07, 2024

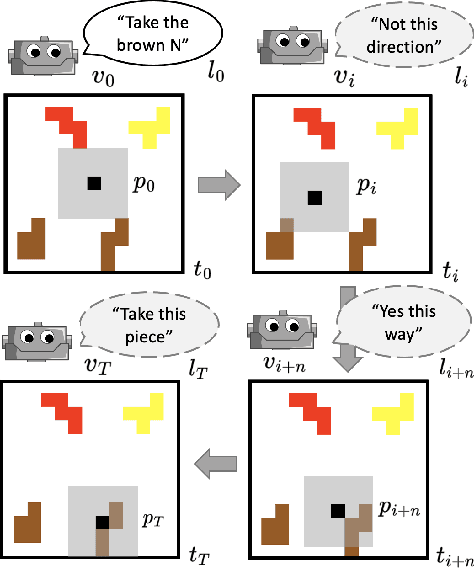

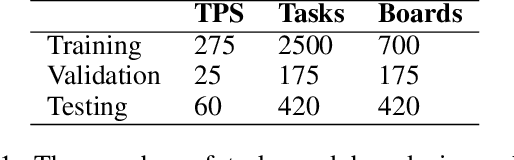

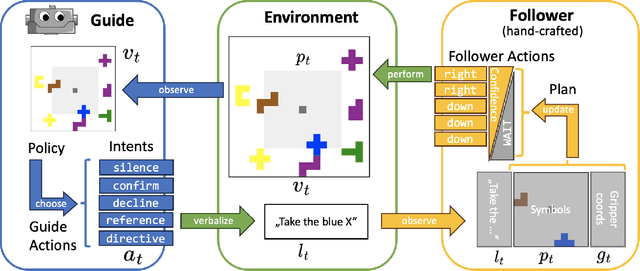

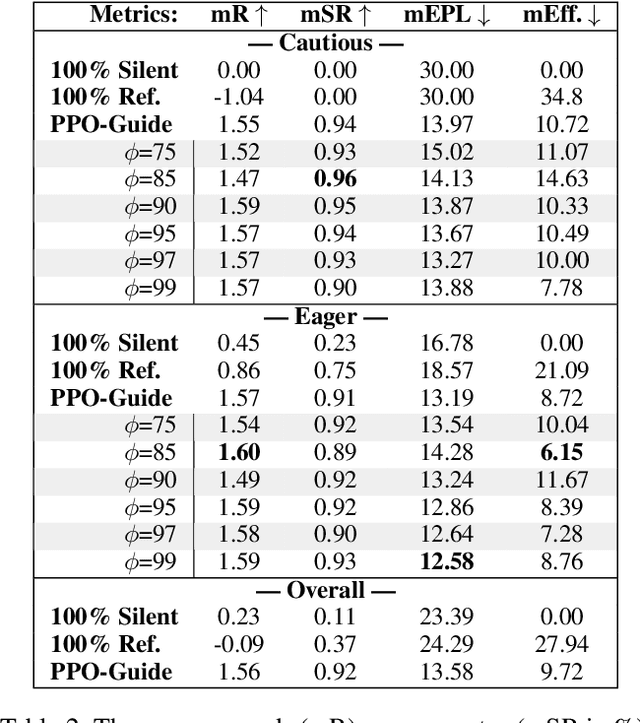

Albrecht and Stone (2018) state that modeling of changing behaviors remains an open problem "due to the essentially unconstrained nature of what other agents may do". In this work we evaluate the adaptability of neural artificial agents towards assumed partner behaviors in a collaborative reference game. In this game success is achieved when a knowledgeable Guide can verbally lead a Follower to the selection of a specific puzzle piece among several distractors. We frame this language grounding and coordination task as a reinforcement learning problem and measure to which extent a common reinforcement training algorithm (PPO) is able to produce neural agents (the Guides) that perform well with various heuristic Follower behaviors that vary along the dimensions of confidence and autonomy. We experiment with a learning signal that in addition to the goal condition also respects an assumed communicative effort. Our results indicate that this novel ingredient leads to communicative strategies that are less verbose (staying silent in some of the steps) and that with respect to that the Guide's strategies indeed adapt to the partner's level of confidence and autonomy.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge