Learning Cluster Structured Sparsity by Reweighting


Oct 11, 2019

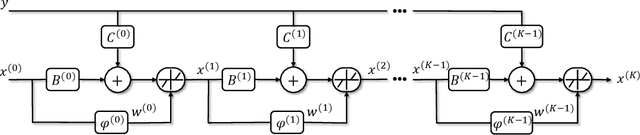

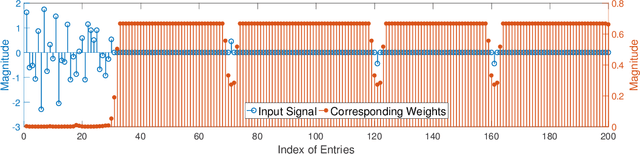

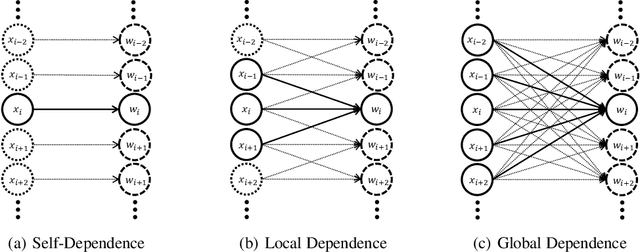

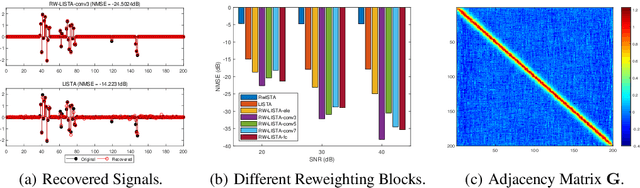

Recently, the paradigm of unfolding iterative algorithms into finite-length feed-forward neural networks has achieved great success in the area of sparse recovery. Benefiting from available training data, the learned networks have achieved state-of-the-art performance in terms of both speed and accuracy. However, the structure behind sparsity, which imposes constraints on the support of sparse signals, is often essential prior knowledge but is seldom considered in existing networks. In this paper, we aim to bridge this gap. Specifically, exploiting the iterative reweighted $\ell_1$ minimization (IRL1) algorithm, we propose to learn the cluster structured sparsity (CSS) by reweighting adaptively. In particular, we first unfold the Reweighted Iterative Shrinkage Algorithm (RwISTA) into an end-to-end trainable deep architecture termed RW-LISTA. Then, instead of element-wise reweighting, global and local reweighting schemes are proposed for cluster structured sparse learning. Numerical experiments further show the superiority of our algorithm over both classical algorithms and learning-based networks on different tasks.
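To make the starting point concrete, the following is a minimal sketch of a plain, un-learned reweighted ISTA iteration with element-wise weights. It is not the paper's RW-LISTA and does not implement the global or local reweighting schemes. The function names, the weight rule `1 / (|x| + eps)`, and the default values of `lam`, `eps`, and `n_iter` are assumptions chosen for illustration.

```python
import numpy as np


def soft_threshold(v, theta):
    """Element-wise soft thresholding; theta may be a scalar or a vector."""
    return np.sign(v) * np.maximum(np.abs(v) - theta, 0.0)


def reweighted_ista(A, y, lam=0.05, eps=0.1, n_iter=200):
    """Illustrative reweighted ISTA for y ~ A @ x with sparse x.

    Each iteration takes a gradient step on 0.5 * ||y - A x||^2, applies a
    weighted soft threshold, and then refreshes the per-entry weights so
    that entries that are currently large are penalized less.
    """
    # Step size 1/L, where L is the squared spectral norm of A.
    L = np.linalg.norm(A, 2) ** 2
    x = np.zeros(A.shape[1])
    w = np.ones(A.shape[1])  # assumed initial weights: plain l1 penalty
    for _ in range(n_iter):
        grad_step = x + A.T @ (y - A @ x) / L
        x = soft_threshold(grad_step, lam * w / L)
        # Assumed element-wise reweighting rule (IRL1 style).
        w = 1.0 / (np.abs(x) + eps)
    return x


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    A = rng.standard_normal((50, 100)) / np.sqrt(50)
    x_true = np.zeros(100)
    x_true[20:26] = rng.standard_normal(6)  # one cluster of nonzeros
    x_hat = reweighted_ista(A, A @ x_true)
    print("nonzeros in estimate:", np.count_nonzero(x_hat))
```

Unfolding, in the sense used above, would truncate this loop to a fixed number of iterations and treat quantities such as the step size and thresholds as trainable parameters of a feed-forward network; the sketch keeps them fixed.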
