Learning Bayesian Nets that Perform Well


Feb 06, 2013

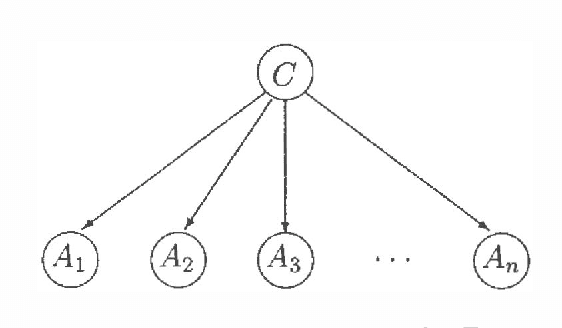

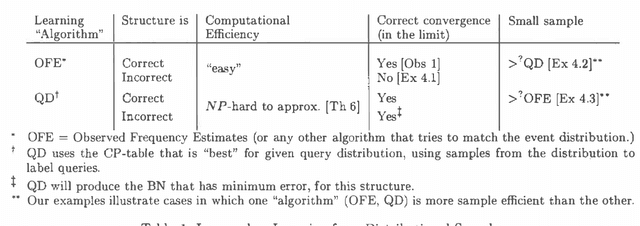

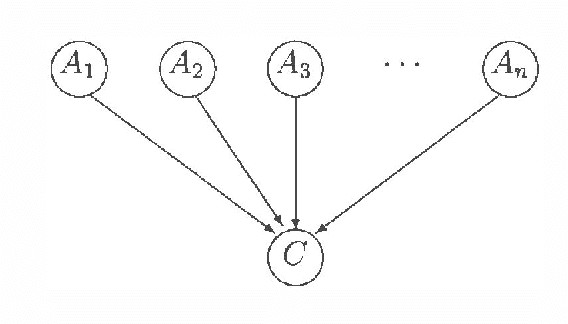

A Bayesian net (BN) is more than a succinct way to encode a probability distribution; it also corresponds to a function used to answer queries. A BN can therefore be evaluated by the accuracy of the answers it returns. Many algorithms for learning BNs, however, attempt to optimize another criterion (usually likelihood, possibly augmented with a regularizing term), which is independent of the distribution of queries that are posed. This paper takes the "performance criterion" seriously, and considers the challenge of computing the BN whose performance (read "accuracy over the distribution of queries") is optimal. We show that many aspects of this learning task are more difficult than the corresponding subtasks in the standard model.

* Appears in Proceedings of the Thirteenth Conference on Uncertainty in Artificial Intelligence (UAI1997)
