Learning as Reinforcement: Applying Principles of Neuroscience for More General Reinforcement Learning Agents


Apr 20, 2020

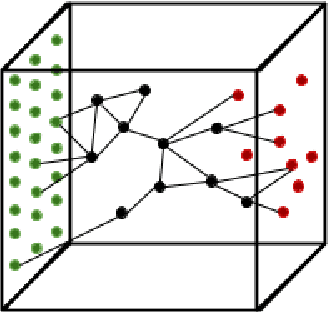

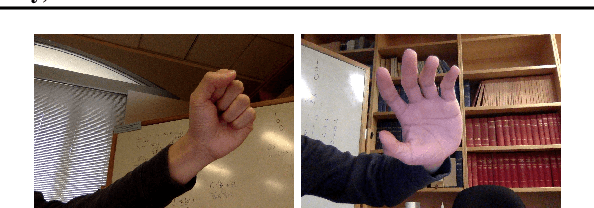

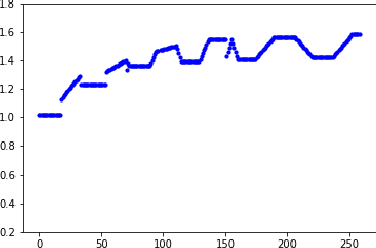

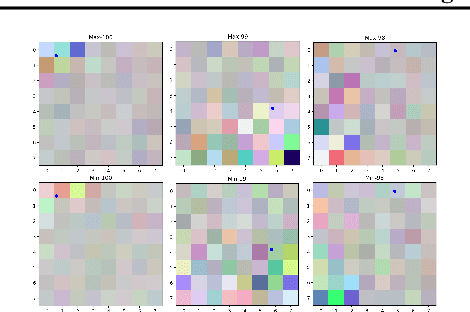

A significant challenge in developing AI that generalizes well is designing agents that learn about their world without being told what to learn, and that apply that learning to challenges with sparse rewards. Moreover, most traditional reinforcement learning approaches explicitly separate learning and decision making in a way that does not correspond to biological learning. We implement an architecture founded on principles of experimental neuroscience by combining computationally efficient abstractions of biological algorithms. Our approach is inspired by research on spike-timing-dependent plasticity, the transition between short- and long-term memory, and the role of various neurotransmitters in rewarding curiosity. The Neurons-in-a-Box architecture can learn in a wholly generalizable manner, and demonstrates an efficient way to build and apply representations without explicitly optimizing over a set of criteria or actions. We find that it performs well in many environments: OpenAI Gym's Mountain Car, which has no reward besides touching a hard-to-reach flag on a hill; Inverted Pendulum, where it learns simple strategies to increase the time it holds a pendulum up; a video stream, where it spontaneously learns to distinguish an open hand from a closed one; and other environments such as Google Chrome's Dinosaur Game.
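For readers unfamiliar with spike-timing-dependent plasticity, the following is a minimal sketch of the textbook pair-based rule: a synapse is strengthened when the presynaptic spike precedes the postsynaptic spike and weakened when the order is reversed, with an effect that decays exponentially in the time gap. This is not the Neurons-in-a-Box implementation, which the abstract does not specify. The function names (`stdp_delta_w`, `apply_stdp`), the amplitudes, the time constants, and the weight bounds are all illustrative assumptions.

```python
import math


def stdp_delta_w(dt, a_plus=0.01, a_minus=0.012, tau_plus=20.0, tau_minus=20.0):
    """Weight change for one spike pair, where dt = t_post - t_pre in ms.

    All default constants are hypothetical values chosen for illustration.
    """
    if dt > 0:
        # Pre fires before post: potentiation, decaying with the gap.
        return a_plus * math.exp(-dt / tau_plus)
    if dt < 0:
        # Post fires before pre: depression, decaying with the gap.
        return -a_minus * math.exp(dt / tau_minus)
    return 0.0


def apply_stdp(w, pre_spikes, post_spikes, w_min=0.0, w_max=1.0):
    """Sum the pairwise updates over all spike pairs, then clip the weight."""
    for t_pre in pre_spikes:
        for t_post in post_spikes:
            w += stdp_delta_w(t_post - t_pre)
    return min(max(w, w_min), w_max)


if __name__ == "__main__":
    # Presynaptic spike at 0 ms, postsynaptic spike at 5 ms: weight increases.
    print(apply_stdp(0.5, [0.0], [5.0]))
    # Reversed order: weight decreases.
    print(apply_stdp(0.5, [5.0], [0.0]))
```

Because the update depends only on locally observed spike times, a rule of this kind needs no global loss function, which is in the spirit of the abstract's claim of learning without explicitly optimizing over a set of criteria or actions.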
