Learning Activation Functions to Improve Deep Neural Networks


Apr 21, 2015

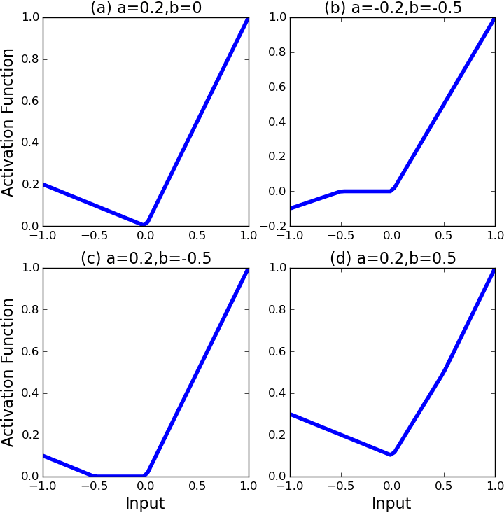

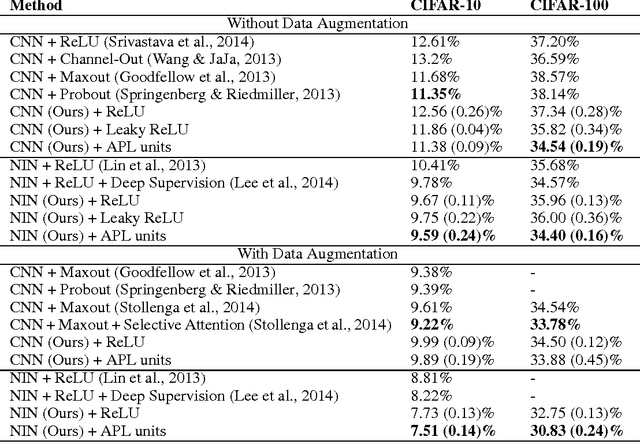

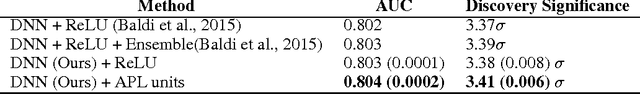

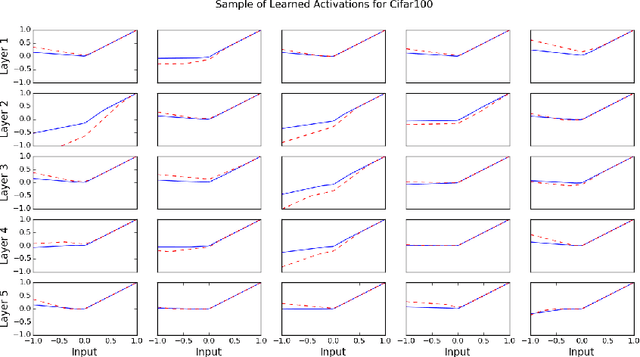

Artificial neural networks typically use a fixed, non-linear activation function at each neuron. We have designed a novel form of piecewise linear activation function that is learned independently for each neuron using gradient descent. With this adaptive activation function, we improve upon deep neural network architectures composed of static rectified linear units, achieving state-of-the-art performance on CIFAR-10 (7.51% test error), CIFAR-100 (30.83% test error), and a high-energy physics benchmark involving Higgs boson decay modes.
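The abstract does not spell out the functional form. A minimal sketch, assuming the hinge-sum form of the paper's adaptive piecewise linear (APL) unit, `h_i(x) = max(0, x) + sum_{s=1..S} a_i^s * max(0, -x + b_i^s)`, where `S` is a fixed number of hinges and the slopes `a_i^s` and hinge locations `b_i^s` are learned separately for each neuron `i`. The helper names (`apl`, `apl_grads`, `mse`, `gd_step`), the learning rate and the toy target are hypothetical. In the paper these parameters are trained jointly with the network weights by backpropagation; here only one neuron's `a` and `b` are fit to a toy target with hand-derived gradients, to show that the activation shape itself is learnable by gradient descent.

```python
from typing import List, Sequence, Tuple


def apl(x: float, a: Sequence[float], b: Sequence[float]) -> float:
    """Assumed APL form: h(x) = max(0, x) + sum_s a[s] * max(0, -x + b[s])."""
    out = max(0.0, x)  # fixed ReLU part
    for a_s, b_s in zip(a, b):
        out += a_s * max(0.0, -x + b_s)  # learned hinge s: slope a_s, location b_s
    return out


def apl_grads(x: float, a: Sequence[float], b: Sequence[float]) -> Tuple[List[float], List[float]]:
    """Partial derivatives of h(x) with respect to each a[s] and each b[s]."""
    grad_a: List[float] = []
    grad_b: List[float] = []
    for a_s, b_s in zip(a, b):
        active = (-x + b_s) > 0.0  # subgradient 0 is chosen exactly at the kink
        grad_a.append(-x + b_s if active else 0.0)
        grad_b.append(a_s if active else 0.0)
    return grad_a, grad_b


def mse(xs: Sequence[float], ts: Sequence[float], a: Sequence[float], b: Sequence[float]) -> float:
    """Mean of 0.5 * (h(x) - t)**2 over the toy data set."""
    return sum(0.5 * (apl(x, a, b) - t) ** 2 for x, t in zip(xs, ts)) / len(xs)


def gd_step(xs, ts, a, b, lr: float = 0.05):
    """One full-batch gradient descent step on mse, updating only this neuron's a and b."""
    n = len(xs)
    ga_tot = [0.0] * len(a)
    gb_tot = [0.0] * len(b)
    for x, t in zip(xs, ts):
        err = apl(x, a, b) - t  # dLoss/dh for the 0.5 * err**2 loss
        ga, gb = apl_grads(x, a, b)
        for s in range(len(a)):
            ga_tot[s] += err * ga[s] / n
            gb_tot[s] += err * gb[s] / n
    new_a = [a_s - lr * g for a_s, g in zip(a, ga_tot)]
    new_b = [b_s - lr * g for b_s, g in zip(b, gb_tot)]
    return new_a, new_b


if __name__ == "__main__":
    xs = [-2.0, -1.0, -0.5, 0.5, 1.0, 2.0]
    ts = [max(0.0, x) + 0.5 * max(0.0, -x) for x in xs]  # hypothetical toy target shape
    a, b = [0.0], [0.0]  # S = 1 hinge, initialised so that h(x) = ReLU(x)
    print("loss before:", mse(xs, ts, a, b))
    for _ in range(500):
        a, b = gd_step(xs, ts, a, b)
    print("loss after:", mse(xs, ts, a, b), "a =", a, "b =", b)
```

Starting from `a = [0.0]`, the unit is exactly a ReLU. Gradient descent then grows the hinge slope so the negative side of the activation bends toward the target, which is the sense in which a per-neuron activation is "learned" rather than fixed.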

* Accepted as a workshop paper contribution at the International Conference on Learning Representations (ICLR) 2015
