Learn to See by Events: RGB Frame Synthesis from Event Cameras

Dec 05, 2018

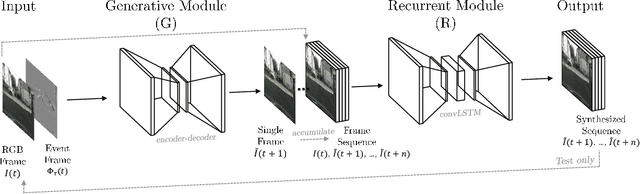

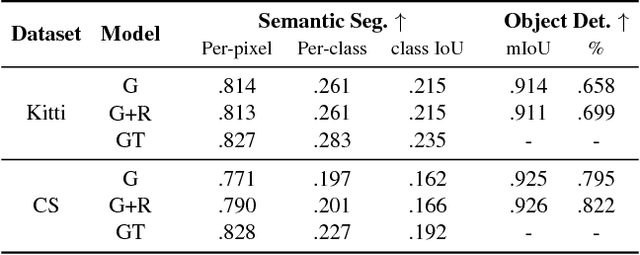

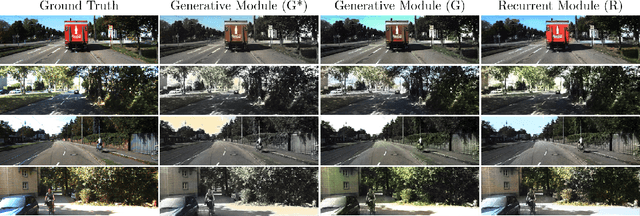

Event cameras are biologically inspired sensors that record the temporal evolution of a scene by capturing only pixel-wise brightness variations. Despite their advantages over traditional cameras, their use remains limited because their output is hard to interpret and is not directly usable by traditional vision algorithms. To address this, we present a framework that exploits the output of event cameras to synthesize RGB frames. In particular, the frame generation relies on an initial or a periodic set of color key-frames and a sequence of intermediate event frames, i.e., gray-level images that integrate the brightness changes captured by the event camera during a short temporal slot. An adversarial architecture combined with a recurrent module is employed for the frame synthesis. Both traditional and event-based datasets are adopted to assess the capabilities of the proposed architecture: pixel-wise and semantic metrics confirm the quality of the synthesized images.
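As an illustration of what an event frame is, the following minimal sketch integrates the events that fall inside a short time window into a gray-level image. It is not the paper's implementation: the event layout `(x, y, timestamp, polarity)`, the function name `accumulate_event_frame`, the mid-gray starting value of 128, and the per-event `step` are all assumptions made for this example.

```python
from typing import Iterable, List, Tuple

# Assumed event layout (hypothetical): (x, y, timestamp_seconds, polarity),
# where polarity is +1 for a brightness increase and -1 for a decrease.
Event = Tuple[int, int, float, int]


def accumulate_event_frame(
    events: Iterable[Event],
    width: int,
    height: int,
    t_start: float,
    t_end: float,
    step: int = 32,
) -> List[List[int]]:
    """Integrate events with t_start <= t < t_end into a gray-level frame.

    Every pixel starts at mid-gray (128); each event shifts its pixel by
    `step` in the direction of its polarity, clamped to the range [0, 255].
    """
    frame = [[128] * width for _ in range(height)]
    for x, y, t, polarity in events:
        if not (t_start <= t < t_end):
            continue  # event lies outside the temporal slot
        if not (0 <= x < width and 0 <= y < height):
            continue  # ignore events outside the sensor area
        value = frame[y][x] + step * polarity
        frame[y][x] = max(0, min(255, value))
    return frame


# Usage: two positive events at (0, 0), one negative event at (1, 0),
# and one event that falls outside the 10 ms window.
events = [(0, 0, 0.001, 1), (0, 0, 0.002, 1), (1, 0, 0.003, -1), (1, 1, 0.5, 1)]
frame = accumulate_event_frame(events, width=2, height=2, t_start=0.0, t_end=0.01)
```

In this sketch, pixels that receive no events stay at mid-gray, so the resulting image encodes only where and in which direction brightness changed during the slot.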