Lattice-Based Unsupervised Test-Time Adaptation of Neural Network Acoustic Models

Paper and Code

Jun 27, 2019

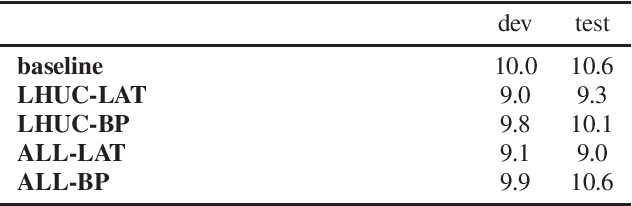

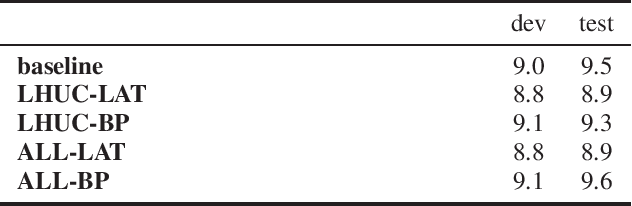

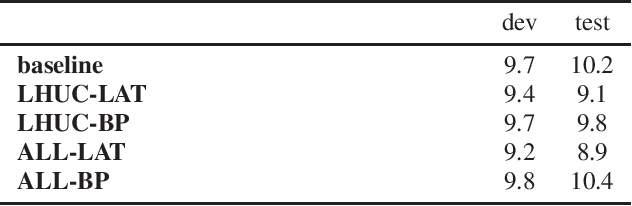

Acoustic model adaptation to unseen test recordings aims to reduce the mismatch between training and testing conditions. Most adaptation schemes for neural network models require the use of an initial one-best transcription for the test data, generated by an unadapted model, in order to estimate the adaptation transform. It has been found that adaptation methods using discriminative objective functions - such as cross-entropy loss - often require careful regularisation to avoid over-fitting to errors in the one-best transcriptions. In this paper we solve this problem by performing discriminative adaptation using lattices obtained from a first pass decoding, an approach that can be readily integrated into the lattice-free maximum mutual information (LF-MMI) framework. We investigate this approach on three transcription tasks of varying difficulty: TED talks, multi-genre broadcast (MGB) and a low-resource language (Somali). We find that our proposed approach enables many more parameters to be adapted without over-fitting being observed, and is successful even when the initial transcription has a WER in excess of 50%.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge