Large Scale Question Answering using Tourism Data

Paper and Code

Sep 08, 2019

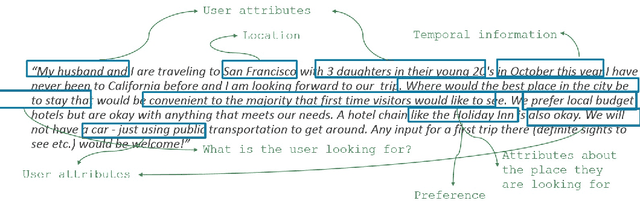

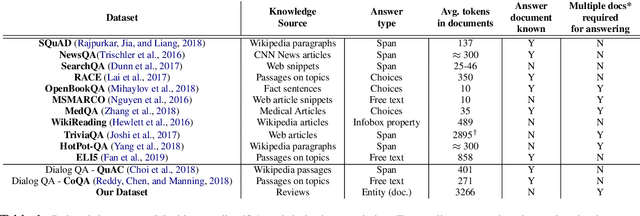

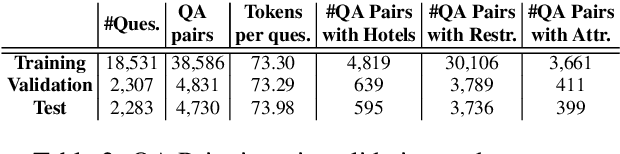

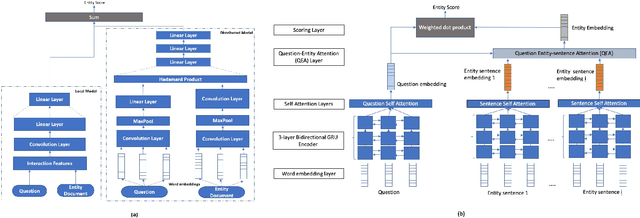

Real world question answering can be significantly more complex than what most existing QA datasets reflect. Questions posed by users on websites, such as online travel forums, may consist of multiple sentences and not everything mentioned in a question may be relevant for finding its answer. Such questions typically have a huge candidate answer space and require complex reasoning over large knowledge corpora. We introduce the novel task of answering entity-seeking recommendation questions using a collection of reviews that describe candidate answer entities. We harvest a QA dataset that contains 48,147 paragraph-sized real user questions from travelers seeking recommendations for hotels, attractions and restaurants. Each candidate answer is associated with a collection of unstructured reviews. This dataset is challenging because commonly used neural architectures for QA are prohibitively expensive for a task of this scale. As a solution, we design a scalable cluster-select-rerank approach. It first clusters text for each entity to identify exemplar sentences describing an entity. It then uses a scalable neural information retrieval (IR) module to subselect a set of potential entities from the large candidate set. A reranker uses a deeper attention-based architecture to pick the best answers from the selected entities. This strategy performs better than a pure IR or a pure attention-based reasoning approach yielding nearly 10% relative improvement in Accuracy@3 over both approaches.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge