LanPose: Language-Instructed 6D Object Pose Estimation for Robotic Assembly

Oct 20, 2023

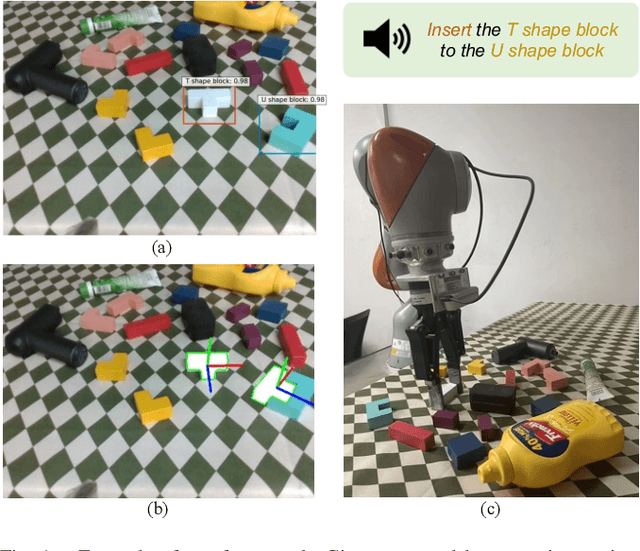

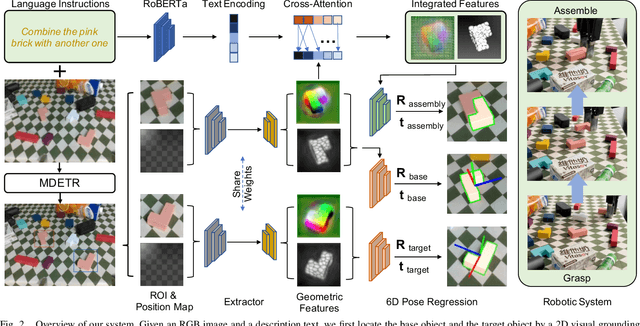

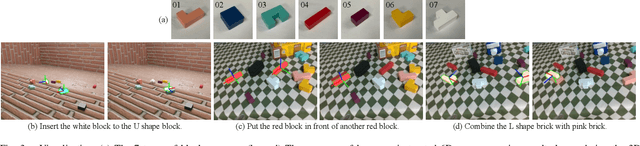

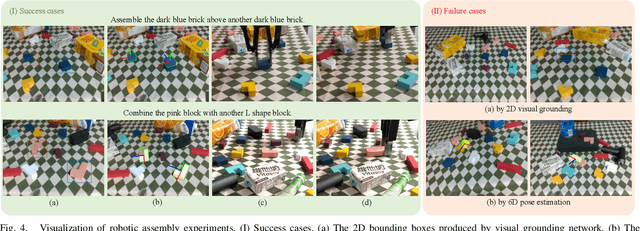

Comprehending natural language instructions is a critical skill for robots that cooperate with humans. In this paper, we aim to learn 6D poses for robotic assembly from natural language instructions. To this end, we propose the Language-Instructed 6D Pose Regression Network (LanPose), which jointly predicts the 6D pose of the observed object and the 6D pose of the corresponding assembly position. The approach fuses geometric and linguistic features: a cross-attention mechanism integrates the multi-modal input, and a language-integrated 6D pose mapping module maps the fused features to a 6D pose in SE(3). To validate the approach, we build an integrated robotic system that perceives, grasps, manipulates, and assembles blocks precisely and robustly in response to language commands. LanPose achieves 98.09 and 93.55 in ADD(-S)-0.1d for 6D object pose and 6D assembly pose prediction, respectively. Both quantitative and qualitative results demonstrate the effectiveness of the proposed language-instructed 6D pose estimation method and its potential to help robots better understand and execute natural language instructions.
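The ADD(-S)-0.1d figures above refer to a standard pose-accuracy metric: a predicted pose counts as correct when the average distance between model points under the ground-truth and predicted poses is below 10% of the object diameter, with the closest-point variant (ADD-S) used for symmetric objects. The following is a minimal NumPy sketch of that general definition, not the authors' evaluation code; all function names and the cube example are illustrative assumptions.

```python
import numpy as np

def transform(points, R, t):
    """Apply a rigid transform (R, t) to an (N, 3) array of model points."""
    return points @ R.T + t

def add_error(points, R_gt, t_gt, R_pred, t_pred):
    """ADD: mean distance between corresponding transformed model points."""
    diff = transform(points, R_gt, t_gt) - transform(points, R_pred, t_pred)
    return float(np.linalg.norm(diff, axis=1).mean())

def add_s_error(points, R_gt, t_gt, R_pred, t_pred):
    """ADD-S: mean distance from each ground-truth point to its closest
    predicted point, used for symmetric objects."""
    gt = transform(points, R_gt, t_gt)
    pred = transform(points, R_pred, t_pred)
    # Brute-force (N, N) pairwise distances; fine for small point sets.
    dists = np.linalg.norm(gt[:, None, :] - pred[None, :, :], axis=2)
    return float(dists.min(axis=1).mean())

def diameter(points):
    """Object diameter d: largest distance between any two model points."""
    pairwise = np.linalg.norm(points[:, None, :] - points[None, :, :], axis=2)
    return float(pairwise.max())

def accuracy_at(errors, diam, ratio=0.1):
    """Percentage of samples whose error is below ratio * diameter."""
    errors = np.asarray(errors, dtype=float)
    return float((errors < ratio * diam).mean() * 100.0)

# Hypothetical example: the 8 corners of a unit cube centred at the origin.
cube = np.array([[x, y, z] for x in (-0.5, 0.5)
                 for y in (-0.5, 0.5)
                 for z in (-0.5, 0.5)])
identity = np.eye(3)
zero = np.zeros(3)
# A 90-degree rotation about z maps the cube onto itself.
rot_z90 = np.array([[0.0, -1.0, 0.0],
                    [1.0, 0.0, 0.0],
                    [0.0, 0.0, 1.0]])

shift_err = add_error(cube, identity, zero, identity, np.array([0.01, 0.0, 0.0]))
sym_add = add_error(cube, identity, zero, rot_z90, zero)
sym_add_s = add_s_error(cube, identity, zero, rot_z90, zero)
```

In this example the rotated cube is indistinguishable from the original, so ADD-S stays near zero while plain ADD reports a large error. This is why the symmetric variant is used for objects such as blocks.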
