LAGUNA: LAnguage Guided UNsupervised Adaptation with structured spaces


Nov 23, 2024

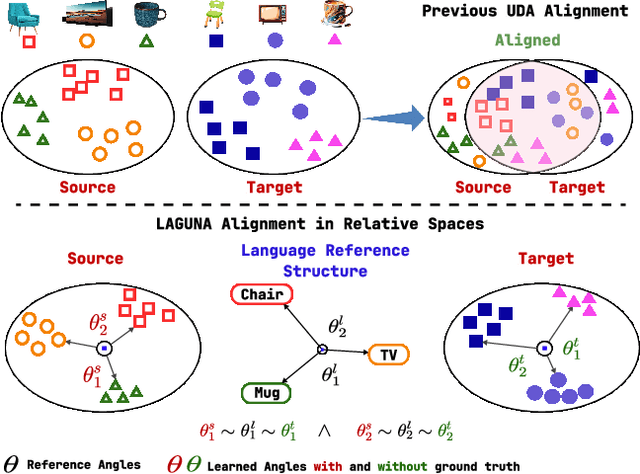

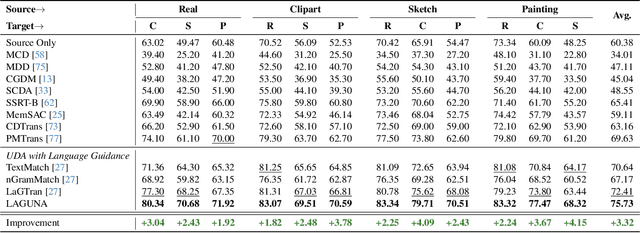

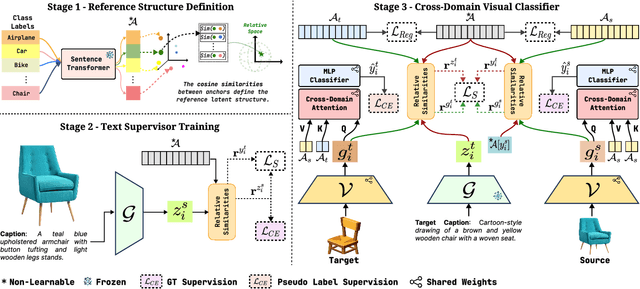

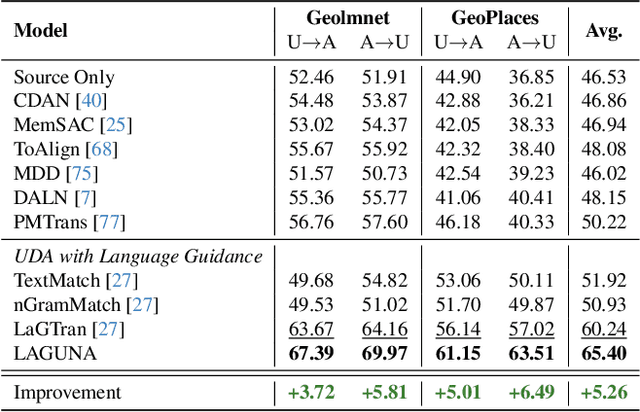

Unsupervised domain adaptation remains a critical challenge in enabling the transfer of model knowledge to unseen domains. Existing methods struggle to balance the need for domain-invariant representations with the preservation of domain-specific features. This is often due to alignment approaches that force samples with similar semantics to be projected close together in the latent space despite drastic domain differences. We introduce LAGUNA (LAnguage Guided UNsupervised Adaptation with structured spaces), a novel approach that shifts the focus from aligning representations in absolute coordinates to aligning the relative positioning of equivalent concepts in latent spaces. LAGUNA defines a domain-agnostic structure based on the semantic/geometric relationships between class labels in language space and uses it to guide adaptation, ensuring that the organization of samples in visual space reflects the reference inter-class relationships while preserving domain-specific characteristics. Remarkably, LAGUNA surpasses previous works in 18 different adaptation scenarios across four diverse image and video datasets, with average accuracy improvements of +3.32% on DomainNet, +5.75% on GeoPlaces, and +4.77% on GeoImnet, and a mean class accuracy improvement of +1.94% on EgoExo4D.
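To make the distinction between absolute and relative alignment concrete, the following is a minimal NumPy sketch and not the authors' implementation. It assumes that the reference structure can be summarized as a matrix of pairwise cosine similarities between class-label text embeddings, and that the visual structure can be summarized the same way from per-class mean features. The function names, the mean-squared discrepancy, and the toy data are all hypothetical choices made for illustration.

```python
import numpy as np

def cosine_similarity_matrix(x):
    """Pairwise cosine similarities between the rows of x."""
    x = x / np.linalg.norm(x, axis=1, keepdims=True)
    return x @ x.T

def class_prototypes(features, labels, num_classes):
    """Mean feature per class (assumes every class appears at least once)."""
    return np.stack([features[labels == c].mean(axis=0) for c in range(num_classes)])

def structure_discrepancy(text_embeddings, features, labels):
    """Hypothetical measure: mean squared gap between the inter-class
    similarity structure in language space and in visual space."""
    num_classes = text_embeddings.shape[0]
    reference = cosine_similarity_matrix(text_embeddings)
    visual = cosine_similarity_matrix(class_prototypes(features, labels, num_classes))
    return float(np.mean((reference - visual) ** 2))

# Toy data (assumption): 3 classes, 4-dimensional embeddings in both spaces.
rng = np.random.default_rng(0)
text_embeddings = rng.normal(size=(3, 4))
labels = np.array([0, 0, 1, 1, 2, 2])
features = text_embeddings[labels]  # visual features that mirror the text structure

# A random orthogonal map changes every absolute coordinate of the features
# but leaves all pairwise cosine similarities untouched.
q, _ = np.linalg.qr(rng.normal(size=(4, 4)))
rotated_features = features @ q

print(structure_discrepancy(text_embeddings, features, labels))
print(structure_discrepancy(text_embeddings, rotated_features, labels))
```

In this toy setting both printed values are zero up to floating-point error, even though the rotated features sit at entirely different absolute positions. This is the property the abstract appeals to: a domain can keep its own coordinate frame as long as the relative arrangement of classes matches the reference structure.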
