Knowledge Sheaves: A Sheaf-Theoretic Framework for Knowledge Graph Embedding


Oct 07, 2021

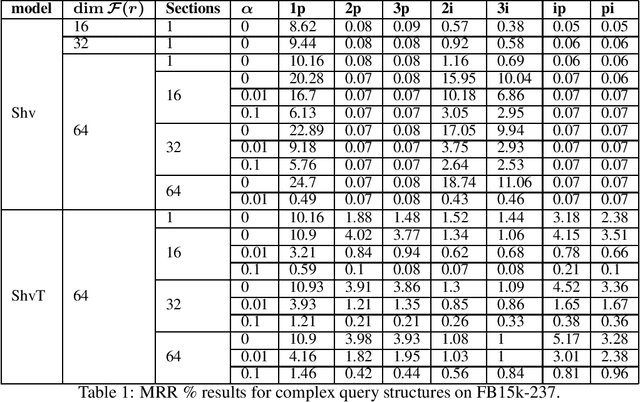

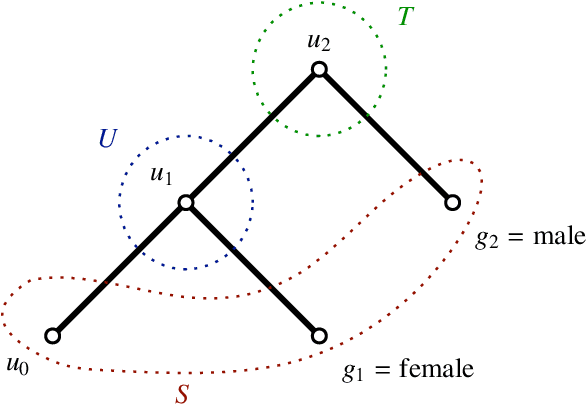

Knowledge graph embedding involves learning representations of entities (the vertices of the graph) and relations (the edges of the graph) such that the resulting representations encode the known factual information represented by the knowledge graph, are internally consistent, and can be used in the inference of new relations. We show that knowledge graph embedding is naturally expressed in the topological and categorical language of *cellular sheaves*: learning a knowledge graph embedding corresponds to learning a *knowledge sheaf* over the graph, subject to certain constraints. In addition to providing a generalized framework for reasoning about knowledge graph embedding models, this sheaf-theoretic perspective admits the expression of a broad class of prior constraints on embeddings and offers novel inferential capabilities. We leverage the recently developed spectral theory of sheaf Laplacians to understand the local and global consistency of embeddings and develop new methods for reasoning over composite relations through harmonic extension with respect to the sheaf Laplacian. We then implement these ideas to highlight the benefits of the extensions inspired by this new perspective.
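To make the two central objects of the abstract concrete, the following is a minimal NumPy sketch of a sheaf Laplacian and of harmonic extension on a toy graph. It is an illustration under stated assumptions, not the authors' implementation: the function names `sheaf_laplacian` and `harmonic_extension`, the edge-tuple layout, and the toy data are all hypothetical. It assumes every vertex stalk has the same dimension `d`, builds the coboundary map from per-edge restriction maps, forms the Laplacian as the coboundary transposed times itself, and fills in unknown (interior) embeddings from fixed (boundary) ones by solving the corresponding linear system, which requires the interior block of the Laplacian to be invertible.

```python
import numpy as np

def sheaf_laplacian(num_vertices, edges, d):
    """Build L = delta^T delta for a cellular sheaf on a directed graph.

    edges: list of (tail, head, F_tail, F_head), where F_tail and F_head are
    the restriction maps (arrays of shape (edge_dim, d)) from each endpoint's
    stalk into the edge's stalk. This layout is an assumption of the sketch.
    """
    total_edge_dim = sum(F_tail.shape[0] for _, _, F_tail, _ in edges)
    delta = np.zeros((total_edge_dim, num_vertices * d))
    row = 0
    for tail, head, F_tail, F_head in edges:
        edge_dim = F_tail.shape[0]
        # (delta x)_e = F_head x_head - F_tail x_tail
        delta[row:row + edge_dim, tail * d:(tail + 1) * d] = -F_tail
        delta[row:row + edge_dim, head * d:(head + 1) * d] = F_head
        row += edge_dim
    return delta.T @ delta

def harmonic_extension(L, d, boundary, x_boundary):
    """Solve L[U,U] x_U = -L[U,B] x_B for the interior vertices U.

    boundary: list of vertex indices with known embeddings.
    x_boundary: their embeddings, flattened in the same order.
    Assumes at least one interior vertex and an invertible L[U,U].
    """
    n = L.shape[0] // d
    interior = [v for v in range(n) if v not in boundary]
    block = lambda vs: np.concatenate([np.arange(v * d, (v + 1) * d) for v in vs])
    U, B = block(interior), block(boundary)
    x_U = -np.linalg.solve(L[np.ix_(U, U)], L[np.ix_(U, B)] @ x_boundary)
    return interior, x_U.reshape(len(interior), d)

# Toy example: path 0 -> 1 -> 2 with identity restriction maps (constant sheaf).
d = 2
I = np.eye(d)
edges = [(0, 1, I, I), (1, 2, I, I)]
L = sheaf_laplacian(3, edges, d)

# Fix the embeddings of vertices 0 and 2, then infer vertex 1.
x_B = np.array([1.0, 0.0, 3.0, 2.0])
interior, x_mid = harmonic_extension(L, d, boundary=[0, 2], x_boundary=x_B)
print(interior, x_mid)
```

With identity restriction maps the sheaf Laplacian reduces to the ordinary graph Laplacian acting coordinate-wise, so the inferred embedding of the middle vertex is simply the average of its two neighbors. Replacing the identity maps with learned, relation-specific matrices is what would make the extension depend on the relations along the path.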
