Knowledge Infused Learning (K-IL): Towards Deep Incorporation of Knowledge in Deep Learning


Dec 01, 2019

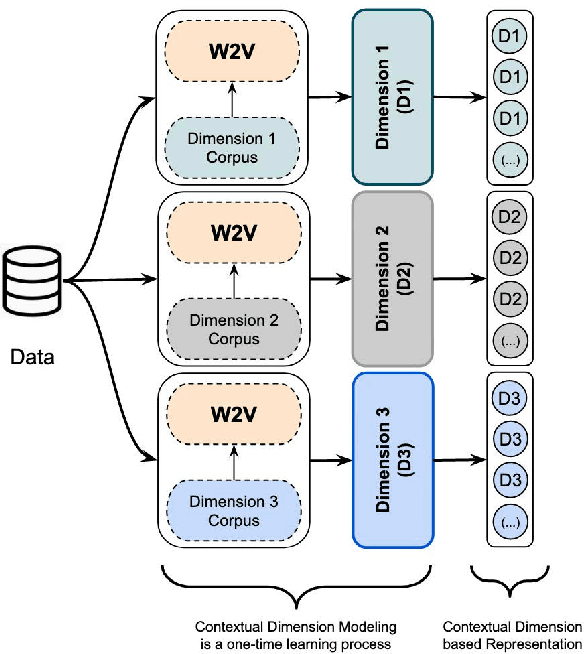

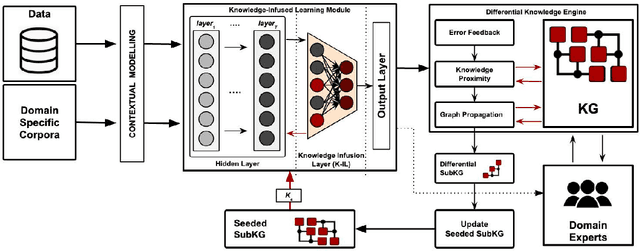

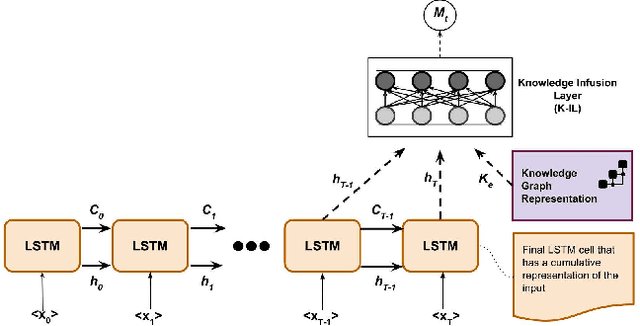

Learning the underlying patterns in data goes beyond instance-based generalization and draws on external knowledge represented in structured graphs or networks. Deep Learning (DL) has made significant advances in probabilistically learning latent patterns in data using multi-layered networks of computational nodes (i.e., neurons or hidden units). However, the tremendous amount of training data involved, the uncertainty in generalization on domain-specific tasks, and the marginal improvement gained from increasing model complexity raise concerns about the features these models learn. Since incorporating domain-specific knowledge helps supervise the learning of features, infusing knowledge from knowledge graphs (KGs) within the hidden layers will further enhance the learning process. Although much work remains, we believe that KGs will play an increasing role in developing hybrid neuro-symbolic intelligent systems (that is, bottom-up deep learning combined with top-down symbolic computing), as well as in building explainable AI systems, for which KGs will provide scaffolding for punctuating neural computing. In this position paper, we describe our motivation for such a hybrid approach and a framework that combines knowledge graphs and neural networks.
