Knowledge Distillation in RNN-Attention Models for Early Prediction of Student Performance

Dec 19, 2024

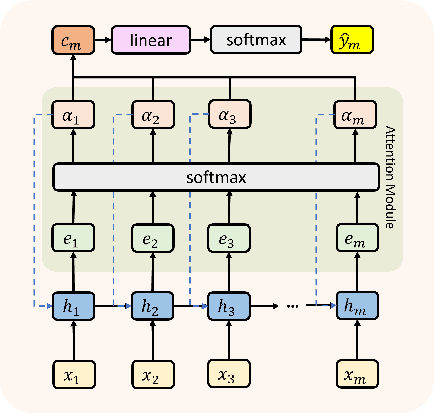

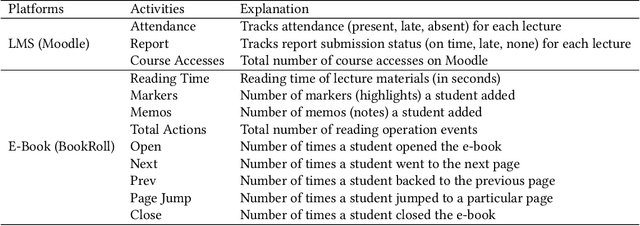

Educational data mining (EDM) is a branch of applied computing that focuses on automatically analyzing data from learning contexts. Early identification of at-risk students is a crucial and widely researched topic in EDM. It enables instructors to help at-risk students stay on track, preventing dropout or failure. Previous studies have predicted students' learning performance to identify at-risk students by applying machine learning to data collected from e-learning platforms. However, most of these studies used the entire course data, which is available only after the course has finished. This does not match the real-world scenario, in which at-risk students may drop out before the course ends. To address this problem, we introduce an RNN-Attention-KD (knowledge distillation) framework that predicts at-risk students early, while the course is still in progress. It leverages the strengths of recurrent neural networks (RNNs) in handling time-sequence data to predict students' performance at each time step, and employs an attention mechanism to focus on relevant time steps for improved predictive accuracy. At the same time, KD is applied to compress the time steps to facilitate early prediction. In an empirical evaluation, RNN-Attention-KD outperforms traditional neural network models in terms of recall and F1-measure. For example, it obtained recall and F1-measure of 0.49 and 0.51 for Weeks 1-3 and 0.51 and 0.61 for Weeks 1-6 across all datasets from four years of a university course. An ablation study then investigated the contributions of different knowledge transfer methods (distillation objectives). We found that hint loss from the hidden layer of the RNN and context vector loss from the attention module on the RNN could enhance the model's performance in identifying at-risk students. These results are relevant for EDM researchers employing deep learning models.
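To make the distillation objectives named above more concrete, the following is a minimal sketch of how a combined loss with a hint term and a context vector term might be assembled. It is not the authors' implementation. Everything specific here is an assumption for illustration: the reading that a teacher sees the full course while a student sees only the early weeks, the use of mean squared error for the hint and context terms, the temperature-softened KL term, the dictionary keys `logits`, `hidden`, and `context`, and the weights `alpha`, `beta`, and `gamma`.

```python
import math

def softmax(logits, temperature=1.0):
    """Numerically stable softmax over a list of logits, softened by a temperature."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def soft_target_loss(student_logits, teacher_logits, temperature=2.0):
    """KL(teacher || student) on softened class distributions, scaled by T^2."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return temperature ** 2 * kl

def mse(a, b):
    """Mean squared error between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)

def hard_label_loss(student_logits, label):
    """Cross-entropy of the student's prediction against the true class index."""
    return -math.log(softmax(student_logits)[label])

def distillation_loss(student, teacher, label,
                      alpha=0.5, beta=0.1, gamma=0.1, temperature=2.0):
    """Combine four terms (the weights are hypothetical, not from the paper):
    hard-label loss, soft-target loss, hint loss on the RNN hidden state,
    and context vector loss on the attention output."""
    hard = hard_label_loss(student["logits"], label)
    soft = soft_target_loss(student["logits"], teacher["logits"], temperature)
    hint = mse(student["hidden"], teacher["hidden"])
    context = mse(student["context"], teacher["context"])
    return (1 - alpha) * hard + alpha * soft + beta * hint + gamma * context

# Toy outputs: the teacher is assumed to have seen every week of the course,
# the student only the first few weeks. All values are made up.
teacher_out = {"logits": [0.2, 1.8], "hidden": [0.5, -0.1, 0.3], "context": [0.4, 0.6]}
student_out = {"logits": [0.9, 1.1], "hidden": [0.1, 0.0, 0.2], "context": [0.3, 0.2]}

loss = distillation_loss(student_out, teacher_out, label=1)
print(f"combined distillation loss: {loss:.4f}")
```

In this sketch, the hint and context terms vanish when the student's hidden state and attention context match the teacher's, so they only penalize the student for diverging from the representations the teacher learned from the longer sequence. In a real training setup these terms would be computed on tensors inside a deep learning framework and averaged over a batch.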
