Keep Your Friends Close and Your Counterfactuals Closer: Improved Learning From Closest Rather Than Plausible Counterfactual Explanations in an Abstract Setting

Paper and Code

May 11, 2022

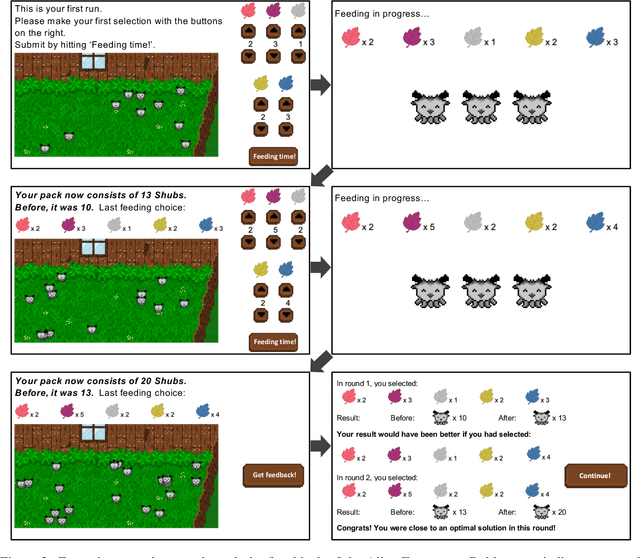

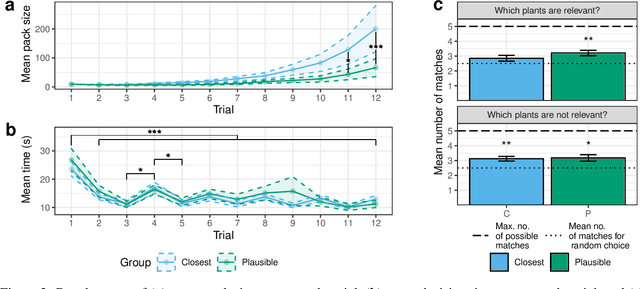

Counterfactual explanations (CFEs) highlight what changes to a model's input would have changed its prediction in a particular way. CFEs have gained considerable traction as a psychologically grounded solution for explainable artificial intelligence (XAI). Recent innovations introduce the notion of computational plausibility for automatically generated CFEs, enhancing their robustness by exclusively creating plausible explanations. However, the practical benefits of such a constraint for user experience and behavior are as yet unclear. In this study, we evaluate the objective and subjective usability of computationally plausible CFEs in an iterative learning design targeting novice users. We rely on a novel, game-like experimental design revolving around an abstract scenario. Our results show that novice users actually benefit less from receiving computationally plausible CFEs than from receiving closest CFEs, which produce minimal changes leading to the desired outcome. Responses in a post-game survey reveal no differences in subjective user experience between the two groups. Following the view of psychological plausibility as comparative similarity, this may be explained by the fact that users in the closest condition experience their CFEs as more psychologically plausible than the computationally plausible counterparts. In sum, our work highlights a little-considered divergence between the definitions of computational plausibility and psychological plausibility, critically confirming the need to incorporate human behavior, preferences, and mental models as early as the design stages of XAI approaches. In the interest of reproducible research, all source code, acquired user data, and evaluation scripts of the current study are available at https://github.com/ukuhl/PlausibleAlienZoo
