Just One Moment: Inconspicuous One Frame Attack on Deep Action Recognition


Nov 30, 2020

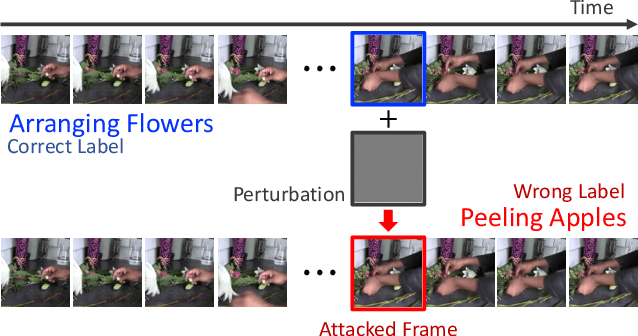

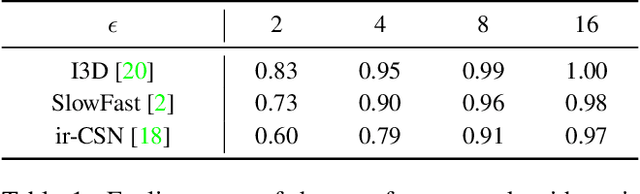

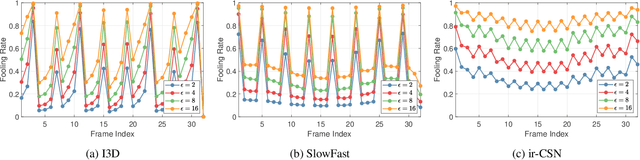

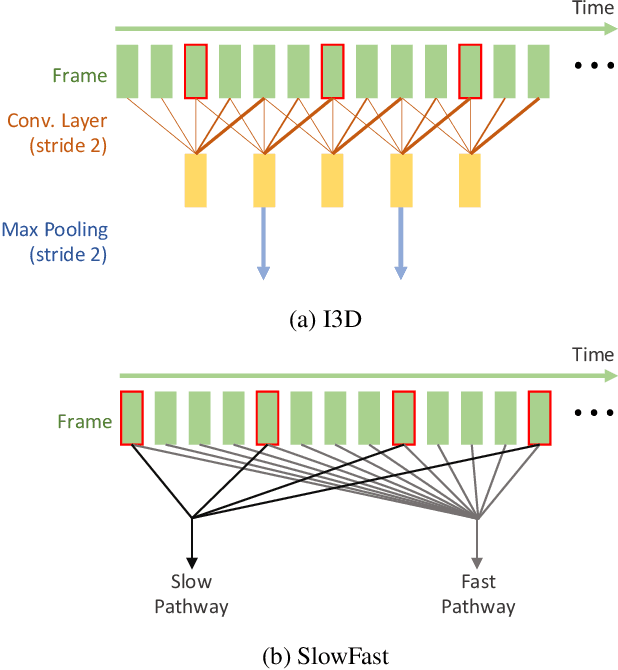

Video-based action recognition has been studied extensively in recent years. In this paper, we study the vulnerability of deep learning-based action recognition methods to adversarial attacks, using a new one frame attack that adds an inconspicuous perturbation to only a single frame of a given video clip. We investigate the effectiveness of our one frame attack on state-of-the-art action recognition models, along with a thorough analysis of their vulnerability in terms of model structure and the perceivability of the perturbation. Our method achieves high fooling rates and produces perturbations that are hardly perceivable to human observers, as evaluated by a subjective test. In addition, we present a video-agnostic approach that finds a universal perturbation.
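To make the idea of perturbing only a single frame concrete, the following is a minimal sketch and not the method from the paper. It assumes a toy linear classifier over a flattened clip and takes one sign-gradient step (in the style of FGSM) that is masked so it touches one frame only. The function name `one_frame_perturb`, the parameters `frame_idx` and `eps`, the clip shape, and the random weights are all hypothetical choices made for illustration.

```python
import numpy as np


def softmax(z):
    """Numerically stable softmax over a 1-D logit vector."""
    z = z - z.max()
    e = np.exp(z)
    return e / e.sum()


def one_frame_perturb(video, weights, label, frame_idx, eps=0.01):
    """Return a copy of `video` with a sign-gradient step applied to one frame.

    video:   array of shape (T, H, W, C) with values in [0, 1]
    weights: array of shape (num_classes, T*H*W*C), a toy linear model
             (assumption: stands in for a real action recognition network)
    """
    x = video.reshape(-1)
    probs = softmax(weights @ x)
    onehot = np.zeros_like(probs)
    onehot[label] = 1.0
    # Gradient of the cross-entropy loss with respect to the input clip.
    grad = (weights.T @ (probs - onehot)).reshape(video.shape)
    # Mask that keeps the perturbation on a single frame only.
    mask = np.zeros_like(video)
    mask[frame_idx] = 1.0
    adv = video + eps * np.sign(grad) * mask
    return np.clip(adv, 0.0, 1.0)


rng = np.random.default_rng(0)
video = rng.uniform(0.2, 0.8, size=(8, 4, 4, 3))  # 8 frames of 4x4 RGB
weights = rng.normal(size=(5, video.size))        # 5 hypothetical classes
adv = one_frame_perturb(video, weights, label=2, frame_idx=3, eps=0.01)

# Indices of frames that differ from the original clip.
per_frame_change = np.abs(adv - video).reshape(video.shape[0], -1).max(axis=1)
changed = np.flatnonzero(per_frame_change > 0)
```

In this sketch `changed` contains only the index of the attacked frame, and the perturbation magnitude is bounded by `eps`. A real attack would iterate such steps against an actual video model and choose the frame and perturbation budget with perceptibility in mind.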
