Joint Sensing and Task-Oriented Communications with Image and Wireless Data Modalities for Dynamic Spectrum Access

Dec 21, 2023

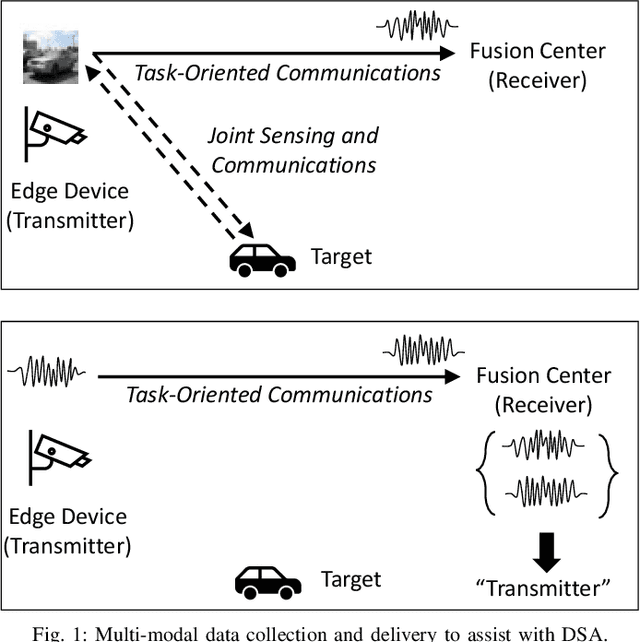

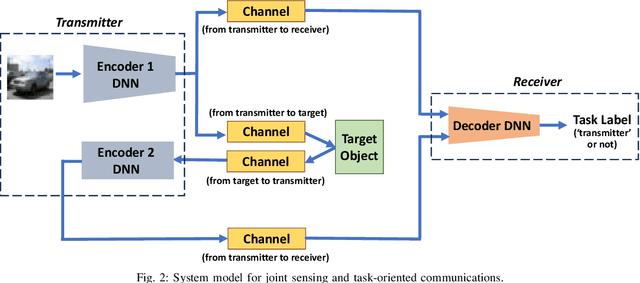

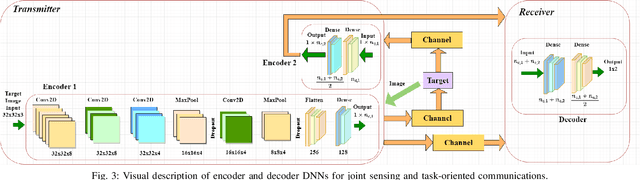

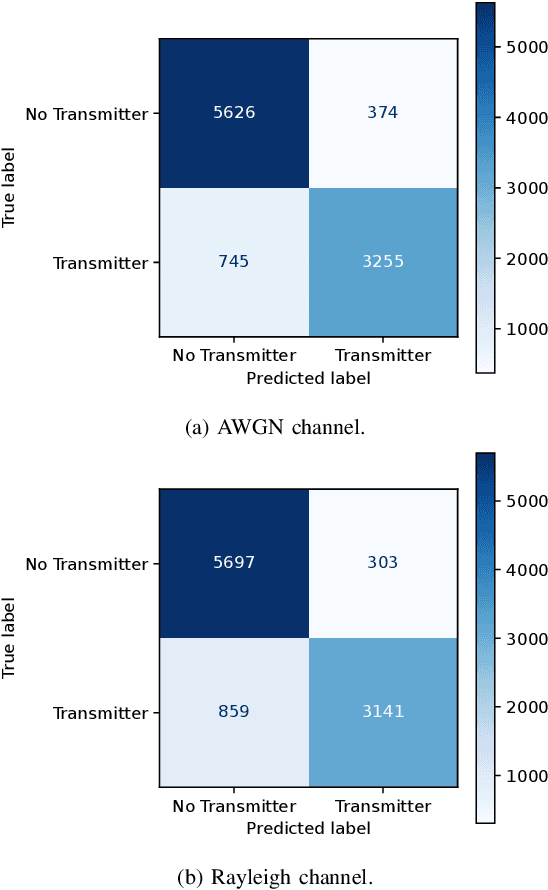

This paper introduces a deep learning approach to dynamic spectrum access that combines image and spectrum data to identify potential transmitters. We consider an edge device equipped with a camera that captures images of objects, such as vehicles, that may harbor transmitters. Recognizing the computational constraints and trust issues associated with on-device computation, we propose a collaborative system in which the edge device communicates selectively processed information to a trusted receiver acting as a fusion center, where a decision is made on whether a potential transmitter is present. To achieve this, we employ task-oriented communications, using an encoder at the transmitter for joint source coding, channel coding, and modulation. This architecture efficiently transmits the reduced-dimension information that is essential for object classification. At the same time, the transmitted signals may reflect off objects and return to the transmitter, allowing it to collect target sensing data. The collected sensing data then undergoes a second round of encoding at the transmitter, and the resulting reduced-dimension information is communicated to the fusion center through task-oriented communications. On the receiver side, a decoder identifies a transmitter by fusing the data received through joint sensing and task-oriented communications. The two encoders at the transmitter and the decoder at the receiver are jointly trained, integrating image classification and wireless signal detection. Using AWGN and Rayleigh channel models, we demonstrate the effectiveness of the proposed approach, showing high accuracy in transmitter identification across diverse channel conditions while sustaining low latency in decision making.
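The data flow described in the abstract can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: the single-layer encoders, the random untrained weights, the dimensions, the real-valued fading gain, and all function names (`encode`, `decode`, `awgn`, `rayleigh`) are assumptions chosen for illustration, and the image and sensing inputs are random placeholders. In the paper, the two encoders and the decoder are neural networks that are trained jointly, which this sketch omits.

```python
import numpy as np

rng = np.random.default_rng(0)

def normalize_power(x):
    """Scale each row to unit average power per channel use."""
    return x / np.sqrt(np.mean(x ** 2, axis=1, keepdims=True))

def encode(x, w):
    """Hypothetical one-layer encoder: reduce dimension, then fix transmit power."""
    return normalize_power(np.tanh(x @ w))

def awgn(x, snr_db, rng):
    """Add white Gaussian noise; signal power is assumed to be 1."""
    noise_std = np.sqrt(10 ** (-snr_db / 10))
    return x + noise_std * rng.standard_normal(x.shape)

def rayleigh(x, snr_db, rng):
    """Flat Rayleigh fading (one real gain per sample, E[h^2] = 1), then AWGN."""
    h = rng.rayleigh(scale=np.sqrt(0.5), size=(x.shape[0], 1))
    return awgn(h * x, snr_db, rng)

def decode(y_img, y_sense, w):
    """Hypothetical decoder: fuse both received signals into P(transmitter present)."""
    fused = np.concatenate([y_img, y_sense], axis=1)
    return 1.0 / (1.0 + np.exp(-(fused @ w)))

# Assumed sizes: 4 samples, 64 image features, 32 sensing features,
# 8 channel uses per encoder output.
batch, img_dim, sense_dim, n_uses = 4, 64, 32, 8
images = rng.standard_normal((batch, img_dim))    # placeholder image features
echoes = rng.standard_normal((batch, sense_dim))  # placeholder sensing returns

# Random, untrained weights standing in for jointly trained networks.
w_img = rng.standard_normal((img_dim, n_uses)) / np.sqrt(img_dim)
w_sense = rng.standard_normal((sense_dim, n_uses)) / np.sqrt(sense_dim)
w_dec = rng.standard_normal((2 * n_uses, 1)) / np.sqrt(2 * n_uses)

tx_img = encode(images, w_img)      # first encoder: image branch
tx_sense = encode(echoes, w_sense)  # second encoder: sensing branch

snr_db = 10.0
p_awgn = decode(awgn(tx_img, snr_db, rng), awgn(tx_sense, snr_db, rng), w_dec)
p_ray = decode(rayleigh(tx_img, snr_db, rng), rayleigh(tx_sense, snr_db, rng), w_dec)
```

Each encoder output occupies only `n_uses` channel uses, far fewer than the input dimension, which reflects the reduced-dimension transmission the abstract describes. Because the weights are untrained, the output probabilities carry no meaning here; the sketch only shows how the two branches pass through the channel and are fused at the receiver.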
