Joint Representation of Multiple Geometric Priors via a Shape Decomposition Model for Single Monocular 3D Pose Estimation

May 31, 2019

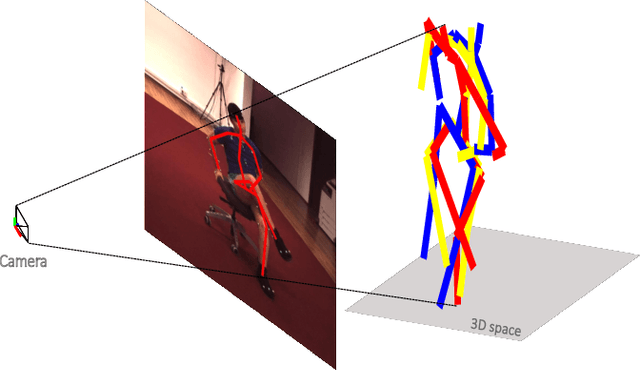

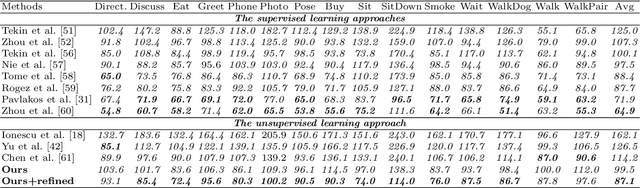

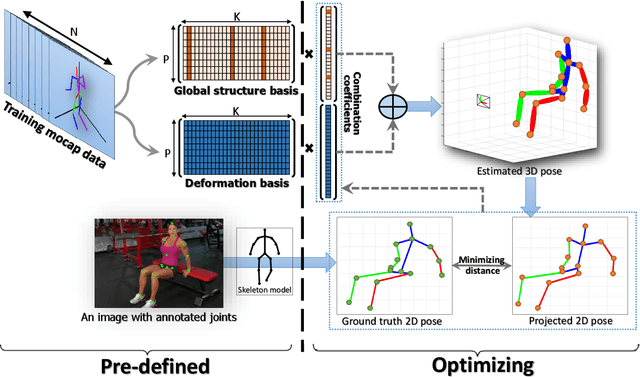

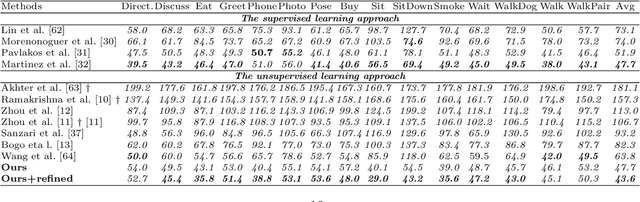

In this paper, we aim to recover the 3D human pose from the 2D body joints of a single image. The major challenge in this task is depth ambiguity, since different 3D poses may produce similar 2D poses. Although both unsupervised and supervised learning approaches have recently advanced this problem, the performance of most of them is strongly affected by insufficient diversity and richness of the training data. To alleviate this issue, we propose an unsupervised learning approach that can estimate a variety of complex poses well with limited training data. Specifically, we propose a Shape Decomposition Model (SDM) in which a 3D pose is considered as the superposition of two parts: a global structure and some deformations. Based on SDM, we estimate these two parts explicitly by solving for two sets of differently distributed combination coefficients of geometric priors. In addition, to obtain the geometric priors, we propose a joint dictionary learning algorithm that extracts both coarse and fine pose clues simultaneously from limited training data. Quantitative evaluations on several widely used datasets demonstrate that our approach yields better performance than other competitive approaches. In particular, our approach achieves significant improvements on some categories with more complex deformations. Furthermore, qualitative experiments conducted on in-the-wild images also show the effectiveness of the proposed approach.
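The two ideas in the abstract, a pose written as global structure plus deformations and the depth ambiguity of 2D joints, can be illustrated with a minimal toy sketch. Everything in it is an assumption made for illustration: the function names (`combine`, `compose_pose`, `project_orthographic`), the three-joint bases, the coefficient values, and the orthographic camera are hypothetical and not taken from the paper. It does not show the paper's joint dictionary learning or the distributions imposed on the two coefficient sets.

```python
"""Toy sketch: pose = global structure + deformation, and depth ambiguity.

All bases, coefficients, and the orthographic camera are illustrative
assumptions, not the paper's learned dictionaries or camera model.
"""
from typing import List, Sequence

Pose3D = List[List[float]]  # J joints, each [x, y, z]


def combine(bases: Sequence[Pose3D], coeffs: Sequence[float]) -> Pose3D:
    """Return the linear combination sum_k coeffs[k] * bases[k]."""
    n_joints = len(bases[0])
    pose = [[0.0, 0.0, 0.0] for _ in range(n_joints)]
    for basis, c in zip(bases, coeffs):
        for j in range(n_joints):
            for axis in range(3):
                pose[j][axis] += c * basis[j][axis]
    return pose


def compose_pose(global_bases: Sequence[Pose3D], global_coeffs: Sequence[float],
                 deform_bases: Sequence[Pose3D], deform_coeffs: Sequence[float]) -> Pose3D:
    """Superpose a global-structure part and a deformation part."""
    g = combine(global_bases, global_coeffs)
    d = combine(deform_bases, deform_coeffs)
    return [[g[j][axis] + d[j][axis] for axis in range(3)] for j in range(len(g))]


def project_orthographic(pose: Pose3D) -> List[List[float]]:
    """Drop the depth coordinate (assumed orthographic camera along z)."""
    return [[x, y] for x, y, _z in pose]


# Hypothetical priors for a three-joint "skeleton".
global_bases = [[[0, 0, 0], [0, 1, 0], [1, 1, 0]]]    # coarse structure
deform_bases = [[[0, 0, 0], [0, 0, 0], [0, 0, 0.5]]]  # moves one joint in depth

# Same global coefficients, opposite deformation coefficients.
pose_a = compose_pose(global_bases, [1.0], deform_bases, [1.0])
pose_b = compose_pose(global_bases, [1.0], deform_bases, [-1.0])

# The two 3D poses differ, yet their 2D projections coincide.
print(pose_a != pose_b)
print(project_orthographic(pose_a) == project_orthographic(pose_b))
```

In this toy setting the 2D joints alone cannot determine the sign of the deformation coefficient, which is why additional prior knowledge about plausible poses is needed to pick one 3D pose among those consistent with the observation.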
