Joint Embedding Learning and Low-Rank Approximation: A Framework for Incomplete Multi-view Learning

Paper and Code

Dec 25, 2018

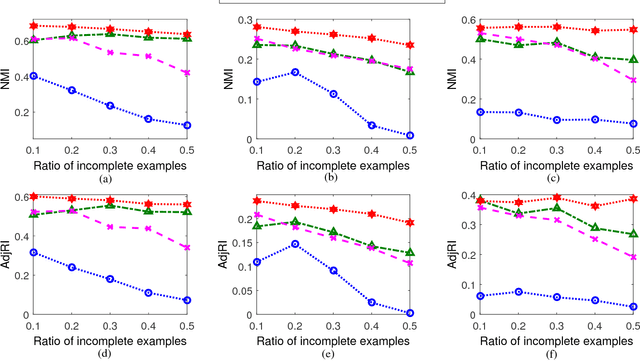

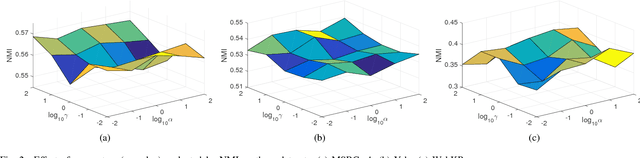

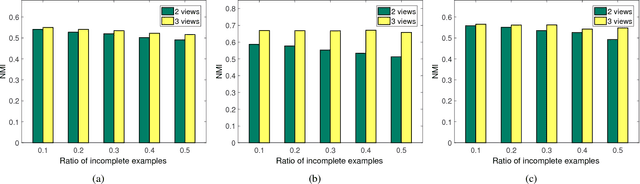

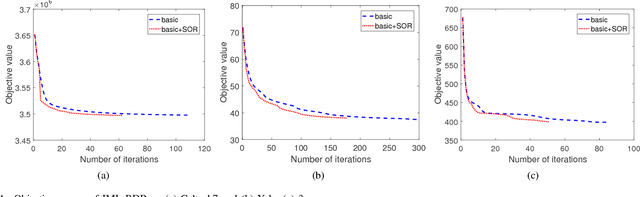

In real-world applications, not all instances in multi-view data are fully represented. To deal with incomplete multi-view data, traditional multi-view algorithms usually throw away the incomplete instances, resulting in loss of available information. To overcome this loss, Incomplete Multi-view Learning (IML) has become a hot research topic. In this paper, we propose a general IML framework for unifying existing IML methods and gaining insight into IML. The proposed framework jointly performs embedding learning and low-rank approximation. Concretely, it approximates the incomplete data by a set of low-rank matrices and learns a full and common embedding by linear transformation. Several existing IML methods can be unified as special cases of the framework. More interestingly, some linear transformation based full-view methods can be adapted to IML directly with the guidance of the framework. This bridges the gap between full multi-view learning and IML. Moreover, the framework can provide guidance for developing new algorithms. For illustration, within the framework, we propose a specific method, termed as Incomplete Multi-view Learning with Block Diagonal Representation (IML-BDR). Based on the assumption that the sampled examples have approximate linear subspace structure, IML-BDR uses the block diagonal structure prior to learn the full embedding, which would lead to more correct clustering. A convergent alternating iterative algorithm with the Successive Over-Relaxation (SOR) optimization technique is devised for optimization. Experimental results on various datasets demonstrate the effectiveness of IML-BDR.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge