Invariance-adapted decomposition and Lasso-type contrastive learning


Oct 13, 2022

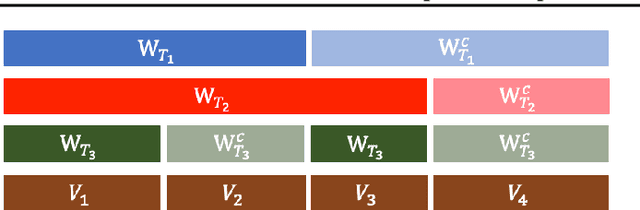

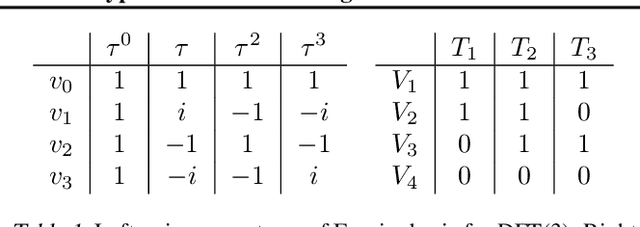

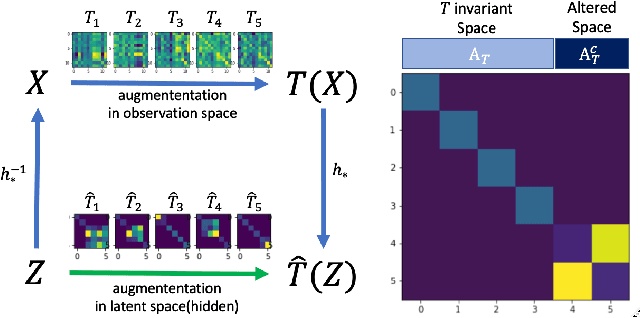

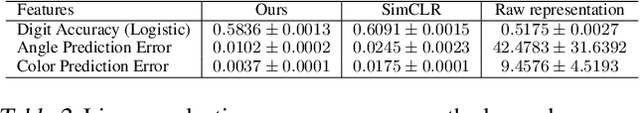

Recent years have witnessed the effectiveness of contrastive learning in obtaining dataset representations that are useful for interpretation and downstream tasks. However, the mechanism behind this effectiveness has not been thoroughly analyzed, and many studies have investigated the data structures captured by contrastive learning. In particular, the recent study of \citet{content_isolate} has shown that contrastive learning is capable of decomposing the data space into the space that is invariant to all augmentations and its complement. In this paper, we introduce the notion of an invariance-adapted latent space, which decomposes the data space into the intersections of the invariant spaces of each augmentation and their complements. This decomposition generalizes the one introduced in \citet{content_isolate}, and describes a structure that is analogous to the frequencies in the harmonic analysis of a group. We experimentally show that contrastive learning with a lasso-type metric can be used to find an invariance-adapted latent space, thereby suggesting a new potential for contrastive learning. We also investigate when such a latent space can be identified up to mixings within each component.
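As a rough illustration of what contrastive learning with a lasso-type metric could look like, the sketch below computes an InfoNCE-style loss in which the similarity between two embeddings is the negative L1 distance. Reading "lasso-type" as the L1 distance is an assumption made here, not a detail stated in the abstract; the function name `l1_info_nce` and the `temperature` parameter are hypothetical, and this is not the authors' implementation.

```python
import numpy as np

def l1_info_nce(z_a, z_b, temperature=1.0):
    """InfoNCE-style loss with negative L1 distance as the similarity.

    z_a, z_b: arrays of shape (n, d); row i of z_a and row i of z_b are
    embeddings of two augmented views of the same sample (a positive pair).
    Assumption: "lasso-type metric" is interpreted as the L1 distance.
    """
    # Pairwise L1 distances between every row of z_a and every row of z_b: (n, n)
    dist = np.abs(z_a[:, None, :] - z_b[None, :, :]).sum(axis=-1)
    logits = -dist / temperature
    # Numerically stable log-softmax over each row
    logits = logits - logits.max(axis=1, keepdims=True)
    log_prob = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    # Positive pairs sit on the diagonal
    return -np.mean(np.diag(log_prob))

rng = np.random.default_rng(0)
z = rng.normal(size=(8, 4))
loss_aligned = l1_info_nce(z, z)          # positives coincide exactly
loss_shuffled = l1_info_nce(z, z[::-1])   # positives are mismatched
```

Unlike the Euclidean distance or cosine similarity, the L1 distance is not invariant to rotations of the latent space, so the coordinate axes of the learned representation are not interchangeable under this loss. That is one plausible reason such a metric might favor a latent space split into distinct components, although the abstract does not spell out the mechanism.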
