Intrinsic Motivation in Object-Action-Outcome Blending Latent Space


Aug 26, 2020

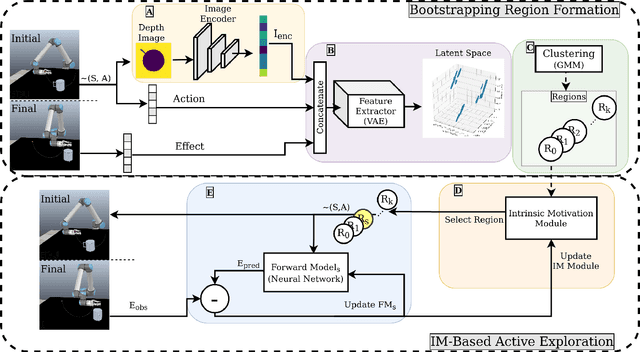

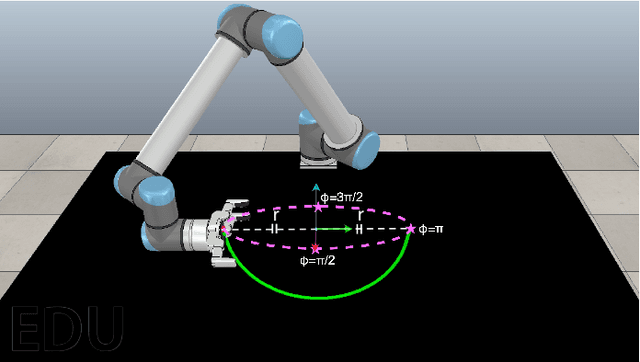

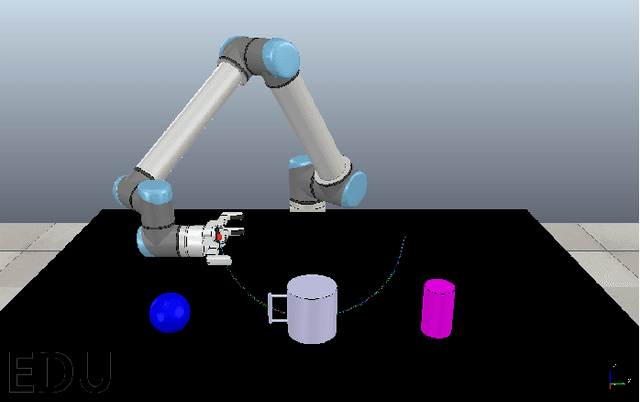

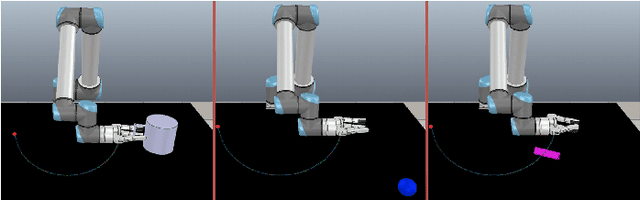

One effective approach for equipping artificial agents with sensorimotor skills is self-exploration. Doing so efficiently is critical, as time and data collection are costly. In this study, we propose an exploration mechanism that blends action, object, and action outcome representations into a latent space, where local regions are formed to host forward model learning. At each exploration step, the agent uses intrinsic motivation to select the forward model with the highest learning progress to adapt. This parallels how infants learn, as high learning progress indicates that the learning problem in the selected region is neither too easy nor too difficult. The proposed approach is validated with a simulated robot in a table-top environment. The robot interacts with different kinds of objects using a set of parameterized actions and learns the outcomes of these interactions. With the proposed approach, the robot organizes its own learning curriculum, as existing intrinsic motivation approaches do, and outperforms them in learning speed. Moreover, the learning regime shows features that partially match infant development. In particular, the proposed system learns to predict the outcomes of grasp actions earlier than those of push actions.
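The abstract does not say how learning progress is measured or exactly how a region is chosen. Purely as an illustration, the sketch below shows learning-progress-based region selection in Python. It assumes an IAC-style definition, where learning progress is the drop in mean prediction error between an older window and a more recent window, and it uses epsilon-greedy selection. `Region`, `select_region`, `window`, and `epsilon` are hypothetical names that do not come from the paper. Forward model training is omitted, and only each region's recorded prediction errors are modeled.

```python
import random
from collections import deque


class Region:
    """Hypothetical local latent-space region hosting one forward model.

    Learning progress is assumed (IAC-style, not necessarily the paper's
    definition) to be the drop in mean prediction error between the older
    and the more recent half of a sliding window of errors.
    """

    def __init__(self, window: int = 5):
        self.window = window
        # Keep only the last 2 * window prediction errors.
        self.errors = deque(maxlen=2 * window)

    def record_error(self, error: float) -> None:
        self.errors.append(error)

    def learning_progress(self) -> float:
        # Not enough history yet: report zero progress.
        if len(self.errors) < 2 * self.window:
            return 0.0
        errs = list(self.errors)
        older = sum(errs[: self.window]) / self.window
        recent = sum(errs[self.window:]) / self.window
        # Positive when the forward model's error is decreasing.
        return older - recent


def select_region(regions, epsilon: float = 0.1, rng=random):
    """Return the index of the region to explore next (epsilon-greedy)."""
    if rng.random() < epsilon:
        # Occasionally explore a random region so stalled regions get revisited.
        return rng.randrange(len(regions))
    progress = [r.learning_progress() for r in regions]
    return max(range(len(regions)), key=lambda i: progress[i])


# Usage: three regions with different error histories.
easy, learnable, hard = Region(), Region(), Region()
for t in range(10):
    easy.record_error(0.01)                  # already mastered: low, flat error
    learnable.record_error(1.0 - 0.08 * t)   # error steadily decreasing
    hard.record_error(1.0)                   # no improvement: high, flat error

print(select_region([easy, learnable, hard], epsilon=0.0))  # → 1
```

In this example, both the "too easy" region and the "too hard" region have zero learning progress, so the greedy choice picks the region whose error is still falling. This mirrors the intuition stated in the abstract. The random branch ensures that every region is sampled now and then, so a region whose progress is temporarily zero can still be re-evaluated.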