Intrinsic Calibration of Depth Cameras for Mobile Robots using a Radial Laser Scanner


Jul 03, 2019

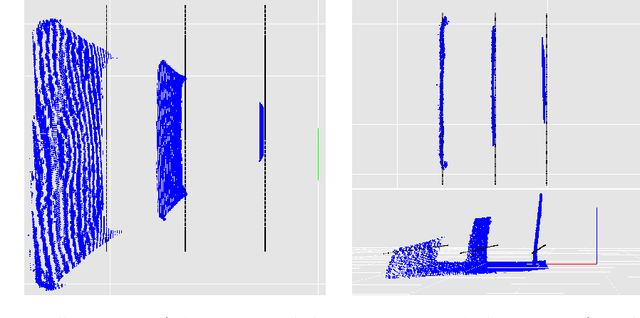

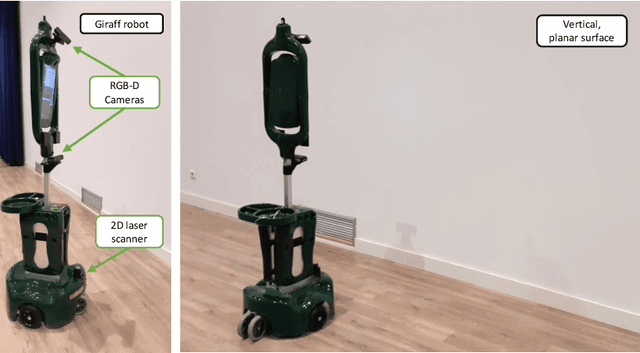

Depth cameras, typically in RGB-D configurations, are common devices in mobile robotic platforms given their appealing features: high frequency and resolution, and low price and power requirements, among others. These sensors may come with significant, non-linear errors in the depth measurements that jeopardize robot tasks such as free-space detection, environment reconstruction, or visual robot-human interaction. This paper presents a method to calibrate such systematic errors with the help of a second, more precise range sensor, in our case a radial laser scanner. Contrary to what it may seem at first, this is not a serious limitation in practice, since these two sensors are often mounted together on mobile robotic platforms, as they complement each other well. Moreover, the laser scanner can be used only for the calibration process and removed afterwards. The main contributions of the paper are: i) the calibration is formulated from a probabilistic perspective as a Maximum Likelihood Estimation problem, and ii) the proposed method can be easily executed automatically by mobile robotic platforms. To validate the proposed approach, we evaluated both the local distortion of 3D planar reconstructions and the global shifts in the measurements, obtaining considerably more accurate results after calibration. A C++ open-source implementation of the presented method has been released for the benefit of the community.
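As a rough illustration of what a Maximum Likelihood formulation can look like in its simplest form, the sketch below fits an affine depth correction from paired camera and laser readings. It is not the model or the code from the paper: the affine form, the assumption of independent Gaussian noise with equal variance (under which the maximum likelihood estimate coincides with ordinary least squares), and the names `AffineDepthCorrection` and `fitAffineCorrection` are all assumptions made for this example.

```cpp
#include <cstddef>
#include <stdexcept>
#include <vector>

// Hypothetical correction model (an assumption of this sketch, not the paper's):
// corrected depth = scale * measured depth + offset.
struct AffineDepthCorrection {
    double scale;
    double offset;
    double apply(double measured) const { return scale * measured + offset; }
};

// Fits the affine correction from paired readings of the same surface points.
// Assuming i.i.d. Gaussian residuals with respect to the laser ranges, the
// maximum likelihood estimate is the ordinary least-squares solution, which
// has the closed form computed below.
AffineDepthCorrection fitAffineCorrection(const std::vector<double>& cameraDepth,
                                          const std::vector<double>& laserRange) {
    if (cameraDepth.size() != laserRange.size() || cameraDepth.size() < 2) {
        throw std::invalid_argument("need at least two paired measurements");
    }

    const double n = static_cast<double>(cameraDepth.size());
    double sumX = 0.0, sumY = 0.0, sumXX = 0.0, sumXY = 0.0;
    for (std::size_t i = 0; i < cameraDepth.size(); ++i) {
        sumX += cameraDepth[i];
        sumY += laserRange[i];
        sumXX += cameraDepth[i] * cameraDepth[i];
        sumXY += cameraDepth[i] * laserRange[i];
    }

    // The denominator is zero only when all camera depths are identical,
    // in which case scale and offset cannot be separated.
    const double denom = n * sumXX - sumX * sumX;
    if (denom == 0.0) {
        throw std::invalid_argument("camera depths must not all be equal");
    }

    const double scale = (n * sumXY - sumX * sumY) / denom;
    const double offset = (sumY - scale * sumX) / n;
    return AffineDepthCorrection{scale, offset};
}
```

A caller would pass depths and ranges (in the same units) that correspond to the same observed points, then use `apply` to correct new camera readings. A single global affine term cannot capture the non-linear, local distortions mentioned in the abstract, so this should be read only as the smallest instance of the idea.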
