Interpreting Predictive Process Monitoring Benchmarks

Dec 22, 2019

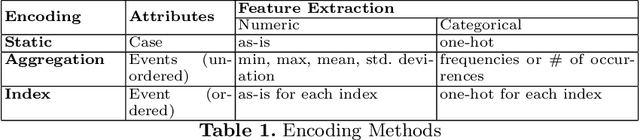

Predictive process analytics has recently gained significant attention, yet its successful adoption in organisations depends on how far users can trust the predictions of the underlying machine learning algorithms, which are often applied as a 'black box'. Without an understanding of the rationale behind the black-box machinery, users will lack trust in the predictions, be reluctant to use them and, in the worst case, suffer the consequences of an incorrect decision based on a prediction. In this paper, we emphasise the importance of interpreting predictive models, in addition to evaluating them with conventional metrics such as accuracy, in the context of predictive process monitoring. We review existing studies on business process monitoring benchmarks for predicting process outcomes and remaining time. We derive explanations that present the behaviour of the entire predictive model as well as explanations that describe a particular prediction. These explanations are used to reveal data leakages and to assess both the interpretability of the features used by the model and the degree to which the existing benchmark models use process knowledge. Findings from this exploratory study motivate the need to incorporate interpretability into predictive process analytics.
