Interpreting Deep Neural Networks with Relative Sectional Propagation by Analyzing Comparative Gradients and Hostile Activations

Dec 12, 2020

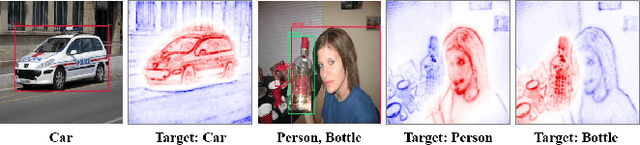

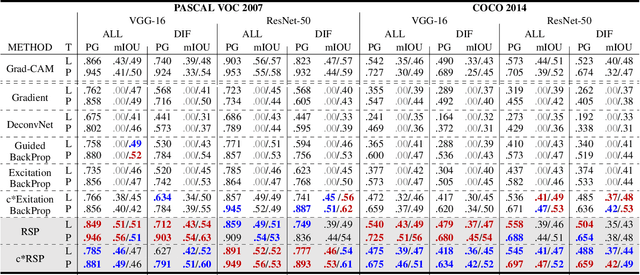

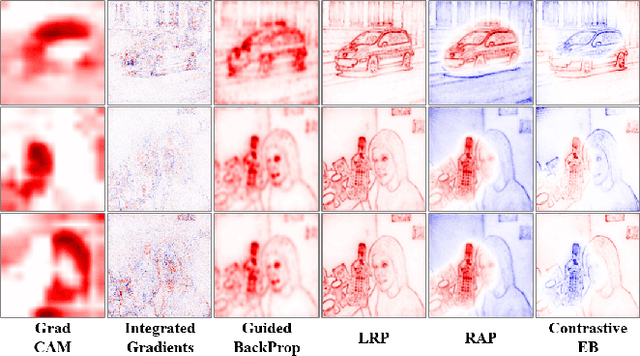

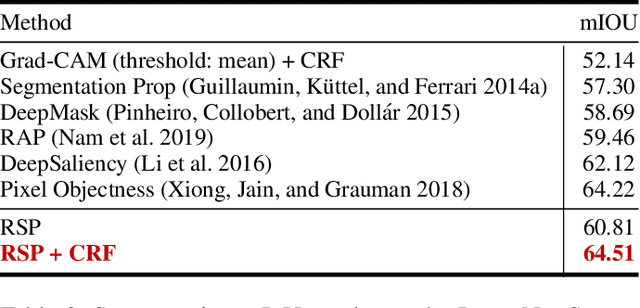

The transparency of Deep Neural Networks (DNNs) is hampered by their complex internal structures and by nonlinear transformations along deep hierarchies. In this paper, we propose a new attribution method, Relative Sectional Propagation (RSP), that fully decomposes output predictions into attributions that are class-discriminative and show clear objectness. We carefully revisit the shortcomings of backpropagation-based attribution methods, which face trade-offs when decomposing DNNs. We define a hostile factor as an element that interferes with finding the attributions of the target, and we propagate it in a distinguishable way to overcome the non-suppressed nature of activated neurons. As a result, it is possible to assign bi-polar relevance scores to the target (positive) and hostile (negative) attributions while keeping each attribution aligned with its importance. We also present purging techniques that prevent the gap between the relevance scores of the target and hostile attributions from shrinking during backward propagation, by eliminating units that conflict with the channel attribution map. Our method therefore decomposes the predictions of DNNs with clearer class-discriminativeness and more detailed elucidation of activated neurons than conventional attribution methods. In a verified experimental environment, we report results on three assessments, (i) Pointing Game, (ii) mIoU, and (iii) Model Sensitivity, using the PASCAL VOC 2007, MS COCO 2014, and ImageNet datasets. The results demonstrate that our method outperforms existing backward decomposition methods and produces distinctive and intuitive visualizations.
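To make the first two assessments concrete, the following is a minimal sketch of how a Pointing Game hit and an IoU score could be computed for a single bi-polar attribution map. It is not the authors' evaluation code. The function names, the nested-list representation, the absence of a pixel tolerance around the peak, and the choice of thresholding at zero (treating positive relevance as target evidence) are all assumptions made for illustration.

```python
from typing import List, Tuple

Map = List[List[float]]   # attribution map: rows of relevance scores (hypothetical format)
Mask = List[List[bool]]   # ground-truth object mask with the same shape


def argmax_2d(attr: Map) -> Tuple[int, int]:
    """Return (row, col) of the highest relevance score (first one on ties)."""
    best = (0, 0)
    for r, row in enumerate(attr):
        for c, value in enumerate(row):
            if value > attr[best[0]][best[1]]:
                best = (r, c)
    return best


def pointing_game_hit(attr: Map, mask: Mask) -> bool:
    """Count a hit when the peak of the attribution map lies inside the object mask.

    Assumption: no pixel tolerance is applied around the peak.
    """
    r, c = argmax_2d(attr)
    return mask[r][c]


def iou(attr: Map, mask: Mask, threshold: float = 0.0) -> float:
    """IoU between the thresholded attribution map and the object mask.

    Assumption: pixels with relevance above `threshold` are treated as
    predicted foreground; zero separates positive from negative relevance.
    """
    inter = 0
    union = 0
    for attr_row, mask_row in zip(attr, mask):
        for value, inside in zip(attr_row, mask_row):
            pred = value > threshold
            inter += int(pred and inside)
            union += int(pred or inside)
    return inter / union if union else 0.0


# Toy example: positive scores mark target evidence, negative scores hostile evidence.
attr_map = [[0.9, 0.2, -0.5],
            [0.1, -0.3, -0.8]]
object_mask = [[True, True, False],
               [False, False, False]]

hit = pointing_game_hit(attr_map, object_mask)
score = iou(attr_map, object_mask)
```

Averaging `hit` over a dataset would give a Pointing Game accuracy, and averaging `score` would give an mIoU under these assumptions; the thresholds and tolerances used in the paper's own evaluation may differ.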
